Microsoft has released the technical preview of IoT Central, the company's SaaS offering designed to let organizations roll out IoT-based solutions with minimal configuration or knowledge of the complexities of integrating operational systems with IT.

The company, which announced IoT Central back in April, said customers can use its preconfigured application templates to deploy IoT capabilities within hours, without needing developers skilled in IoT. The new SaaS offering will complement Microsoft's Azure IoT Suite, a PaaS-based offering that requires more customization and systems integration.

"For Microsoft IoT Central, the skill level [required] is really low," said Sam George, Microsoft's director of Azure IoT, during a press briefing earlier this week. Both IoT Central and the IoT Suite are built upon Azure IoT Hub as a gateway that the company said provides secure connectivity, provisioning and data gathering from IoT-enabled endpoints and devices.

They also both use other Azure services such as Machine Learning, Stream Analytics, Time Series Insights and Logic Apps. The Azure IoT Suite is consumption-based, while IoT Central uses a usage-based subscription model starting at 50 cents per user per month (or a fixed rate of $500 per month). The company is also offering free trials.
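To put the two IoT Central price points in perspective, here is a minimal Python sketch that compares them using only the figures cited above. It assumes, hypothetically, that the 50-cent rate is charged per user (the unit cited here) and that the $500 fixed rate covers the same service with no other limits; the actual tier terms may differ.

```python
# Hypothetical comparison of the two IoT Central price points cited above:
# a metered rate of $0.50 per user per month versus a flat $500 per month.
# Assumes both options are otherwise equivalent; real tier terms may differ.

METERED_RATE_USD = 0.50   # per user, per month (figure from the article)
FIXED_RATE_USD = 500.00   # per month (figure from the article)


def monthly_cost(users: int) -> float:
    """Return the metered monthly cost in dollars for a given user count."""
    return users * METERED_RATE_USD


def break_even_users() -> int:
    """Return the user count at which metered cost equals the fixed rate."""
    return round(FIXED_RATE_USD / METERED_RATE_USD)


def cheaper_option(users: int) -> str:
    """Name the less expensive option, favoring metered pricing on a tie."""
    return "metered" if monthly_cost(users) <= FIXED_RATE_USD else "fixed"


if __name__ == "__main__":
    print(break_even_users())
    for n in (200, 1000, 5000):
        print(n, monthly_cost(n), cheaper_option(n))
```

Under these assumptions the two options cost the same at 1,000 users; below that the metered rate is cheaper, and above it the fixed rate wins.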

"Up until now IoT has been out of reach for the average business and enterprise," George said. "We think it's time for IoT to be broadly available. There is nothing with rapid development like this from a major cloud vendor on the market."

IoT Central gives each device a unique security key, and the service provides a set of device libraries, including the Azure IoT device SDKs, which support platforms such as Node.js, C, C# and Java. The service offers native support for IoT device connectivity protocols such as MQTT 3.1.1, HTTP 1.1 and AMQP 1.0. The company claims IoT Central can scale to millions of connected devices and millions of events per second, gathered through the Azure IoT cloud gateway and stored in its time-series storage.

The number of IoT-based connected "things" is forecast at 8.4 billion for this year and on pace to reach 20 billion by 2020, according to Gartner. But ITIC Principal Analyst Laura DiDio warns that most customers are still in the early stages of true IoT deployments, though she said they are on pace to ramp up rapidly over the next two-to-three years. "Scalability will be imperative," DiDio said. "Corporations will require their devices, applications and networks to grow commensurately with their business needs."

Posted by Jeffrey Schwartz on 12/06/2017

It's no secret that Microsoft wants enterprises to migrate all their PC users to Windows 10 as a service and to move to its new modern approach to configuring, securing and managing those systems and the applications associated with them. This year's launch of Microsoft 365 -- a subscription service that bundles Windows 10 licenses, Office 365 and the Enterprise Mobility + Security (EMS) service -- is the strongest sign yet that the company is pushing IT pros away from the traditional approach of imaging and managing PCs with System Center Configuration Manager (SCCM) in favor of Microsoft Intune in EMS.

While many IT pros have embraced the new modern systems management model, others are bemoaning it and quite a few remain unsure, according to a spot survey of Redmond readers over the weekend.

Nearly half, or 48 percent, of Redmond subscribers who are planning Windows 10 migrations said they intend to continue using SCCM to configure, deploy and manage those systems, according to 201 respondents to an online poll. Yet 31 percent are undecided and only 4 percent have decided on Microsoft's EMS offering. Another 9 percent will use a third-party MDM/EMM/MAM offering, and a nearly equal share will implement a mixture of the aforementioned options.

While 19 percent responded that they plan to use Microsoft 365 where it makes sense, only 10 percent plan to use it enterprisewide. A formidable number, 42 percent, said their organization has no plans to use Microsoft 365, while 28 percent are undecided.

As Intune takes on more automated deployment capabilities, organizations upgrading to Windows 10 -- which they must do by 2020 -- may find SCCM becoming less essential in a growing number of scenarios. Brad Anderson, Microsoft's corporate vice president for enterprise client mobility, drove that point home during a keynote session at the recent Ignite conference in Orlando. "One of the big things about modern management is we are encouraging you to move away from imaging," Anderson said. "Stop maintaining those images and all of the libraries and drivers and let's move to a model where we can automatically provision you from the cloud."

Anderson estimated that SCCM now manages 75 percent of all PCs, hundreds of millions of them, and continues to grow by 1 million users per week -- hence the point that this change is going to happen quickly. Microsoft continues to upgrade SCCM, moving from its traditional release cycle of every two to three years to three releases per year under its Current Branch model, with new test builds issued to Insiders every month. Microsoft also now offers an SCCM co-management bridge and PowerShell scripts for Intune.

Organizations have various decisions to make, and there are still many moving parts. That is why, even though 600 million systems now run Windows 10, many organizations still haven't migrated or are only in the early stages of doing so.

Join MVP and longtime Redmond contributor Greg Shields, Enterprise Strategy Group Senior Analyst Mark Bowker and me tomorrow at 11 a.m. PT for a Redmond webinar: Microsoft 365 for Modern Workplace Management: Considerations for Moving to a Post-SCCM World. You can sign up here.

Posted by Jeffrey Schwartz on 12/04/2017

The Box collaboration and enterprise content management (ECM) service is now available in Microsoft's Azure public cloud, the latest in a series of integrations between the two companies in recent years. Box and Microsoft, which are also competitors with overlapping collaboration capabilities, decided two years ago that it was in their respective best interests to work together, starting with basic Office 365 integration.

Both companies extended their partnership in June and elaborated on their roadmap at last month's BoxWorks 2017 conference. Box indicated then that the first new capability would come with offering its service in Microsoft's Azure global regions. The service that Box made available last week allows Box customers to use Azure as their primary storage for Box content, Sanjay Manchanda said in an interview last week.

"It's Box content management capabilities with the content being stored in Azure," he said. Box historically has run its own datacenter operations throughout the world, but decided its best route to scaling would be to partner with the large global cloud providers. In addition to its arrangement with Microsoft, Box has partnerships with Amazon Web Services (AWS) and IBM. Manchanda, who worked at Microsoft for more than 10 years before joining Box, said the partnership with his former employer is similar to its relationship with IBM, in that both include technical and co-marketing pacts.

Manchanda noted that giving Box customers access to its service in Azure will simplify cross-organization collaboration among employees and their external partners, suppliers and customers. It will also provide secure content management by tapping Box's integrations with more than 1,400 SaaS-based applications, including those offered via Office 365, and will allow organizations to do the same when building custom applications.

The roadmap calls for Box to use Microsoft's Azure Key Vault service, which lets organizations bring and manage their own encryption keys. While Box offers its own encryption service, Box KeySafe, which uses a hardware security module (HSM), that service won't be part of the new offering. "We plan to use the service that Azure Key Vault provides," he said.

Box also plans, as part of its roadmap, to integrate Microsoft Cognitive Services with its service, allowing customers to automatically identify content, categorize it, run workflows and make it easier for users to find information.

The service is now available in the United States, and Box intends to roll it out throughout Microsoft's 40 Azure regions, allowing customers with data sovereignty requirements to ensure their data doesn't leave the confines of a specific country or locale, Manchanda explained. Asked whether Box plans to support Azure Stack, either deployed on a customer's premises or via a third-party managed services provider, Manchanda said that isn't part of the current roadmap -- but didn't rule it out if there's customer demand.

Posted by Jeffrey Schwartz on 11/29/2017

McAfee is filling a void in its security portfolio with its plan to acquire leading cloud access security broker (CASB) provider Skyhigh Networks. The deal to acquire Skyhigh for an undisclosed amount, announced today, will give McAfee one of the most highly regarded CASB offerings as the company looks to join the fray of vendors blending cloud security, network protection and endpoint management.

Prior to spinning off from Intel earlier this year, McAfee determined it needed to focus on two key threat protection and defense control points: the endpoint and the cloud, a conclusion Symantec similarly reached last year when it acquired Blue Coat for $4.6 billion. Cisco also came to that conclusion last year with its acquisition of CloudLock, which it is integrating with its OpenDNS, Talos threat analytics and existing firewall and data loss prevention (DLP) offerings.

Microsoft jumped into the CASB arena two years ago with the acquisition of Adallom, which was relaunched last year as Cloud App Security and refreshed in September at the Ignite conference with support for conditional access. It's now offered as an option with Microsoft's Enterprise Mobility + Security (EMS) service. Other popular providers of CASB tools include Bitglass, CipherCloud, Forcepoint, Imperva and Netskope.

Raja Patel, McAfee's VP and general manager for corporate products, said in an interview that customers and channel partners have all asked what the company had planned in terms of offering a CASB solution. "We think there is a large market for CASBs and the capabilities that Skyhigh brings," Patel said. "They were the original CASB player in the marketplace, and they have led the category and really moved the needle in terms of their leadership and evolving the category."

Upon closing of the deal, which is scheduled to take place early next year, Skyhigh Networks CEO Rajiv Gupta will report to McAfee CEO Chris Young and will oversee the combined vendor's cloud security business. Gupta's team, which will join McAfee, will also help integrate Skyhigh Networks' CASB with McAfee's endpoint and DLP offerings. "Combined with McAfee's endpoint security capabilities and operations center solutions with actionable threat intelligence, analytics and orchestration, we will be able to deliver a set of end-to-end security capabilities unique in the industry," Gupta said in a blog post.

Patel said the company plans to let customers bring the policies implemented in their McAfee endpoint protection software and network DLP systems to their cloud infrastructure and SaaS platforms. Another priority is bringing more context to its endpoint tools and integrating the CASB with Web gateways and cloud service providers.

Only 15 percent of organizations used CASBs last year, but Patel cited a Gartner forecast that predicts adoption will grow to 85 percent by 2020. "If you look at the exponential growth of people adopting public cloud environments over the past two years, it extends to moving the security posture around it."

Posted by Jeffrey Schwartz on 11/27/2017

Microsoft is adding MariaDB to the list of open source relational database platforms it will bring to Azure. MariaDB will join MySQL and PostgreSQL, which Microsoft announced earlier this year and which are now available in preview in Azure. In addition to adding it to the menu of open source databases available in Azure, Microsoft has joined the MariaDB Foundation as a Platinum sponsor.

MariaDB is a fork -- or, as the foundation describes it, "an enhanced, drop-in replacement" -- of MySQL, developed in the wake of Oracle's acquisition of Sun Microsystems, which had earlier bought the popular open source database. MySQL founder Michael "Monty" Widenius developed MariaDB to ensure there would be an option that maintained MySQL's open source principles.

Microsoft announced its MariaDB support this week at its annual Connect conference, held in New York. While MariaDB is primarily SQL-based, it also has GIS and JSON interfaces, and it is used by notable organizations including Google, WordPress.com and Wikipedia.

Scott Guthrie, Microsoft's executive VP of cloud and enterprise, told analysts and media that MariaDB has become popular with a growing segment of developers. "Like our PostgreSQL and MySQL options, it's 100 percent compatible with all of the existing drivers, libraries and tools and can take full advantage of the rich MariaDB ecosystem," Guthrie said during his Connect keynote address.

Microsoft announced MySQL and PostgreSQL as managed service offerings in Azure at its annual Build conference, which took place back in May in Seattle. Since releasing the previews, Microsoft has added PostgreSQL extensions and compute tiers, and the services are now available in 16 regions and on pace for release in all 40 regions, said Tobias Ternstrom, principal group program manager for Microsoft's Database Systems Group, in a blog post.

Ternstrom recently visited Widenius in Sweden, where they discussed adding MariaDB to Azure; Microsoft ultimately decided to join the foundation and participate in the open source project. "We are committed to working with the community to submit pull requests (hopefully improvements...) with the changes we make to the database engines that we offer in Azure," Ternstrom noted in a blog post. "It keeps open source open and delivers a consistent experience, whether you run the database in the cloud, on your laptop when you develop your applications, or on-premises."

Microsoft has posted a waitlist for those wanting to test the forthcoming preview.

Posted by Jeffrey Schwartz on 11/20/2017

Microsoft is looking to help remotely distributed development teams collaborate on code in real time with the introduction of Visual Studio Live Share. The new capability, demonstrated at the Microsoft Connect conference in New York and streamed online, works with both the Visual Studio IDE and the lightweight, multi-platform VS Code editor and debugger.

Visual Studio Live Share is among several new capabilities the company is adding to its tooling portfolio and suite of Azure services, focused on making it easier for organizations to shift to DevOps management and methodologies, support continuous integration/continuous deployment (CI/CD) release cycles and target multiple platforms and frameworks.

Based in Azure, the new Visual Studio Live Share lets remotely distributed developers create live sessions in which they can interactively share code projects to troubleshoot problems and iterate in real time, while working in their preferred environment and setup. Visual Studio Live Share aims to do away with the current practice of remote development teams exchanging screen images with one another via e-mail or chat sessions using Slack or Teams, enabling live code-sharing sessions instead.

The core Visual Studio Live Share service lets developers using either the full Visual Studio IDE or the lightweight VS Code editor share code with one another, and participants don't have to use the same client platform, or even the same programming environments or languages.

"We really think this is a game changer in terms of enabling real-time collaboration and development," said Scott Guthrie, Microsoft's executive VP for cloud and enterprise, speaking during the Connect keynote. "Rather than just screen sharing, Visual Studio Live Share lets developers share their full project context with a bidirectional, instant and familiar way to jump into opportunistic, collaborative programming," he added in a blog post outlining all of the Connect announcements.

As Chris Dias, Microsoft's Visual Studio group product manager, demonstrated VS Live Share during the keynote, numerous developers voiced their approval on Twitter, which Guthrie remarked on as the demo concluded. Julia Liuson, Microsoft's corporate VP for Visual Studio, said in an interview at Connect that the reaction on Twitter reflected the frustration developers typically encounter with the limitations of sharing screen grabs or relying on chat sessions or phone conversations. "It's painful. We hear this all the time, even in our own day interactions," she said.

The company released a limited private preview of VS Live Share yesterday but did not disclose how it will be offered, or whether it might be extended to other development environments over time. "We're going to make sure we have the right offering first and will talk later about the business model," Liuson said, adding that some sort of free version is planned.

Microsoft introduced multiple features at Connect that will be coming to Visual Studio, as described below.

Azure DevOps Projects: A complete DevOps pipeline running on Visual Studio Team Services, Azure DevOps Projects is available in the Azure Portal in preview. It's aimed at making DevOps the "foundation" for all new projects, supporting multiple frameworks, languages and Azure-hosted deployment targets.

Visual Studio Connected Environment for Azure Container Service (AKS): Building on Microsoft's new AKS offering, Microsoft Principal Program Manager Scott Hanselman demonstrated how developers can edit and debug modern or cloud-native apps running in Kubernetes clusters using Visual Studio, VS Code or a command-line interface. He also showed how developers can switch between .NET Core/C# and Node, and between Visual Studio and VS Code. The service is also in preview.

Visual Studio App Center: The app lifecycle platform, which lets developers build, test, deploy, monitor and iterate based on live usage and crash analytics telemetry, is now generally available. Microsoft describes Visual Studio App Center as a shared environment for multiplatform mobile, Windows and Mac apps, supporting Objective-C, Swift, Java, Xamarin and React Native, connected to any code repository.

Visual Studio Tools for AI: A new modeling capability for Visual Studio, now in preview, will give developers and data scientists debugging and editing support for key deep learning frameworks, including Microsoft's Cognitive Toolkit, TensorFlow and Caffe, letting them build, train, manage and deploy their models locally and scale to Azure.

Azure IoT Edge: A service that deploys cloud intelligence to IoT devices via containers, running workloads such as Azure Machine Learning, Azure Functions and Azure Stream Analytics, is now in preview.

Posted by Jeffrey Schwartz on 11/16/2017

Microsoft and Apache Spark creator Databricks are building a globally distributed streaming analytics service natively integrated with Azure for machine learning, graph processing and AI-based applications.

The new Databricks Spark-as-a-service offering was introduced at Microsoft's annual Connect developer conference, which kicked off today in New York. The service, available in preview, is among an extensive list of announcements covering Microsoft's SQL and NoSQL database products and services; productivity, cross-platform and language improvements to the Visual Studio and VS Code developer tools; new DevOps capabilities; and new machine learning, AI and IoT tooling.

During the opening keynote, Scott Guthrie, Microsoft's executive VP for Cloud and Enterprise, emphasized that Databricks is the creator and steward of Apache Spark, and that the new service will enable organizations to build modern data warehouses that support self-service analytics and machine learning using all data types in a secure and compliant architecture.

Databricks has engineered a first-party Spark-as-a-service platform for Azure. "It allows you to quickly launch and scale up the Spark service inside the cloud on Azure," Guthrie said. "It includes an incredibly rich, interactive workspace that makes it easy to build Spark-based workflows, and it integrates deeply across our other Azure services."

Those services include Azure SQL Data Warehouse, Azure Storage, Azure Cosmos DB, Azure Active Directory, Power BI and Azure Machine Learning, Guthrie said. It also provides integration with Azure Data Lake Store, Azure Blob storage and Azure Event Hubs. "It's an incredibly easy way to integrate Spark deeply across your apps and drive richer intelligence from it," he said.

Databricks customers have been pushing the company to build its Spark platform as a native Azure service, said Ali Ghodsi, the company's cofounder and CEO, who joined Guthrie on stage. "We've been hearing overwhelming demand from our customer base that they want the security, they want the compliance and they want the scalability of Azure," Ghodsi said. "We think it can make AI and big data much simpler."

In addition to integrating with the various Azure services, it's designed to let users create new data models. According to Databricks, a user can target data regardless of size or create projects with various analytics services including Power BI, SQL, Streaming, MLlib and Graph. "Once you manage data at scale in the cloud, you open up massive possibilities for predictive analytics, AI, and real-time applications," according to a technical overview of the Azure Databricks service. "Over the past five years, the platform of choice for building these applications has been Apache Spark. With a massive community at thousands of enterprises worldwide, Spark makes it possible to run powerful analytics algorithms at scale and in real time to drive business insights."

However, deploying, managing and securing Spark at scale has remained a challenge, which Databricks believes will make the Azure service compelling.

Internally, Databricks is using Azure Container Service to run the Azure Databricks control plane and data planes in containers, according to the company's technical primer. It's also using accelerated networking services to improve performance on the latest Azure hardware.

Posted by Jeffrey Schwartz on 11/15/2017

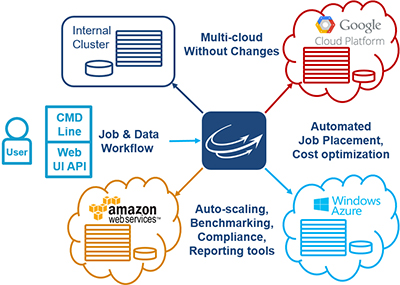

More than half of large enterprises that have implemented Cloud Foundry as their application runtime, orchestration and DevOps environment for business modernization projects are using it across multiple clouds, according to the results of a survey published last month.

Adoption of Cloud Foundry Application Runtime is on the rise among large and midsize enterprises, who are running new or transformed business apps across different clouds, including Amazon Web Services, Microsoft Azure, Google Cloud Platform and OpenStack, as well as in VMware vSphere virtual machines.

The survey of 735 users, consisting of developers, architects, IT executives and operators, was sponsored by the Cloud Foundry Foundation, a 70-member consortium, and revealed that 53 percent are using it across multiple clouds. According to the survey, 54 percent of Cloud Foundry Application Runtime users are running it on AWS, followed by 40 percent on VMware's vSphere, 30 percent on Microsoft's Azure, 22 percent on OpenStack and 19 percent on Google Cloud Platform. An additional 17 percent said they are running it on various provider-managed PaaS offerings, including IBM Bluemix.

"Whether companies come to Cloud Foundry early or late in their cloud journey, the ability to run Cloud Foundry Application Runtime across multiple clouds is critical to most users," according to an executive summary of the report based on the survey conducted by research and consulting firm ClearPath Strategies in late August.

Accelerated Development and Deployment Reported

Organizations that use the open source Cloud Foundry Application Runtime are seeing accelerated application development cycles when building their cloud-native applications, the survey also found. A majority, 51 percent, said it previously took more than three months to deploy a cloud application, while only 16 percent could do so in under a week. After moving their applications to Cloud Foundry Application Runtime, 46 percent report cloud app development cycles of under a week, including 25 percent who said it takes less than a day, while only 18 percent report development cycles of more than three months.

Before using Cloud Foundry Application Runtime, 58 percent said cloud applications were developed and deployed manually, 52 percent used custom installed scripts, 38 percent relied on configuration management tools, 27 percent VM images, 20 percent Docker containers and 19 percent Linux packages.

The release of Cloud Foundry Container Runtime, the Kubernetes-based container management project, has generated significant interest among Cloud Foundry Application Runtime users. Half of them currently use containers, such as Docker, and another 35 percent are evaluating or deploying containers.

A vast majority, 71 percent, of Cloud Foundry Application Runtime users who are currently using or evaluating container engines, primarily Docker or CoreOS's rkt, said they are interested in adding container orchestration and management to their Cloud Foundry Application Runtime environment now that Cloud Foundry Container Runtime is available.

A majority, 54 percent, use Cloud Foundry to develop, deploy and manage microservices, with 38 percent using it for their Web sites, 31 percent for internal business applications, 27 percent for software-as-a-service (SaaS) and 8 percent for legacy software transformation.

Early Stages

While Cloud Foundry is a relatively new technology -- only 15 percent have used it for more than three years and 45 percent for less than a year, with 61 percent in the early stages, according to the survey -- a noteworthy 39 percent report they have already integrated it broadly. Also, while 49 percent of those adopting it are large enterprises such as Ford, Home Depot and GE, 39 percent are smaller enterprises and 39 percent are small and medium businesses (SMBs), according to the breakdown of respondents.

The number of applications in deployment is still relatively small. More than half, 54 percent, have deployed fewer than 10 apps, 22 percent have deployed between 11 and 50 apps and only 8 percent have deployed more than 500.

Pivotal Cloud Foundry, the leading commercial distribution of Cloud Foundry from Dell Technologies subsidiary Pivotal, is among the most widely consumed services in Azure, according to Microsoft. Redmond contributor Michael Otey explains how to deploy it in Azure, which you can find here.

Posted by Jeffrey Schwartz on 11/13/2017

Microsoft is readying a new lightweight database development and management tool that aims to give DBAs and developers common DevOps tooling to manage Microsoft's various on-premises and cloud database offerings. The new Microsoft SQL Operations Studio, demonstrated for the first time at last week's PASS Summit in Seattle, brings together the capabilities of SQL Server Management Studio (SSMS) and SQL Server Data Tools (SSDT) with a modern, cross-platform interface.

The company demonstrated the forthcoming tool during the opening PASS keynote, showing the ability to rapidly deploy Linux and Windows Containers into SQL Server, Azure SQL Database and Azure SQL Data Warehouse. The first technical preview is set for release in the coming weeks. SQL Operations Studio lets developers and administrators build and manage T-SQL code in a more agile DevOps environment.

"We believe this is the way of the future," said Rohan Kumar, Microsoft's general manager of database systems engineering, who gave the opening keynote at last week's PASS event. "It's in its infancy. We see a lot of devops experiences, [where] development and testing is being used right on containers, but this is only going to get better and SQL is already prepared for it."

Kumar said Microsoft will release a preview for Windows, Mac and Linux clients within the next few weeks. It will enable "smart" T-SQL code snippets and customizable dashboards to monitor and discover performance bottlenecks in SQL databases, whether on-premises or in Azure, he explained. "You'll be able to leverage your favorite command line tools like Bash, PowerShell, sqlcmd, bcp and ssh in the Integrated Terminal window," he noted in a blog post. Kumar added that users can contribute directly to SQL Operations Studio via pull requests to the GitHub repository.

Joseph D'Antonio, a principal consultant with Denny Cherry and Associates and a SQL Server MVP, has been testing SQL Operations Studio for more than six months. "It's missing some functionality but it's a very solid tool," said D'Antonio, who also is a Redmond contributor. "For the most part, this is VS Code, with a nice database layer."

Posted by Jeffrey Schwartz on 11/06/2017

Microsoft's Power BI can now query 10 billion rows of data, but a forthcoming release will push that threshold to 1 trillion, a capability demonstrated at this week's annual PASS Summit, where the company also released the first on-premises version, Power BI Report Server.

Microsoft gave Power BI major play at PASS, held in Seattle, where the company also underscored the recently released SQL Server 2017 and its support for Linux and Docker, hybrid implementations of the on-premises database with SQL Azure and its next-generation NoSQL database Cosmos DB.

Christian Wade, a Microsoft senior program manager, demonstrated the ability to search telemetry data from the cell phones of 20 million people traveling across the U.S., picking up their location data and battery usage as often as 500 times per day from each user. Though it wasn't actual usage data, Wade showed how long it was taking people to reach various destinations based on their routes by merely dragging and dropping data from the Power BI dashboard interface. Wade queried Microsoft's Spark-based Azure HDInsight service.

"This is what you will be able to do with the Power BI interface and SQL Azure Analysis Services," Wade said during a brief interview following his demonstration. Wade performed the demo during a Wednesday session focused on Power BI, which followed the opening keynote by Rohan Kumar, Microsoft's general manager of database engineering. During the main keynote, Wade demonstrated a query against a 9 TB instance with 10 billion rows, the limit supported in the current release.

"This is a vision of what's to come, of how we are going to unlock these massive data sets," Wade said, regarding the prototype demo. According to Wade, the demo marked the first time anyone was able to use Power BI to perform both direct and in-memory queries against the same tabular data engine.

Kamal Hathi, general manager of Microsoft's BI engineering team, described the new threshold as a key milestone. "We have a history with analysis services and using the technology to build smart aggregations and apply them to large amounts of data," Hathi said in an interview. "It's something we have been working on for many years. Now we are bringing it to a point where we can bring it to standard big data systems like Spark and with the power of Power BI."

While he wouldn't say when it will be available, or if there will be a technical preview, Hathi said it's likely Microsoft will release it sometime next year.

What is now available is the new Power BI Report Server, which brings the SaaS-based capability on-premises for the first time. It still requires the SaaS service, but addresses organizations that can't let certain datasets leave their own environments.

Microsoft is offering the Power BI Report Server only for its Power BI Premium customers -- typically large organizations that pay based on capacity rather than on a per-user basis. The Power BI Report Server lets users embed reports and analytics directly into their apps by using the APIs of the platform, Kumar said during the keynote. "It essentially allows you to create report on-premises using your Power BI desktop tool. And once your report gets created you can either save it on premises to the reporting server [or the] cloud service," Kumar said. "The flexibility is quite amazing."

Posted by Jeffrey Schwartz on 11/03/2017

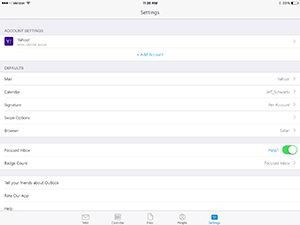

A version of the Microsoft 365 service for small- and mid-size organizations with up to 300 users is now generally available. Microsoft 365 Business, released today, is the last of four versions of the new service announced in July that brings together Office 365, the Enterprise Mobility + Security (EMS) device configuration and management service and Windows 10 upgrades and licenses.

As part of the new release, Microsoft is introducing three new tools for business users called Connections, Listings and Invoicing, on top of previously announced apps, which include a mileage tracker, customer manager and appointment scheduling tool. The tools are available for customers in the U.S., Canada and the U.K. and are included with the $20 per user, per month subscription.

The company today is also releasing Microsoft StaffHub, its new tool designed to help firstline workers manage their workdays, which is included in Microsoft 365 Business and Office 365 Business Premium subscriptions.

Microsoft released the technical preview for the business version back in August and had said it would become generally available this fall. The company has already released Microsoft 365 Enterprise, Microsoft 365 F1 for firstline workers and a version with two licensing options for educational institutions.

The company is betting that the new Microsoft 365 Business option will appeal to the millions of customers who currently pay $12.50 per user per month for Office 365 Business Premium subscription plans. It'll cost them an additional $7.50 per user per month for the new plan, but they'll gain configuration, management and security services, plus Windows 10 and the new apps.

Those who manage Office 365 Business Premium users will transition to the Microsoft 365 portal, which is effectively the same as the Office 365 portal but adds the features included with Microsoft 365 Business, said Caroline Goles, Microsoft's director of Office for SMBs.

"We designed it so it looks exactly the same, except it just lights up those extra device cards," she said during a prelaunch demo in New York. "If you manage Office 365, it will be familiar but will bring in those new Microsoft 365 capabilities."

Unlike the enterprise version, Microsoft 365 Business includes a scaled-down iteration of EMS suited for smaller organizations. Garner Foods, a specialty provider of sauces based in Winston-Salem, N.C., is among the first to test and deploy the new service. Already an Office 365 E3 customer, Garner Foods was looking to migrate its Active Directory servers to Azure Active Directory, said COO Heyward Garner, who was present at the New York demo.

"They were able to downgrade most of their Office 365 users and gain the security and management capabilities offered with Microsoft 365," said Chris Oakman, president and CEO of Solace IT Solutions, the partner who recommended and deployed the service for Garner Foods. "It's a tremendous opportunity for small business."

Also now available are three previously announced tools: Microsoft Bookings for scheduling and managing appointments, MileIQ for tracking mileage and Outlook Customer Manager for managing contacts. In addition, Microsoft is adding three new tools: Connections, designed for e-mail marketing campaigns; Listings, for managing brand engagement on Facebook, Google, Bing and Yelp; and Invoicing, for billing customers, with integration into QuickBooks. These apps can all be managed in the Microsoft Business Center, and the company is considering additional tools for future release.

Posted by Jeffrey Schwartz on 11/01/2017

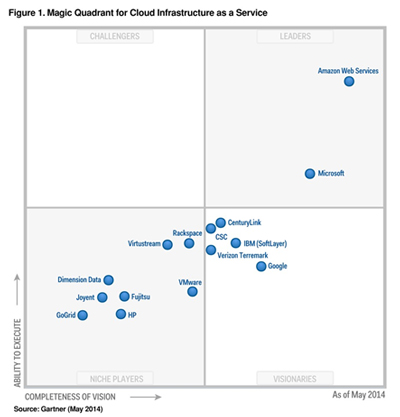

In a move to make Google's public cloud services more appealing to enterprise customers, the company and Cisco are partnering to deliver hybrid cloud infrastructure that's compatible with the Google Cloud Platform (GCP). The pact, announced today, will enable workloads to run on both Cisco UCS hyper-converged infrastructure hardware and GCP.

The partnership is a major boost for Google as it looks to take on Amazon Web Services (AWS) and Microsoft, which both offer hybrid cloud solutions. Both have a wide lead on Google with the world's largest cloud footprints and infrastructure. Microsoft is hoping to maintain its lead over Google and gain ground on AWS with its new hybrid cloud solution, Azure Stack, which is now just starting to ship from Dell EMC, Hewlett Packard Enterprise and Lenovo. Cisco is also taking orders for its Azure Stack solution, which is set for imminent release.

By offering infrastructure compatible with GCP, Cisco is widening its cloud reach, while Google gains a significant foothold in enterprises. "Applications in the cloud can take advantage of on-premises capabilities (including existing IT systems)," said Kip Compton, VP of Cisco's Cloud Platform and Solutions Group, in a blog post announcing the pact. "And applications on-premises can take advantage of new cloud capabilities."

Cisco HyperFlex HX-Series systems will enable hybrid workloads to run on-premises and in GCP. The hybrid GCP offering is based on Kubernetes, the open source container orchestration and management platform that will provide lifecycle management, support for hybrid workloads and policy management. Kubernetes now integrates with Cisco's software-defined networking architecture, just upgraded earlier this month with the third release of Cisco's Application Centric Infrastructure (ACI).

The new ACI 3.0 includes improved network automation, security and multi-cloud support. Now that ACI offers Kubernetes integration, customers can deploy workloads as microservices in containers. Cisco said the Kubernetes integration also provides unified networking constructs for containers, virtual machines and bare-metal hardware and lets customers set ACI network policy.

Cisco's hybrid cloud offering also will include Istio, the open source service management tooling that connects, manages and secures microservices. According to a description on its Web site, Istio manages traffic flows between microservices, enforces access policies and aggregates telemetry data without requiring changes to the code within the microservices. Running on Kubernetes, Istio also provides automated HTTP, gRPC, WebSocket and TCP load balancing and various authentication and security controls.

The Cisco offering will also include the Apigee API management tool. Apigee, a leading provider of API management software, was acquired by Google last year. It enables legacy apps to run on-premises and connect to the cloud via the APIs.

"We're working together to deliver a consistent Kubernetes environment for both on-premises Cisco Private Cloud Infrastructure and Google's managed Kubernetes service, Google Container Engine," said Nan Boden, Google's head of global technology partners for GCP, in a blog post published by Cisco. "This way, you can write once, deploy anywhere and avoid cloud lock-in, with your choice of management, software, hypervisor and operating system." Boden added that Google will provide a cloud service broker to connect on-premises workloads to GCP services for machine learning, scalable databases and data warehousing.

The partnership with Cisco promises to make GCP a stronger candidate for enterprises considering moving workloads to the Google public cloud, though it's not Google's first such deal. Among some notable partnerships, Google earlier this year announced Nutanix will run a GCP-compatible implementation of Kubernetes on its hyper-converged systems. And at VMworld, Google, Pivotal and VMware launched Pivotal Container Service (PKS) to provide compatibility between Kubernetes running on vSphere and Google Container Engine. However, that VMworld announcement was overshadowed by VMware's biggest news of its annual conference, the plan to offer its VMware Cloud on AWS service.

While Cisco is offering customers an alternative to Azure Stack with its new Google partnership, Microsoft has made significant investments in support for Kubernetes orchestration. In addition to its Azure Container Service (ACS) with support for Kubernetes, Microsoft yesterday launched the preview of its managed Kubernetes service, called AKS.

Testing of the GCP-compatible Cisco offering will begin early next year, with release planned for the latter part of 2018.

Posted by Jeffrey Schwartz on 10/25/2017

If existing high-performance computing (HPC) isn't enough for you, Microsoft is bringing the supercomputing capabilities provided by Cray to its Azure public cloud.

This is a noteworthy deal because Cray has been regarded for decades as the leading provider of supercomputing systems. Cray's supercomputers run some of the most complex high-performance and scientific workloads in existence. The two companies today announced what Cray described as an "exclusive strategic alliance" aimed at bringing supercomputing capability to enterprises.

While the term "exclusive" is nebulous these days, this pact calls for the two companies to work together with customers to offer dedicated Cray supercomputers running in Azure datacenters for such workloads as AI, analytics and complex modeling and simulation "at unprecedented scale, seamlessly connected to the Azure cloud," according to Cray's announcement.

The deal marks the first time Cray is bringing its supercomputers to a cloud service provider, according to a statement by Peter Ungaro, the company's president and CEO. The two companies will offer Cray XC and Cray CS supercomputers, along with Cray's ClusterStor storage systems, provisioned for dedicated customers in Azure datacenters and offered as Azure services.

"Dedicated Cray supercomputers in Azure not only give customers all of the breadth of features and services from the leader in enterprise cloud, but also the advantages of running a wide array of workloads on a true supercomputer, the ability to scale applications to unprecedented levels, and the performance and capabilities previously only found in the largest on-premise supercomputing centers," according to Ungaro.

Cray's systems will integrate with Azure Virtual Machines, Azure Data Lake storage, Microsoft's AI platform and Azure Machine Learning (ML) services. For hybrid HPC management, customers can use the Cray Urika-XC analytics software suite and CycleCloud, the orchestration service Microsoft now offers following its August acquisition of Cycle Computing.

The fact that Microsoft would want to bring Cray into the Azure equation is not surprising given CEO Satya Nadella's focus on bringing supercomputer performance to the company's cloud, a priority he demonstrated last year at the Ignite conference in Atlanta. In last year's Ignite keynote, Nadella revealed some of the supercomputing functions Microsoft had quietly built into Azure including an extensive investment in field programmable gate arrays (FPGAs) throughout the Azure network backbone, bringing 25Gbps backbone connectivity, up from 10Gbps, combined with GPU nodes.

Nadella stepped it up a notch in his opening keynote at the recent Ignite gathering held last month in Orlando, where he revealed an extensive research and development effort aimed at one day offering quantum computing. Offering this form of high-performance computing requires breakthroughs in physics, mathematics and software programming that are still many years away. While IBM and others have long showcased some of their R&D efforts, Microsoft revealed that it, too, has been working on quantum computing for many years.

Nadella and his team of researchers said Microsoft will release some free tools by year's end that will let individuals experiment with quantum computing concepts and programming models. The pact with Cray will bring supercomputing processing capabilities to Azure that will solve the most complex challenges in climate modeling, precision medicine, energy, manufacturing and other scientific research, according to Jason Zander, Microsoft's corporate VP for Azure.

"Microsoft and Cray are working together to bring customers the right combination of extreme performance, scalability, and elasticity," Zander stated in a blog post. While it's not immediately clear to what extent, if any, Cray will be working with Microsoft on quantum computing, it's a safe bet that they will do so at some level, if not now, then in the future.

Posted by Jeffrey Schwartz on 10/23/2017

Microsoft has given Azure Stack the green light to run on systems powered by Intel's next-generation Xeon Scalable Processors, code-named "Purley." By validating Azure Stack for Intel's new Purley CPUs, Microsoft lets enterprises and service providers run its cloud operating system at much greater scale, and with more room for expansion, than on systems based on the current Intel Xeon E5 v4 family, code-named "Broadwell."

The first crop of Azure Stack appliances, which include those that customers have used over the past year with the technical previews, are based on the older Broadwell platform. Some customers may prefer them for various reasons, notably because gear with the latest processors costs more. Organizations may be fine with older processors, especially for conducting pilots. But for those looking to deploy Azure Stack, the newer generation might be the way to go.

Azure Stack appliances equipped with the new Purley processors offer improved I/O, support up to 48 cores per CPU (compared with 28) and provide 50 percent more memory bandwidth, with support for up to 1.5TB of memory, according to a blog post by Vijay Tewari, Microsoft's principal group program manager for Azure Stack.

Intel and Microsoft have worked to tune Azure Stack with the new CPUs for over six months, according to Lisa Davis, Intel's VP of datacenter, enterprise and government and general manager of IT. In addition to the improved memory bandwidth and increased number of cores, the new processors will offer more than 16 percent greater performance and 14 percent higher virtual machine capacity, compared with the current processors, Davis said in a blog post.

The validation of the new CPUs comes three weeks after Microsoft announced the official availability of Azure Stack from Dell EMC, Hewlett Packard Enterprise and Lenovo, enabling customers and service providers to replicate the Azure cloud in their respective datacenters or hosting facilities. Cisco, Huawei and Wortmann/Terra are also readying Azure Stack appliances for imminent release.

The first units available are based on the older Broadwell processor. Tewari noted that some of the vendors will continue to offer them over the next year. "For customers who want early as possible adoption, Broadwell is a good fit because that's what's going to be off the truck first," Aaron Spurlock, senior product manager at HPE, said during a meeting at the company's booth at Ignite. "For customers who want the longest possible lifecycle on a single platform, [Purley] might be a better fit. But in terms of the overall user experience it's going to be greater [on the new CPUs] 90 percent of the time."

Paul Galjan, Dell EMC's senior director of product management for hybrid cloud solutions, said any organization that wants the flexibility to scale in the future will find the newer processors based on the company's new PowerEdge 14 hyper-converged server architecture a better long-term bet. Systems based on the PowerEdge 14 will offer a 153 percent improvement in capacity, Galjan said in an interview this week.

"It is purely remarkable the amount of density we have been able to achieve with the 14g offering," Galjan said. One key limitation of systems based on the Broadwell platform is that they can't add nodes to an existing cluster, a capability Microsoft plans to deliver in 2018, but only for systems with the new Intel CPUs. For that reason, Galjan said, most customers have held off on ordering Azure Stack systems until the new Intel processors arrive.

"That's one of the reasons we are being so aggressive about it," he said. "Azure Stack is a future looking cloud platform and customers are looking for a future looking hyper-converged platform." In a blog post, Galjan compared Azure Stack running on the company's PowerEdge 13 systems with the new PowerEdge 14 systems.

Lenovo this week also officially announced support for the new Xeon Scalable Processors with its ThinkAgile SX for Azure Stack appliance, and it will be the first to support Intel's Select Solutions program early next year, which includes additional testing designed to ensure verified, workload-optimized and tuned systems.

Select Solutions, a program announced by Intel earlier this year, is a system evaluation and testing process designed to simplify system configuration selection for customers, according to Intel's Davis. It targets high-performance applications that use Azure Stack with an all-flash storage architecture.

Posted by Jeffrey Schwartz on 10/20/2017

Looking to distance itself further from the highest-performing MacBook Pros, Microsoft is unleashing its most powerful Surface PCs to date with the launch of the new Surface Book 2. Microsoft unveiled the Surface Book 2 today as an added surprise timed with the release of the Windows 10 Fall Creators Update, which brings support for new mixed reality capabilities and improvements for IT pros, including enhancements to the console.

The new Surface Book 2 will be available Nov. 16, initially from the Microsoft Store, with either 13.5- or 15-inch displays and will be up to five times more powerful than the original Surface Book, which Microsoft launched two years ago. The 13.5-inch model will start at $1,499 with the larger unit starting at $2,499, available with either dual- or quad-core Intel 8th Generation Core processors and the current NVIDIA GeForce GTX GPUs.

Engineered to process 4.3 trillion math operations per second, the newest Surface Books are aimed at those who run compute-intensive workloads such as video rendering, CAD, scientific calculations and code compilation. The 15-inch model also targets gamers and will include a wireless Xbox Controller. It has a 95-watt power supply and can render 1080p at 60 frames per second.

"This thing is 'Beauty and the Beast,'" said Panos Panay, corporate VP for devices overseeing Microsoft's entire hardware lineup, during a briefing with Windows Insiders and media, where we got to spend some time with the new units. "There's no computer, no laptop, no product that's ever pushed this much computational power in this mobile form factor. It's quite extraordinary, the performance."

Panay emphasized that the Surface Book 2 aims to bring out the best in the new Windows 10 Fall Creators Update, Office 365, digital inking and mixed reality. Like its predecessor, the Surface Book 2 screen detaches so it can be used as a tablet, or users can fold it back into studio mode. It sports what Panay said is the thinnest LCD available, with a 10-point multitouch display. It also supports a newly refined Surface Pen and the company's Surface Dial.

In addition to the fivefold power boost over the original Surface Book of two years ago (the 13.5-inch model is four times more powerful), Panay said the newest version is twice as powerful as the latest MacBook Pro and offers 70 percent more battery life in video playback mode. Microsoft claims 17 hours of battery life (5 hours when used as a tablet), though laptops from Microsoft or anyone else rarely reach such maximums. Nevertheless, it is designed to last all day in most conditions.

The 13.5-inch model weighs 3.38 pounds, with the 15-inch unit weighing 4.2 pounds with the keyboard attached. "There has never been this much computational power in a mobile form factor this light," Panay said in a blog post announcing the Surface Book 2. "You can show off your meticulously designed PowerPoint deck or complex Pivot tables in Excel with Surface Book 2's stunningly vibrant and crisp PixelSense Display with multi-touch, Surface Pen, and Surface Dial on-screen support. You won't believe how much the colors and 3D images will pop in PowerPoint on these machines."

The 15-inch model offers just shy of 7 million pixels at 260 DPI, which Microsoft claims is 45 percent more than the MacBook Pro. The 13.5-inch model offers 6 million pixels (3000x2000) at 267 DPI.
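Pixel density follows from a display's resolution and diagonal size: divide the diagonal length in pixels by the diagonal length in inches. The sketch below checks the figures above, treating what the article calls DPI as pixels per inch. The 13.5-inch resolution (3000x2000) comes from the article; the 15-inch model's 3240x2160 resolution is not stated here and is taken from Microsoft's published specifications, so treat it as an assumption. The function and dictionary names are illustrative.

```python
import math


def pixel_density(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Return pixels per inch measured along the display diagonal."""
    diagonal_px = math.hypot(width_px, height_px)  # sqrt(w^2 + h^2)
    return diagonal_px / diagonal_in


# (width, height, diagonal in inches). The 15-inch resolution is an
# assumption taken from Microsoft's spec sheet, not from the article.
MODELS = {
    "13.5-inch": (3000, 2000, 13.5),
    "15-inch": (3240, 2160, 15.0),
}

if __name__ == "__main__":
    for name, (w, h, diag) in MODELS.items():
        print(f"{name}: {w * h:,} pixels, {pixel_density(w, h, diag):.0f} DPI")
```

Run as a script, it reports 6,000,000 pixels at 267 DPI for the 13.5-inch model and 6,998,400 pixels at 260 DPI for the 15-inch model, consistent with Microsoft's figures.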

Across the various configurations, the units are available with a choice of Intel 7th or 8th Generation Core i5 or i7 processors, in dual- or quad-core versions. Depending on the model, customers can choose 8GB or 16GB of RAM and 256GB, 512GB or 1TB of SSD storage.

Posted by Jeffrey Schwartz on 10/17/2017

Nearly a year after rolling out its Azure Functions serverless compute option for running event-driven, modern PaaS apps and services, Microsoft has given it a cross-platform boost. The company announced it had ported the Azure Functions service to the new .NET Core 2.0 framework during the Ignite conference in Orlando, Fla., late last month. On the heels of that release, Microsoft made available a public preview of its Java runtime for Azure Functions during last week's JavaOne conference in San Francisco.

Azure Functions provides elastic compute triggered by events from any Azure or third-party service, as well as from on-premises infrastructure, according to Microsoft. With the port to .NET Core 2.0, both the Azure Functions Core Tools and the runtime are now cross-platform, Microsoft announced at Ignite, though the company acknowledged in a Sept. 25 post that there are some known issues and functional gaps.

Java support in Azure Functions has been a top request, according to the announcement posted last week by Nir Mashkowski, partner director for the Azure App Service. "The new Java runtime will share all the differentiated features provided by Azure Functions, such as the wide range of triggering options and data bindings, serverless execution model with auto-scale, as well as pay-per-execution pricing," Mashkowski noted.

The preview includes a new Maven plug-in for building and deploying Azure Functions from Maven, he noted. "The new Azure Functions Core Tools will support you to run and debug your Java Functions code locally on any platform," he said.

Until now, Azure Functions supported C#, F#, JavaScript (Node.js), PowerShell, PHP, Python, CMD, BAT and Bash. In addition, the new Azure Functions is open source and available on GitHub. Azure Functions integrates with SaaS applications and a list of interfaces and supports authentication via standard OAuth providers including Azure Active Directory, a Microsoft account, Facebook, Google and Twitter.

Microsoft also posted some five-minute tutorials that demonstrate how to build and deploy Java app services and serverless functions. Microsoft also held two sessions at JavaOne that describe how to build and deploy serverless Java apps in Azure that are now available for replay.

Posted by Jeffrey Schwartz on 10/13/2017

If anyone still hoped Microsoft would release new phones based on Windows 10 Mobile, or add new features to that version of the OS this year, HP and Microsoft both appear to have dashed that hope.

The chatter about the all-but-forgotten Windows Phone emerged a week ago when The Register reported that HP will no longer sell or support its Elite x3 Windows-based phone after the end of 2019 and will offer only whatever inventory is still available. The report quoted Nick Lazaridis, who heads HP's EMEA business, speaking at the Canalys Channels Forum. The Register noted that HP had insisted as recently as August that it was committed to the platform, and specifically to the Windows Continuum feature; Lazaridis attributed the change to Microsoft's shift in strategy with Windows Phone.

HP's Elite x3 3-in-1 device received positive reviews, including one published by Redmond magazine earlier this year. However, Samsung's release of the Galaxy S8 phone and DeX Station dock, which let users dock their phones and run Windows-based remote desktops and virtual apps, has proven a strong option for those who want Windows on a smartphone.

Asked to confirm HP's plan, a company spokeswoman responded: "We will continue to fully support HP Elite x3 customers and partners through 2019 and evaluate our plans with Windows Mobile as Microsoft shares additional roadmap details," according to the e-mailed statement. "Sales of the HP Elite x3 continue and will be limited to inventories in country. HP remains committed to investing in mobility solutions and have some exciting offerings coming in 2018."

In the wake of the HP report, Joe Belfiore, corporate VP in Microsoft's Operating Systems Group, posted a series of Twitter responses on Sunday night about Windows Phone support. One question asked whether it was time to give up on Windows Mobile.

"Depends who you are," he tweeted. "Many companies still deploy to their employees and we will support them!" But, he continued: "As an individual end-user, I switched platforms for the app/hw diversity. We will support those users too! Choose what's best 4 u."

Responding to a tweet asking directly about the future of Windows Phone, Belfiore stated what until now had largely been presumed: "Of course we'll continue to support the platform. bug fixes, security updates, etc. But building new features/hw aren't the focus," he said, with apparent regret judging by his emoji.

If that left ambiguity, a response from a Microsoft spokesperson shouldn't. "We get that a lot of people who have a Windows 10 device may also have an iPhone or Android phone, and we want to give them the most seamless experience possible, no matter what device they're carrying," according to the spokeswoman. "In the Fall Creators Update, we're focused on the mobility of experiences and bringing the benefits of Windows to life across devices to enable our customers to create, play and get more done. We will continue to support Lumia phones such as the Lumia 650, Lumia 950 and Lumia 950 XL, as well as devices from our OEM partners."

Posted by Jeffrey Schwartz on 10/11/2017

Amazon Web Services yesterday announced that it is the "preferred cloud provider" for General Electric, one of the world's largest industrial conglomerates. However, what was not stated in the announcement is that it only pertains to GE's internal IT apps. GE is moving forward with its plans announced last year to run its Predix industrial exchange on Microsoft Azure.

Responding to my query about the nature of yesterday's announcement, issued only by AWS, a GE spokeswoman clarified the company's plans. "The AWS announcement refers to our internal IT applications only," she stated.

The plan to run GE Predix, the giant industrial cloud platform aimed at building and operating Internet of Things (IoT) capabilities, on Azure was announced at Microsoft's Worldwide Partner Conference (WPC) in July of 2016. Predix is a global exchange and hub designed to gather massive quantities of data from large numbers of sensors and endpoints and apply machine learning and predictive analytics to the data to optimize operations and apply automation. The company uses it for its own customers and partners.

Jeffrey Immelt, GE's recently retired chairman and CEO, joined Microsoft CEO Satya Nadella on stage during the keynote session kicking off last year's WPC, where he described GE's plans to integrate Predix with the Azure IoT Suite and Cortana Intelligence Suite.

"For our Predix platform, our partnership with Microsoft continues as planned," according to the GE spokeswoman. "By bringing Predix to Azure, we are helping our mutual customers connect their IT and OT [operational technology] systems. We have also integrated with Microsoft tools such as Power BI (which we demonstrated at our Minds + Machines event last year) and have a roadmap for further technical collaboration and integration with additional Microsoft services."

GE will have more to say about that at this year's annual Minds + Machines conference, scheduled to take place at the end of this month in San Francisco.

Posted by Jeffrey Schwartz on 10/06/2017

Microsoft and NetApp are working to deliver a native Network File System (NFS) service for Azure built on the storage provider's technology. The new Enterprise NFS Service is based on NetApp's flagship Data ONTAP storage operating and management platform and will be available for public preview in early 2018.

The deal is the latest of many partnerships the two companies have inked over the years to let enterprises move their storage workloads to Microsoft Azure. The new Azure Enterprise NFS Service was announced at NetApp Insight, the company's annual customer and partner conference.

The announcement was overshadowed by the fact that the conference is taking place at the Mandalay Bay hotel in Las Vegas, the site of Sunday night's deadly mass shooting. Preconference sessions were cancelled on Monday because the hotel was still on lockdown, but the conference resumed yesterday and was marked by a moment of silence.

Administrators will be able to access the new service from the Azure console, which NetApp said will appeal to cloud architects and storage managers seeking to bring NFS services natively to Azure for workloads such as database-oriented analytics, e-mail and disaster recovery. In a statement, Anthony Lye, senior VP of NetApp's cloud business unit, said the solution will use NFS to provide "visibility and control across Azure, on-premises and hosted NFS workloads."

The offering will enable provisioning and automation of NFS services at scale using RESTful APIs, with added data protection through on-demand, automated snapshots. The service will support both NFS v3 and v4 workloads running in Azure as well as hybrid deployments, according to NetApp. It will also include integration with various Azure services, including SQL Server and SAP HANA for Azure.

NetApp also said the pact calls for integrating Data ONTAP software with Azure Stack, which CEO George Kurian said in a recorded video presentation will speed the migration of enterprise applications to Azure Stack and Azure.

Kurian also said the two companies are integrating NetApp's recently launched all-flash-based FabricPool technology, which he said "manages cold data by tiering in a cost-effective manner to the cloud and integration of fabric pools together with Azure Blob Storage." He added that it "gives customers a really capable hybrid cloud data service and allows them to optimize their own datacenters."

NetApp's Cloud Control for Office 365 now supports Azure Storage and will soon be available in the EMEA and APAC regions, allowing local instances of Exchange, OneDrive and SharePoint. The two companies are working to provide more extensive integration with Cloud Control for Office 365 and the NetApp AltaVault archiving platform, enabling customers to choose between hot, cool and cold storage options in Azure for backup and disaster recovery.

Posted by Jeffrey Schwartz on 10/04/2017

As the world awoke today to news of last night's horrific mass shooting from a 32nd-floor room of the Mandalay Bay hotel in Las Vegas, which killed at least 58 people and injured more than 550, many IT pros had just arrived or were on their way to NetApp's Insight conference.

The NetApp conference was set to begin today with preconference technical sessions, but as the shooting unfolded, the hotel was evacuated and all of today's activities were cancelled. Later in the day, NetApp said it had decided to resume the conference tomorrow for those already in attendance, with President and CEO George Kurian kicking off the keynote session.

"I am shocked and saddened by the tragic event that occurred in Las Vegas at the Mandalay Bay last night," Kurian said in a statement. "I am sure you all share these sentiments. My heart and the hearts of thousands of NetApp employees break for the loved ones of those affected by the terrible events. NetApp will stand strong in the face of senseless violence and continue with the conference for those who want to attend."

Also kicking off this week is the Continuum Conference, an event for managed services providers, taking place further down the Las Vegas Strip at the Cosmopolitan. It too is set to go on, as reported by SMBNation's Harry Brelsford.

Opinions were mixed on whether NetApp should resume the conference while the hotel remains an investigation scene. Many attendees were looking to help and donate blood, while others, as of last evening, were still unable to return to their rooms.

This tragedy, which has shocked the world, is also unnerving to many IT professionals who find themselves in Las Vegas several times a year for large conferences. Las Vegas hosts hundreds of conferences of all sizes every year, and it is the site of many major tech gatherings. Many of you, myself included, were at the Mandalay Bay just over a month ago for VMworld 2017. Other tech vendors and conference organizers, including Veritas, Okta, Cisco, Hewlett Packard Enterprise, Dell EMC and VMware, among others, have also held major conferences in Las Vegas.

TechMentor, produced by Redmond magazine parent 1105 Media, was last held in Las Vegas in early 2016. The upcoming TechMentor conference is scheduled to take place in Orlando, Fla., Nov. 12-17 as part of the Live! 360 cluster of conferences, and it will return to the Microsoft campus in Redmond next summer, as it did this year. After years of steering its conferences away from Las Vegas, Microsoft recently announced that it will hold next year's Inspire partner conference there.

"My heart and thoughts are with all the people, families and responders in Las Vegas impacted by this horrific and senseless violence," Microsoft CEO Satya Nadella tweeted. VMware CEO Pat Gelsinger pointed to a GoFundMe page for victims set up by Steve Sisolak, chair of the Clark County Commission in Las Vegas. "Our thoughts and prayers this morning are with the victims and families of Las Vegas shooting," Gelsinger tweeted.

NetApp is one of the largest providers of enterprise storage hardware and software with $5.52 billion in revenues for its 2017 fiscal year, which ended April 28. Many storage experts attending Microsoft's Ignite conference, which wrapped up on Friday, had signaled they were headed to Las Vegas for the NetApp event. Software and cloud providers listed as major sponsors of the NetApp technical event include Amazon Web Services, Cisco, Fujitsu, Google Cloud, IBM Cloud, Intel, Microsoft, Rackspace, Red Hat, VMware and many others, according to the company's sponsorship roster.

The shooter allegedly targeted an outdoor festival, firing round after round into the crowd for more than 10 minutes. It is believed to be the deadliest shooting by an individual in U.S. history, in terms of both deaths and casualties. People have been asked to donate blood if they can, and according to reports, attendees at the NetApp conference and other events taking place are doing so and helping out however they can.

Unfortunately, what has transpired in Las Vegas could happen in many places in the U.S. and abroad. Hotels may now have to consider scanning the bags of guests just as airlines now do, and we need to remain aware of our surroundings, which we've become conditioned to do since the attacks of Sept. 11, 2001.

Posted by Jeffrey Schwartz on 10/02/2017

Microsoft created some interesting buzz at this year's Ignite conference with the news that it is putting Bing at the center of its artificial intelligence and enterprise search efforts.

The new Bing for Business will be a key deliverable from the new AI Research Group Microsoft formed a year ago this week, led by Harry Shum, which brought together Microsoft Research with the company's Bing, Cortana, Ambient Computing and Robotics and Information Platform groups.

Bing for Business brings the Microsoft Graph to the browser, allowing employees to conduct personalized and contextual search incorporating interfaces from Azure Active Directory, Delve, Office 365 and SharePoint. Li-Chen Miller, partner group program manager for AI and Research at Microsoft, demonstrated Bing for Business in the opening keynote session at Ignite, showing how to discover her organization's conference budget.

Using machine reading and deep learning models, Bing for Business went through 5,200 IT and HR documents. "It didn't just do a keyword match. It actually understood the meaning of what I was asking, and it actually found the right answer, the pertinent information for a specific paragraph in a specific document and answered my question right there," Miller said. "The good news is Bing for Business is built on Bing and with logic matching, it could actually tell the intent of what I was trying to do."

Miller added that organizations deploying Bing for Business can view aggregated, anonymized usage data. "You can see what employees are searching for, clicking on and what they're asking, so you can truly customize the experience," she said. It can also be used with Cortana.

The idea behind Bing for Business is it takes multiple approaches to acquiring information within an enterprise -- such as from SharePoint, a file server and global address book -- and applies all the Active Directory organizational contexts, as well as Web search queries, to render intelligent results, said Dave Forstrom, Microsoft's director of conversational AI, during an interview at Ignite.

"If you're in an enterprise that has set this up, now you can actually work that into your tenant in Office 365 and then it's set up through your Active Directory for authentication in terms of what you have access to," Forstrom said.

Quite a few customers are using it now in private beta, according to Forstrom. The plan is to eventually deliver it as a service within Office 365.

Seth Patton, general manager of Microsoft's Office Productivity Group, said in a separate interview that the Microsoft Graph brings together the search capabilities into a common interface. It also includes Microsoft's Bot Framework.

"Being able to have consistent results but contextualized in the experience that you're in when you conduct the search is just super powerful," Patton said. "We've never before been able to do that based on the relevance and the contextual pieces that the Graph gives."

Posted by Jeffrey Schwartz on 09/28/2017

Rackspace will secure Hyper-V workloads via its managed security service offering and has announced that its Microsoft Azure offering is now PCI certified. The company, one of the world's largest managed services providers, is talking up the new Hyper-V protection capabilities at this week's Microsoft Ignite conference, taking place in Orlando, Fla.

The Hyper-V protection extends across the company's Rackspace Managed Security (RMS) service, which provides overall threat protection using analytics and remediation across the company's managed services offering. A new Rackspace Cloud Replication for Hyper-V service will also be offered under the umbrella of Rackspace's portfolio of Microsoft services. Based on Microsoft's Azure Site Recovery, the managed offering provides replication, storage and failover of Hyper-V virtual machines.

While Rackspace said the new offering lets organizations use the managed service to target Microsoft Azure rather than on-premises infrastructure, it also provides an alternative to moving those Hyper-V workloads into an infrastructure-as-a-service scenario, said Jeff DeVerter, CTO of Microsoft technologies at Rackspace, during an interview at Ignite.

"As excited as the world is about the cloud, not every workload is ready to be made into a modern application, and so customers can actually have a single tenant using Hyper-V and solve the problem of getting out of the datacenter, which most companies want to do today," DeVerter said. "But they don't have to take on the added expense of running an IaaS inside of Azure, and an IaaS workload tends to cost more in Azure than it does in a tenant."

DeVerter said running them in Hyper-V solves the problem of moving those workloads out of on-premises datacenters. If transforming the applications is the ultimate goal, Rackspace can work with customers over time to start extending them out into Azure. Rackspace already offered protection for VMware-based VMs.

Rackspace also used Ignite to announce that its managed Microsoft Azure service is now PCI certified for customers running workloads that carry payment data. Microsoft Azure is already PCI compliant, but because Rackspace manages the workloads, it too had to obtain the certification.

Posted by Jeffrey Schwartz on 09/27/2017

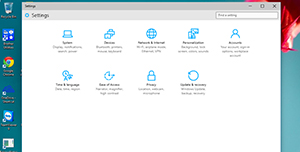

The Azure management portal isn't just for Microsoft's public cloud. Microsoft kicked off its annual Ignite conference in Orlando, Fla., this week by announcing that Azure Stack appliances from Dell EMC, Hewlett Packard Enterprise and Lenovo are now available, along with news that it is bringing PowerShell, change tracking, update management, log analytics and simplified disaster recovery to the Azure portal.

Azure Stack appliances are the cornerstone of Microsoft's long-stated hybrid cloud strategy of bringing its Azure cloud on premises with the same portal management experience and the ability to build and provision instances and applications using common APIs. "Azure Stack also enables you to begin modernizing your on-premises applications even before you move into the public cloud," said Scott Guthrie, Microsoft's executive VP for cloud and infrastructure, speaking in a keynote session at Ignite today.

"The command and control [are] identical," said Sid Nag, Gartner's research director for cloud services, during an interview at Ignite following Guthrie's session. "If I have a craft, I don't have to learn new skills. I can transition very smoothly without a learning curve."

However, like any major new piece of infrastructure, despite significant interest, the pace and number of deployments remain to be seen, according to Nag. "Clients have been looking for an onramp to the public cloud, but they are not ready to commit," Nag said.

Microsoft maintains that enterprises should embrace the hybrid path it has championed for some time, but the company is also apparently giving them a nudge by bringing the Azure portal to their world, whether or not they use the public cloud. These new capabilities bring the portal even to those not using Azure Stack. During the session, Corey Sanders, Microsoft's director of Azure compute, demonstrated the new features coming to the Azure portal.

PowerShell Built into Azure Portal: PowerShell is now built into the Azure portal, aimed at simplifying the creation of virtual machines. "It's browser based and can run on any OS or even from an iPhone," Sanders said. "If you are familiar with PowerShell, it used to take many, many, commands to get this going. Now it takes just one parameter," he said. "With that, I put in my user name and password and it creates a virtual machine, so you don't have to worry about the other configurations unless you want to." Sanders said IT pros can use PowerShell's classic -WhatIf parameter to validate what a given command will do.

Change Tracking: For a running virtual machine, this feature tracks every change on the VM, including every file, event and registry change. It can scan a single machine or an entire environment, letting the IT pro discover all changes and investigate anything that requires attention.
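One way to picture change tracking is as a diff between two inventories of each machine, keyed by file path or registry key and holding a content hash. The sketch below assumes that model; `diff_snapshots`, `diff_environment` and the sample paths are hypothetical and do not describe how the Azure feature is actually implemented.

```python
from typing import Dict, List

Snapshot = Dict[str, str]  # item (file path or registry key) -> content hash


def diff_snapshots(before: Snapshot, after: Snapshot) -> Dict[str, List[str]]:
    """Report items added, removed or modified between two snapshots of one machine."""
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "modified": sorted(k for k in before.keys() & after.keys() if before[k] != after[k]),
    }


def diff_environment(
    before: Dict[str, Snapshot], after: Dict[str, Snapshot]
) -> Dict[str, Dict[str, List[str]]]:
    """Apply diff_snapshots to every machine seen in either inventory."""
    machines = sorted(before.keys() | after.keys())
    return {m: diff_snapshots(before.get(m, {}), after.get(m, {})) for m in machines}


# Hypothetical inventories: a Windows VM and a Linux VM.
before = {
    "vm1": {"C:/app/config.ini": "a1", "HKLM/Software/App/Version": "1.0"},
    "vm2": {"/etc/ssh/sshd_config": "s1"},
}
after = {
    "vm1": {"C:/app/config.ini": "a2", "HKLM/Software/App/Version": "1.0", "C:/app/plugin.dll": "p1"},
    "vm2": {},
}
changes = diff_environment(before, after)
print(changes["vm1"])  # {'added': ['C:/app/plugin.dll'], 'removed': [], 'modified': ['C:/app/config.ini']}
```

Scanning one machine or a whole environment is the same operation here; the environment view simply repeats the per-machine diff.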

Log Analytics: Administrators can now call on a set of prebuilt operations and statistics to discover how many threats exist. It looks beyond the built-in antimalware, letting the administrator go into the analytics designer to create queries that, Sanders said, are simple to write. "They are very SQL-like and allow me to do very custom things," he said. For example, a query can retrieve the processor time of all the virtual machines in a specific subscription over the last seven days, group the results by computer and display a time chart showing CPU spikes across those seven days.
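The example query Sanders described filters processor-time samples to the last seven days, groups them by computer and charts them over time. The sketch below mimics that logic on in-memory data, roughly what a Log Analytics query along the lines of `Perf | where TimeGenerated > ago(7d) | where CounterName == "% Processor Time" | summarize avg(CounterValue) by Computer, bin(TimeGenerated, 1h)` expresses. The sample tuple layout, hourly bins and 90 percent spike threshold are assumptions for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Dict, Iterable, List, Tuple

Sample = Tuple[datetime, str, str, float]  # (timestamp, computer, counter name, value)


def hourly_cpu_by_computer(
    samples: Iterable[Sample], now: datetime, days: int = 7
) -> Dict[str, Dict[datetime, float]]:
    """Average '% Processor Time' per computer per hour over the last `days` days."""
    cutoff = now - timedelta(days=days)
    buckets: Dict[Tuple[str, datetime], List[float]] = defaultdict(list)
    for ts, computer, counter, value in samples:
        if counter == "% Processor Time" and cutoff <= ts <= now:
            hour = ts.replace(minute=0, second=0, microsecond=0)  # bin to the hour
            buckets[(computer, hour)].append(value)
    series: Dict[str, Dict[datetime, float]] = defaultdict(dict)
    for (computer, hour), values in buckets.items():
        series[computer][hour] = sum(values) / len(values)
    return dict(series)


def find_spikes(
    series: Dict[str, Dict[datetime, float]], threshold: float = 90.0
) -> Dict[str, List[datetime]]:
    """Return, per computer, the hours whose average CPU meets the threshold."""
    return {c: sorted(h for h, avg in pts.items() if avg >= threshold) for c, pts in series.items()}


now = datetime(2017, 9, 25, 12, 0)
samples = [
    (datetime(2017, 9, 25, 9, 10), "vm1", "% Processor Time", 95.0),
    (datetime(2017, 9, 25, 9, 40), "vm1", "% Processor Time", 85.0),
    (datetime(2017, 9, 24, 3, 5), "vm2", "% Processor Time", 20.0),
    (datetime(2017, 9, 10, 3, 5), "vm2", "% Processor Time", 99.0),  # older than 7 days, ignored
    (datetime(2017, 9, 25, 9, 15), "vm2", "Available MBytes", 512.0),  # other counter, ignored
]
series = hourly_cpu_by_computer(samples, now)
spikes = find_spikes(series)
print(spikes)
```

Each per-computer series maps hour to average CPU, which is the shape a time chart would plot.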

Update Management: Administrators looking to see which updates or patches have been installed or are awaiting installation can use this new feature in the Azure portal. It displays details of what the updates include and allows the administrator to choose which ones to act on. Sanders emphasized that this works across an entire Azure or on-premises infrastructure of Windows and Linux machines.
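The update view described here boils down to comparing each machine's available updates with what is already installed, then filtering by the classifications an administrator selects. This is a minimal sketch under that assumption; the update IDs, classification names and the `pending_updates` function are hypothetical placeholders, not data or APIs from the Azure feature.

```python
from typing import Dict, List, Set


def pending_updates(
    available: Dict[str, Dict[str, str]],
    installed: Dict[str, Set[str]],
    selected: Set[str],
) -> Dict[str, List[str]]:
    """Per machine, list available updates that are not yet installed and whose
    classification the administrator selected.

    available: machine -> {update id: classification}
    installed: machine -> set of installed update ids
    """
    result = {}
    for machine, updates in available.items():
        done = installed.get(machine, set())
        result[machine] = sorted(
            uid for uid, cls in updates.items() if uid not in done and cls in selected
        )
    return result


# Hypothetical Windows and Linux machines with placeholder update ids.
available = {
    "win-vm1": {"update-A": "Security", "update-B": "Critical", "update-C": "Definition"},
    "linux-vm1": {"openssl-update": "Security", "bash-update": "Other"},
}
installed = {"win-vm1": {"update-A"}}
to_install = pending_updates(available, installed, {"Security", "Critical"})
print(to_install)  # {'win-vm1': ['update-B'], 'linux-vm1': ['openssl-update']}
```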

Disaster Recovery: Noting that planning how to back up infrastructure and ensure a workable recovery plan is complex, Sanders said the site recovery capability in the Azure portal lets administrators pick a target region and then provides a picture of how a failover scenario will actually appear. "The key point here is this isn't just a single machine. You can do this across a set of machines, build a recovery plan across many machines, do the middleware and actually run scripts according to that plan," he said.
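A recovery plan that spans many machines and runs scripts along the way can be sketched as ordered groups: each group's machines fail over, then that group's scripts run, before the next group starts. The `RecoveryGroup` class, `run_failover` function, stand-in `failover` callable and the region name are illustrative assumptions, not the Azure Site Recovery API.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class RecoveryGroup:
    name: str
    machines: List[str]
    post_scripts: List[Callable[[], str]] = field(default_factory=list)


def run_failover(
    groups: List[RecoveryGroup], target_region: str, failover: Callable[[str, str], None]
) -> List[str]:
    """Fail over each group in order, then run that group's scripts; return a log."""
    log = []
    for group in groups:  # e.g. database tier first, then middleware, then web
        for vm in group.machines:
            failover(vm, target_region)
            log.append(f"failover {vm} -> {target_region}")
        for script in group.post_scripts:
            log.append(f"script: {script()}")
    return log


def fake_failover(vm: str, region: str) -> None:
    """Stand-in; a real plan would ask the replication service to fail the VM over."""


plan = [
    RecoveryGroup("database", ["sql01"], [lambda: "update connection strings"]),
    RecoveryGroup("middleware", ["app01", "app02"]),
    RecoveryGroup("web", ["web01"], [lambda: "repoint DNS"]),
]
log = run_failover(plan, "westus2", fake_failover)
for line in log:
    print(line)
```

Ordering the groups explicitly is what lets the middleware come up only after the database it depends on has failed over.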

Microsoft said these features will appear in preview mode today. The company hasn't disclosed a final release date.

Posted by Jeffrey Schwartz on 09/25/2017