In-Depth

First Look: Microsoft's Resilient File System Version 2

The Resilient File System (ReFS) v2 in Windows Server 2016 TP4 still isn't faster than NTFS -- not surprising at this stage of its development, though its block-cloning feature is highly optimized for virtualized workloads.

- By Paul Ferrill

- 02/08/2016

When Microsoft introduced its new on-disk file system with Windows 8 and Windows Server 2012, it wasn't meant to replace NTFS in its initial release. The Resilient File System, or ReFS, still isn't ready to completely take over as the default unless you need a data store meant to support Windows Hyper-V disk images. In that case, Microsoft really does want you to use the next iteration of the file system -- ReFS v2 -- as it offers a number of advantages and advancements with significant performance improvements.

Storage Spaces was also a part of the Windows 8/Windows Server 2012 release and provides multi-disk file resiliency, which is essentially software RAID. Storage Spaces offers three options: simple, mirror and parity. Simple provides no resiliency and is roughly equivalent to RAID 0 (striping without redundancy). Mirror is basically the same as RAID 1 and keeps an exact copy of all data on two or three physical disks. Parity is essentially equivalent to RAID 5 and stripes data across multiple disks along with parity information that can be used to rebuild data after a disk failure. Storage Spaces will work with either NTFS or ReFS as the on-disk format.
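To make the parity idea concrete, here is a minimal Python sketch of single-parity recovery using XOR, the general technique behind RAID 5-style layouts. It is a simplified illustration, not a description of how Storage Spaces actually arranges data on disk; the block contents and names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, column by column."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three hypothetical data blocks that make up one stripe.
data = [b"disk0AAA", b"disk1BBB", b"disk2CCC"]
parity = xor_blocks(data)

# Simulate losing the second disk, then rebuild its block from the survivors.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt == data[1])
```

Because XOR is its own inverse, any one missing block can be recomputed from the remaining blocks plus the parity block, at the cost of one block of capacity per stripe.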

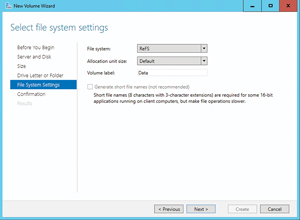

ReFS v1 included a design feature called integrity streams that makes it possible to detect and correct file system corruption on the fly. Unfortunately, this feature didn't play well with Hyper-V virtual disks and could significantly impact performance. The workaround is to disable this feature on both Windows Server 2012 R2 and the technical preview (TP) versions of Windows Server 2016. The process for creating a new ReFS volume hasn't changed (see Figure 1); the file system is selected during the new volume creation process. ReFS v2 will be used automatically if you're running Windows Server 2016 TP4.

Figure 1. The process of creating volumes hasn't changed in version 2.
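The detect-and-correct idea behind integrity streams can be sketched in a few lines of Python. This is a toy model under stated assumptions: CRC32 stands in for whatever checksum ReFS actually uses, correction is assumed to rely on a redundant copy such as a mirror, and `read_with_repair` is a hypothetical helper, not a Windows API.

```python
import zlib


def read_with_repair(copies, expected_checksum):
    """Return the first copy whose checksum matches and heal the others.

    `copies` is a list of bytearrays standing in for mirrored copies.
    """
    good = next((c for c in copies if zlib.crc32(c) == expected_checksum), None)
    if good is None:
        raise IOError("no intact copy available")
    for c in copies:
        if zlib.crc32(c) != expected_checksum:
            c[:] = good  # overwrite the damaged copy in place
    return bytes(good)


original = b"virtual disk block"
checksum = zlib.crc32(original)      # stored separately from the data itself
mirror = [bytearray(original), bytearray(original)]
mirror[0][0] ^= 0xFF                 # simulate silent corruption on one disk

data = read_with_repair(mirror, checksum)
```

The extra checksum work on every read and write is the kind of overhead that can hurt a workload dominated by large virtual disk files.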

Key Features

One of the biggest advantages to an ReFS-formatted volume over NTFS shows up when an error occurs. When a Storage Spaces pool uses ReFS as the underlying on-disk format it can leverage the new features to greatly improve the repair process. While CHKDSK can take a very long time on a large NTFS volume due to the sequential nature of the process, the same operation on an ReFS volume can be accomplished quickly due to the ability to run multiple parallel operations across the entire volume.

Windows Server 2016 TP4 is the first preview release with ReFS v2 available as a disk format option. Microsoft provided a first look at the key technology pieces of ReFS v2 at last fall's Storage Networking Industry Association (SNIA) conference in a paper presented by Principal Development Lead JR Tipton. In that paper he outlined a number of improvements with direct application to virtualization, including fast provisioning and diff merging, improved storage tiering, efficient use of erasure coding, read caching and block cloning, plus a laundry list of file system improvements.

Of particular interest to Hyper-V customers is the block cloning feature, which has been highly optimized for virtualization workloads. It comes into play in a big way with checkpointing, or the taking of a snapshot. ReFS can clone any block of one file into any block of another file as a metadata-only operation, performing a copy-on-write only when needed. Using these techniques drastically improves overall performance and helps reduce redundant data. When you delete a Hyper-V VM checkpoint, the old and new data are merged into a new VHD so that the "parent" information can be discarded. Block cloning makes this operation significantly faster.
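The mechanics of a metadata-only clone with copy-on-write can be illustrated with a small reference-counted model. The `BlockStore` and `File` classes below are hypothetical and exist only for illustration; they say nothing about the real ReFS on-disk structures.

```python
class BlockStore:
    """Toy reference-counted block store (hypothetical, for illustration)."""

    def __init__(self):
        self.blocks = {}     # block id -> bytes
        self.refcount = {}   # block id -> number of file slots pointing at it
        self.next_id = 0

    def allocate(self, payload):
        block_id = self.next_id
        self.next_id += 1
        self.blocks[block_id] = payload
        self.refcount[block_id] = 1
        return block_id


class File:
    def __init__(self, store, chunks):
        self.store = store
        self.block_ids = [store.allocate(c) for c in chunks]

    def clone_block(self, src_index, dest, dest_index):
        """Point dest's slot at this file's block: metadata only, no data copy."""
        store = self.store
        old = dest.block_ids[dest_index]
        new = self.block_ids[src_index]
        store.refcount[new] += 1
        store.refcount[old] -= 1
        if store.refcount[old] == 0:
            del store.blocks[old], store.refcount[old]
        dest.block_ids[dest_index] = new

    def write(self, index, payload):
        """Write one block, allocating a private copy only if it is shared."""
        store = self.store
        block_id = self.block_ids[index]
        if store.refcount[block_id] > 1:
            store.refcount[block_id] -= 1
            self.block_ids[index] = store.allocate(payload)
        else:
            store.blocks[block_id] = payload

    def read(self, index):
        return self.store.blocks[self.block_ids[index]]


store = BlockStore()
parent = File(store, [b"AAAA", b"BBBB"])
child = File(store, [b"----", b"----"])

parent.clone_block(0, child, 1)                      # no payload is copied
shared = child.block_ids[1] == parent.block_ids[0]   # both slots share a block
child.write(1, b"ZZZZ")                              # copy-on-write happens here
```

In this model the clone touches only the block map and reference counts, so its cost doesn't depend on how much data the block holds. New storage is consumed only when one of the sharers later writes.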

ReFS v2 also uses the concept of cluster "bands" to group multiple chunks of data together for efficient I/O. This lends itself well to data tiering and the moving of data between tiers. It's much more efficient to move large chunks of data than to move many small chunks. Small writes accumulate in the fast storage tier until the point where an entire band of data can be written sequentially to disk. Sequential writes are always the most efficient and band transfers are always sequential. Other operations can take place against a band of data to optimize performance.
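A rough way to picture band-based tiering is a buffer that only moves data down in full-band units. The following toy model assumes a tiny, made-up band size and an in-memory "slow tier"; the `BandedTier` class is hypothetical and only meant to show the accumulate-then-flush pattern.

```python
class BandedTier:
    """Toy model: buffer small writes, flush one whole band at a time."""

    def __init__(self, band_size):
        self.band_size = band_size    # bytes per band (hypothetical value)
        self.fast_tier = bytearray()  # small writes accumulate here
        self.slow_tier = []           # each entry is one sequential band write

    def write(self, payload):
        self.fast_tier += payload
        # Move data to the slow tier only in full-band units.
        while len(self.fast_tier) >= self.band_size:
            band = bytes(self.fast_tier[:self.band_size])
            del self.fast_tier[:self.band_size]
            self.slow_tier.append(band)


tier = BandedTier(band_size=16)
for _ in range(10):
    tier.write(b"abcde")   # ten 5-byte writes, 50 bytes in total
```

Ten small writes end up as three full-band transfers, with the two leftover bytes waiting in the fast tier until another band fills.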

Speed

Creating a new 127GB VHDX file on an NTFS volume took 12 minutes and 47 seconds (see Figure 2). With the same disk (a 2TB SAS drive) formatted using ReFS, it happens almost as fast as you can click the Finish button on the wizard. That's because ReFS simply allocates the space for a file in the metadata and doesn't actually clear any existing data on the disk. Creating a virtual disk of any size on an ReFS v2 volume takes the same amount of time.

Figure 2. The wizard shows the speed of creating a VHDX file.
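The NTFS timing implies a sustained write rate that is easy to work out. The quick calculation below assumes "127GB" means 127 GiB and that the whole file was written during the 12 minutes and 47 seconds reported above.

```python
elapsed_s = 12 * 60 + 47     # 12 minutes 47 seconds from the NTFS test
size_mib = 127 * 1024        # assumes "127GB" means 127 GiB
throughput = size_mib / elapsed_s
print(f"{throughput:.1f} MiB/s")
```

That works out to roughly 170 MiB/s, which is in the range one might expect from sequential writes to a single spinning drive. It suggests the NTFS case is bound by writing out the full file rather than by any file system overhead.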

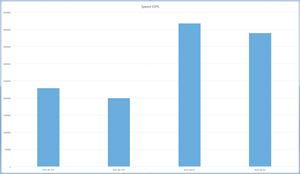

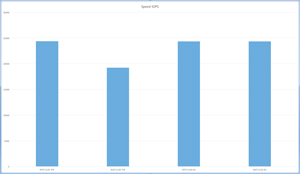

Another aspect of the speed question concerns the performance of the file system under different types of load. Microsoft has started using the DiskSpd utility to measure and publish performance of various workload types on storage subsystems. In order to test the raw speed of the two file systems I created identical volumes on a Dell R930 using four NVMe SSD drives. Each volume was created by selecting the Simple option using the New Volume wizard from Server Manager. The virtual disks were created to use the maximum amount of space with one formatted using NTFS and the second with ReFS.

Two DiskSpd profiles were used, with small (8K) and large (512K) block sizes. Results are shown in Figure 3 (8K is on the top and 512K is on the bottom) with an obvious speed advantage to Windows Server 2012 R2 and NTFS. This isn't really surprising, as Microsoft traditionally holds off until the later stages of the development process to do performance optimization. The results also don't show some of the real speed advantages of ReFS when performing both new virtual disk creation and disk snapshot merging.

Figure 3. Performance levels using 8K (top) and 512K (bottom) block sizes comparing ReFS and NTFS on Windows Server 2012 R2 and Windows Server 2016 TP4.
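For readers who want to set up a similar comparison, the sketch below builds DiskSpd command lines for the two block sizes. Only the 8K and 512K block sizes come from the tests described here; the duration, thread count, queue depth, write mix and target path are placeholder assumptions, not the profiles actually used.

```python
def diskspd_args(block_size, target, duration_s=60, threads=4,
                 outstanding=32, write_pct=30):
    """Build a DiskSpd argument list; everything but block_size is assumed."""
    return [
        "diskspd.exe",
        f"-b{block_size}",    # block size, e.g. 8K or 512K
        f"-d{duration_s}",    # test duration in seconds
        f"-t{threads}",       # threads per target
        f"-o{outstanding}",   # outstanding I/O requests per thread
        f"-w{write_pct}",     # percentage of requests that are writes
        "-r",                 # random rather than sequential I/O
        "-Sh",                # disable software and hardware write caching
        "-L",                 # collect latency statistics
        target,
    ]


# Hypothetical target file on the volume under test.
profiles = {size: diskspd_args(size, r"T:\testfile.dat") for size in ("8K", "512K")}
```

Each list could be handed to `subprocess.run` on a machine with DiskSpd installed, once for the NTFS volume and once for the ReFS volume, keeping every parameter except the target identical.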

Bottom Line

Windows Server 2016 is still a work in progress and hasn't been through the performance tuning phase yet. Microsoft has invested a great deal of effort to make ReFS a file system worthy of serving as the default for Hyper-V workloads and for hosting virtual hard drives. While speed is important it isn't necessarily the only measure of performance, especially when you take error recovery into consideration.

If your organization is a heavy user of checkpoints or snapshots, you could stand to benefit greatly from using ReFS as the file system of choice. The same goes for frequent creation of virtual hard disks. ReFS v2 doesn't directly compete with Offloaded Data Transfer (ODX) but does have some of the same functionality at the block level. ODX was envisioned to reduce the amount of traffic on a network caused by copy operations with both source and target on the same storage device.

Editor's note: The print edition of Redmond magazine and an earlier version of this article published online incorrectly and regrettably described ReFS as Reliable File System rather than Resilient File System.

About the Author

Paul Ferrill, a Microsoft Cloud and Datacenter Management MVP, has a BS and MS in Electrical Engineering and has been writing in the computer trade press for over 25 years. He's also written three books including the most recent Microsoft Press title "Exam Ref 70-413 Designing and Implementing a Server Infrastructure (MCSE)" which he coauthored with his son.