In-Depth

SQL Server 2016 Preview Reveals Path to Azure and Big Data

The next version of the Microsoft flagship database promises to add dynamic extensions to Azure and real time links between structured and unstructured data.

The next release of Microsoft SQL Server will be a major upgrade to the relational database platform. SQL Server 2016 introduces substantial new capabilities that promise to advance organizations to manage data for business intelligence and analytics. It also is notably more suited to run both on-premises and in the Microsoft Azure cloud.

While most relational database needs have long been met by SQL Server, Oracle, IBM DB2, and MySQL, business intelligence and analytics have become an evolving target in recent years, with Microsoft occasionally providing leadership in a very competitive marketplace. Combined with the recently released Power BI 2.0 (see "First Look, Power BI 2.0"), perhaps Microsoft has shown it wants to recapture the lead.

Among the new features Microsoft introduced in the Community Technical Preview 2.4 of SQL Server 2016 are a new Query Store, support for JSON documents, row-level security for filter predicates (SELECT), dynamic data masking, AES encryption for Endpoints and Always-On support for three synchronous replicas. The following is a breakdown of the improvements to analytics/BI and the new Azure support, respectively:

Analytics and Business Intelligence

- PolyBase: a real-time link between structured and unstructured data

- Enhanced speed with in-memory OLTP to support operational analytics

- Non-clustered Columnstore indexes on a rowstore with a unique key

- Temporal tables: built-in support for audit history tables and slowly changing dimensions

- Native support for "R" analytic applications

- More than 50 new DAX commands

- Parallel processing of tabular tables with multiple partitions

- Master data services (MDS) improvements, including faster Excel interaction

- Easier setup for Analysis Services for SharePoint PowerPivot mode

- Complete overhaul of the Reporting Services report building process

Azure-Related enhancements

- Backup to Azure improvements

- Stretch database: dynamic storage and query access of older data in Azure

- Always Encrypted technology: for increased confidence in cloud-based databases

Configurations

The editions for SQL Server 2016 CTP 2.4 are similar to prior releases, placed into three groups:

- Principal: Enterprise, Business Intelligence, Standard

- Specialized: Web

- Breadth editions: Developer, Express (three different Express editions)

The minimum server OS is Windows Server 2012 and it requires a Windows 8 or higher client. For the preview, Windows 10 was listed as a supported option only for SQL Server Standard, but not the Developer edition.

To get started, I installed it on a clean, but updated instance of Windows Server 2012 R2. Testers will come across the familiar SQL Server Installation Center main page with a similar look and feel.

Analytics and Business Intelligence

Over the years, Microsoft's advancement with analytics and business intelligence has come in spurts. The company came on strong back in 1999, with the introduction of SQL Server 7 OLAP Analysis Services. It was so strong that the query language MDX became the standard for multi-dimensional engines. A few years later came improvements to analysis services and SQL Server Integration Services (SSIS), a good integration tool. And soon after, came Reporting Services, a usable, if not fabulous reporting tool. Now, after a long quiet period, Microsoft has lately demonstrated a renewed emphasis on analytics. With SQL Server 2012, Tabular was introduced as part of Analysis Services, while a columnstore index was added to the core database engine. SQL Server 2014 made additional improvements, and now with SQL Server 2016 Microsoft has continued to make improvements to the analytics side of the business. And, finally, a long overdue update to Reporting Services lets developers create reports in HTML5 that can be rendered in modern browsers -- and not just in Internet Explorer. Unfortunately, the multi-dimensional side of Analysis Services has languished.

PolyBase

As a platform, Hadoop provides an excellent tool to collect and then make discoveries against unstructured and semi-structured data. But once the discoveries have been made, they still reside in Hadoop. Special connectors such as Apache Sqoop allow Extract, Transform and Load (ETL) processes to move the data to SQL Server, but they require some finesse to work. Doable, not seamless and it required the physical movement of data.

First introduced in the Microsoft Analytic Platform System (APS, formerly, Parallel Data Warehouse), PolyBase provides a direct, real-time bridge between the relational world and Hadoop. MVP James Serra noted in a blog post last year that PolyBase was similar to setting up and using a linked server (with the goal of seamlessly joining an organization's relational data with the unstructured world of Hadoop. And, not to omit Azure, PolyBase also has connectors into Azure blobs.

But, PolyBase is not simply a physical bridge. It's a sophisticated query engine designed to use T-SQL against Hadoop while bypassing the MapReduce protocol when appropriate, allowing analysts to focus on the data using SQL rather than the more complex MapReduce protocols.

Installing PolyBase is straightforward. It's just another feature selection that has two software requirements. Windows Server 2012 R2 already has the .NET Framework 4.0 installed, so that just leaves the Java SE Runtime Environment (JRE 7.51 or higher) requirement. My installation used the JRE file: jre-8u51-windows-x64.exe.

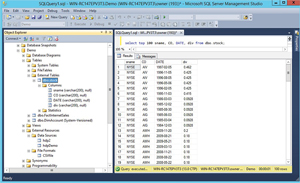

Setting up PolyBase is a more complicated series of steps. There are no wizards and the documentation is in its early stages. First you have to change the server configuration for the ‘hadoop connectivity' setting. Next, the PolyBase yarn-site.xml file needs to be updated with a value from your Hadoop system if your Hadoop system is YARN-based. With that done, you'll follow a three-step process to first create an external data source, then an external file format, and finally an external table that uses the external data source and the external file format you just created. And if all goes well, you'll have an external table setup, using your newly created external data source and external file format. After a few attempts, I was able to correctly setup the table and run a SELECT query against it. The external table process overlays a virtual table schema in PolyBase against a raw file stored in Hadoop. It's really more like a view because all the data stays in Hadoop. When I ran a SELECT query against the external table it returned a row set from the file stored on my Hortonworks Hadoop server (see Figure 1).

[Click on image for larger view.]

Figure 1. Configuration and query results from an external HortonWorks Hadoop Server.

[Click on image for larger view.]

Figure 1. Configuration and query results from an external HortonWorks Hadoop Server.

PolyBase is difficult to set up, and at least for the preview, the error messages were not always helpful but that's not the point. Direct, real-time dynamic access and interaction between relational data stores, data warehouses, and Hadoop and Azure storage is here. You can only hope that Microsoft will continue to improve this product and that it will only get better.

Operational Analytics

Historically, operations and reporting have been at odds with each other. Row-based, normalized relational databases form the backbone of a solid transactional system, built on the Atomicity, Consistency, Isolation, Durability (ACID) protocol. But reporting against a transactional system has been difficult, and can slow down a transactional system to a crawl. Reporting, now commonly referred to as business intelligence, is designed around a star schema optimized for fast analysis: Two approaches with two database designs requiring a complex set of ETL systems to move operational data to the reporting system. Real-time it is not.

In-Memory OLTP

Part of Microsoft's solution is to rework how the database engine works with table data in-memory, but still maintain transactional ACID. There are other in-memory systems on the market, but if you look closely, you'll notice that most have ripped out the ACID support. First introduced to us as part of SQL Server 2014, in-memory optimized tables gave us a first look at how Microsoft will evolve its existing transactional, ACID-supported systems for massive amounts of memory. With the 2016 release some of the upgrades include support for ALTER operations, complete support for collations and code pages, storage improvements and support for Multiple Active Result Set (MARS) connections.

Columnstore

A second part of operational analytics is the columnstore index. Introduced with SQL Server 2012, you could create a columnstore index. A good start, but limitations held back usage. In short order, SQL Server 2014 was released. Now a columnstore index can be clustered or nonclustered, and it's updatable. Excellent for a data warehouse, but not for operations. Operational tables need constraints. Now, with SQL Server 2016, you can combine a rowstore table with a UNIQUE key index with a nonclustered columnstore index.

Temporal Tables

With a name like Temporal, it's easy for this enhancement to get overlooked. But for anyone who's spent days writing and testing triggers to maintain audit and history tables for a transactional system, this enhancement is for you.

The key to temporal tables is the built-in ability to maintain a history of data changes to a table. Using the temporal table feature, you no longer have the need to write custom triggers to maintain an audit history of changes to a customer address table or a slowly changing dimension. Defined as part of the ANSI SQL 2011 standard, SQL Server 2016 now includes this feature.

In a temporal table, the start and end date columns are SYSTEM_TIME columns, with no ability to directly INSERT or UPDATE the values. For audit history, its perfect, but not for a business rules table. In a rules table, you need to specifically define the start and end date for a record. Perhaps in the future we'll get an option that allows us to either use the current system date, or to define the effective date of the record. The temporal table feature is available in both the CREATE TABLE and ALTER TABLE statements, so existing tables can be easily converted. Also note that a temporal table represents a permanent audit history of the data changes. This is very different from change data capture (CDC), and is not intended to replace CDC. They serve different functions.

R

Earlier this year Microsoft acquired Revolution Analytics, and with that acquisition, we now have the initial steps that incorporate R into SQL Server. Data scientists will create R models in Visual Studio and then upload, manage and run them directly in SQL Server.

New DAX Commands

More than 50 new DAX commands already available in Power BI Desktop are part of the CTP 2.4 preview (up from 20 DAX commands in CTP 2.2). Most are statistical and math-related, but there are a few general and date functions being added such as DATEDIFF, CALENDAR, ISEMPTY, GROUPBY, NATURALINNERJOIN and NATURALLEFTOUTERJOIN (click here).

Azure and the Cloud

Many of the enhancements in this preview extend to the cloud. PolyBase also has the ability to hook directly into your Azure blob storage. And like the Hadoop configuration, it, too, requires manual manipulation of an XML file, and the script creation of data sources, external file formats and external tables. Further extending the Azure connection, Microsoft has made improvements to its Backup to Microsoft Azure that supports block blobs instead of page blobs.

Always Encrypted

Technically, the Always Encrypted technology is a security upgrade. And its usefulness spans both on-premise databases, as well as those hosted in the cloud. But with accelerating migration of databases and systems to the cloud, security is at the top of requirements. The Always Encrypted feature gives you column-level security control over sensitive data, controlled by the application, so sensitive data is unavailable to DBAs and systems administrators. So if it is in the cloud, the cloud administrators do not have access to the encryption key.

Stretch

Stretch Database technology is positioned as a cloud-based way to automatically archive historical table data directly into Azure -- without losing user access to the data -- and ideally an economical alternative to adding more hardware. The stretch process is handled automatically and internally to the database, so no adjustments to client or user access is needed.

Setup is a multi-step process. The database server must be enabled using the server option ‘remote data archive'. Next, enable the database for stretch and, finally, the identified table. So, with a few clicks and a sign-on into Azure, the archival process should begin. Admin procedures include pausing and resuming the archive process, backup and restore, and most important, a process to unwind the stretch process and migrate the data back to an on-premises server. Undoubtedly, data access to the Azure cloud will be slower, but the cost trade-off could be justified.

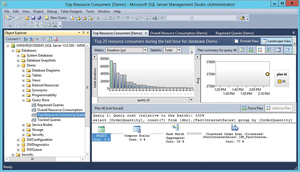

Query Store

Diagnosing query performance problems can be one of the most difficult tasks to perform for a DBA. And at best, the procedure cache has only the most recent execution plans. Ideally, a history of how a query's execution plan changed over time would be ideal. That's what the Query Store does (see Figure 2). Enabling this configuration option at the database level effectively creates a persistent database container that holds a history of past execution plans per query, along with their performance metrics. But it would be cruel if the Query Store just presented all this query information without any tools to act on it. Fortunately, the Query Store feature provides the Force Plan option. Either in SQL Server Management Studio (SSMS), or using the proc sp_query_store_force_plan, you can force the query to use a specific plan. While buried deep in the What's New pages with a slightly misunderstood name (for a DBA), Query Store could be one of the most exciting enhancements for 2016.

[Click on image for larger view.]

Figure 2. Query Store Database Property page.

[Click on image for larger view.]

Figure 2. Query Store Database Property page.

Analysis Services

Analysis Services has had a few enhancements. Perhaps the most important is parallel processing for tabular tables with two or more partitions. There are more than 50 new DAX functions and they added a third Analysis Services Server installation mode called PowerPivot Mode.

Reporting Services

With the release of Power BI 2.0, the expectation that Microsoft would update the somewhat abandoned Reporting Services was distant. Yet, as the CTP versions have advanced, the pending changes to Reporting Services have started to unfold. A major change in CTP 2.4 provides the ability to create reports that support HTML5 with the ability to work in all the modern browsers, not just Internet Explorer. Plus, the standalone Report Builder has a similar look and feel to the Power BI Desktop. It is clearly targeted at power users and analysts, not just IT developers. Perhaps subtle, but this is a big move for Microsoft toward the self-service BI space.

Integration Services

For SSIS, the main enhancement is the ability to establish a centralized SSIS catalog into a single user database that can then be incorporated into an AlwaysOn Availability group. Other features include the ability to do an incremental package deployment, and improvements to how version upgrades manage package layouts, annotations and connection managers.

Master Data Services

Master Data Services has a number of upgrades, with the primary one being on performance. Using data compression and some other techniques, Microsoft's marketing literature claims the Excel add-in will provide a 15x performance improvement. Other improvements include better security, transaction log maintenance, custom indexes, a revision history for members and enhanced troubleshooting and manageability.

Multiple Temp DB files

As part of the initial server configuration, you have easier control over the number of temp DB files to be created. The default is the lower of eight or the number of cores. Unfortunately, you only have one Temp DB directory setting, placing all of the temp DB files in the same directory.

So, is SQL Server 2016 -- as Microsoft describes it, "the biggest leap forward in Microsoft's data platform history?" That is up to the user to decide, but there are some great features, especially if your needs focus on analytics, business intelligence or extending to Azure.