Scale the Datacenter with Windows Server SMB Direct

RDMA networking has enabled high-performance computing for years, but Windows Server 2012 R2 with SMB Direct is bringing it to the mainstream.

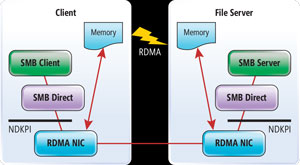

File-based storage has grown tremendously over the last several years, far outpacing block storage, even as both grow at double-digit rates. Cloud datacenters are deploying file-based protocols at an accelerating pace for virtualized environments, as well as for database infrastructure supporting Big Data applications. The introduction of Server Message Block (SMB) 3.0, with the efficiency and performance of the SMB Direct protocol, has opened new opportunities for file storage in Windows-based datacenters. SMB Direct, a key component of SMB 3.0, can use networking based on Remote Direct Memory Access (RDMA) to deliver near-SAN-level performance and availability with integrated data protection and optimized data transfer between storage and server (see Figure 1).

Figure 1. RDMA networking allows high-speed client to file service data transfers.

RDMA is a specification that has long provided a means of reducing latency in the transmission of data from one point to another by placing the data directly into its final destination memory, thereby eliminating unnecessary CPU and memory bus utilization. Used primarily for high-performance computing (HPC) for more than a decade, RDMA is now on the cusp of becoming a mainstream means of providing a scalable and high-performance infrastructure. A key factor fueling its growing use is that Windows Server 2012 R2 supports several RDMA networking options. I'll review and compare those options within Windows Server 2012 R2 environments.

Windows Scale-Out File Services

Windows Server 2012 R2 provides massive scale to transform datacenters into an elastic, always-on, cloud-like operation designed to run the largest workloads. The server OS provides automated protection and aims to offer cost-effective business continuity to ensure uptime. Windows Server 2012 R2 provides a rich set of storage features that let IT managers move to lower-cost industry-standard hardware rather than purpose-built storage devices, without having to compromise on performance or availability. A vital storage capability in Windows Server 2012 R2 is the Scale-Out File Server (SOFS), which allows the storage of server application data, such as Hyper-V virtual machine (VM) files, on SMB file shares. All file shares are online on all nodes simultaneously. This configuration is commonly referred to as an active-active cluster configuration.

A SOFS allows for continuously available file shares. Continuous availability tracks file operations on a highly available file system so that clients can fail over to another node of the cluster without interruption. This is also known as Transparent Failover.
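The failover behavior described above can be pictured with a small toy model in Python. This is only an illustrative sketch: the `Node` class, the `NodeDown` exception, and `read_with_failover` are hypothetical names invented here and are not part of any Windows or SMB API. Real Transparent Failover is handled inside the SMB 3.0 client and the cluster, not by application code.

```python
class NodeDown(Exception):
    """Raised when a (simulated) cluster node cannot service a request."""


class Node:
    """Hypothetical stand-in for one SOFS cluster node."""

    def __init__(self, name, online=True):
        self.name = name
        self.online = online

    def read(self, shared_store, path):
        # Every node serves the same shares (active-active), so every
        # node reads from the same shared store.
        if not self.online:
            raise NodeDown(self.name)
        return shared_store[path]


def read_with_failover(nodes, shared_store, path):
    """Try each node in turn; return (data, name of the node that served it)."""
    for node in nodes:
        try:
            return node.read(shared_store, path), node.name
        except NodeDown:
            continue  # fail over to the next node without surfacing an error
    raise NodeDown("no node available")


# Usage: the first node is offline, so the request is served by the second.
shared_store = {"vm1.vhdx": b"disk-contents"}
cluster = [Node("fs1", online=False), Node("fs2")]
data, served_by = read_with_failover(cluster, shared_store, "vm1.vhdx")
```

The point of the model is that the caller sees a successful read either way. Which node answered is an implementation detail.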

The Role of SMB Direct

The SMB 3.0 protocol in Windows Server 2012 R2 utilizes the Network Direct Kernel (NDK) layer within the Windows Server OS to leverage RDMA network adapters (see Figure 2). Using RDMA enables storage that rivals costly and infrastructure-intensive Fibre Channel SANs in efficiency, with lower latency, while operating over standard 10 Gbps and 40 Gbps Ethernet infrastructure. RDMA network adapters offer this performance capability by operating at line rate with very low latency, thanks to CPU bypass and zero copy (the ability to place data directly into the memory of the remote node without intermediate buffer copies). To obtain these advantages, all transport protocol processing must be performed in the adapter hardware, completely bypassing the host OS.

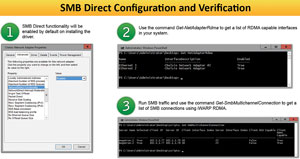

Figure 2. RDMA Networking Configuration on Windows Server 2012 R2.

With NDK, SMB can perform data transfers directly from memory, through the adapter, to the network, and over to the memory of the application requesting data from the file share. This capability is especially useful for I/O-intensive workloads such as Hyper-V or SQL Server, resulting in remote file server performance comparable to local storage.

In contrast, in traditional networking, a request from an application to a remote storage location must go through numerous stages and buffers (involving data copies) on both the client and server side, such as the SMB client or server buffers, the transport protocol drivers in the networking stack, and the network card drivers.

With SMB Direct, the RDMA NIC transfers data straight from the SMB client buffer, through the client NIC to the server NIC, and up to the SMB server buffer, and vice versa. This direct transfer operation allows the application to access remote storage at performance approaching that of local storage.
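The difference between the two data paths can be written down as a small Python sketch. The stage names are taken from the two paragraphs above and are a deliberate simplification; a real network stack has more layers, and the `describe` and `host_stages` helpers are hypothetical names used only for this illustration.

```python
# Host-software stages a request crosses between the SMB buffer and the NIC,
# per side. Simplified from the description in the text.
TRADITIONAL = {
    "client": ["transport protocol drivers", "network card driver"],
    "server": ["network card driver", "transport protocol drivers"],
}

# With SMB Direct the RDMA NIC moves data between the SMB buffers itself,
# so no host-software stages sit in between.
SMB_DIRECT = {"client": [], "server": []}


def describe(path):
    """Return the end-to-end path as a readable string."""
    hops = ["SMB client buffer"]
    hops += [f"client {stage}" for stage in path["client"]]
    hops += ["client NIC", "server NIC"]
    hops += [f"server {stage}" for stage in path["server"]]
    hops.append("SMB server buffer")
    return " -> ".join(hops)


def host_stages(path):
    """Count the intermediate host-software stages on both sides."""
    return len(path["client"]) + len(path["server"])


for name, path in (("traditional", TRADITIONAL), ("SMB Direct", SMB_DIRECT)):
    print(f"{name}: {host_stages(path)} host stages")
    print("  " + describe(path))
```

Each intermediate stage in the traditional path is a place where data may be copied and CPU cycles spent, which is the overhead that RDMA is meant to remove.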

Windows Server 2012 provides built-in support for using SMB Direct with Ethernet RDMA NICs, including iWARP (RDMA over TCP) and RoCE (RDMA over Converged Ethernet) NICs, to support high-speed data transfers. These NICs implement RDMA in hardware so that they can transfer data between each other without involving the host CPU. As a result, SMB Direct is extremely fast, with client-to-file server performance almost equaling that of local storage.

RDMA NICs offload the server CPU, resulting in more efficient Microsoft virtualized datacenter installations. SMB Direct in Windows Server 2012 provides higher performance by giving direct access to the data that resides on a remote file server, while the reduction in CPU load enables a larger number of VMs per Hyper-V server, resulting in CapEx and OpEx savings in power dissipation, system configuration and deployment scale throughout the life of the installation. Native system software support for RDMA networking in Windows Server 2012 R2 simplifies storage and VM management for enterprise and cloud IT administrators, with no network reconfiguration required.

Live migration is an important VM mobility feature and improving the performance of live migration has been a consistent focus for Windows Server. In Windows Server 2012 R2, Microsoft took these performance improvements to the next level. Live migration with RDMA is a new feature; it delivers the highest performance for migrations by offloading data transfers to RDMA NIC hardware.

iWARP: RDMA over TCP/IP

iWARP is an implementation of RDMA that uses ubiquitous Ethernet-TCP/IP networking as the network transport. iWARP NICs implement a hardware TCP/IP stack that eliminates the inefficiencies associated with software TCP/IP processing, while preserving all the benefits of the proven TCP/IP protocol. On the wire, iWARP traffic is thus indistinguishable from other TCP/IP traffic and requires no special support from switches and routers, or changes to network devices. Thanks to the hardware-offloaded TCP/IP, iWARP RDMA NICs offer high-performance, low-latency RDMA operation that's comparable to the latest InfiniBand speeds, along with native integration within today's large Ethernet-based networks and clouds.

iWARP is able to dramatically improve upon the most common and widespread Ethernet communications in use today and deliver on the promise of a single, converged Ethernet network for carrying LAN, SAN, and RDMA traffic with the unrestricted routability and scalability of TCP/IP. Today, 40 Gbps Ethernet (40 GbE) iWARP controllers and adapters are available from Chelsio Communications, while Intel Corp. has also announced plans for availability of iWARP Ethernet controllers integrated within upcoming Intel server chipsets.

The iWARP protocol is the open Internet Engineering Task Force (IETF) standard for RDMA over Ethernet. iWARP adapters are fully supported by the OpenFabrics Enterprise Distribution (OFED) from the OpenFabrics Alliance, with no changes needed for applications to migrate from specialized OFED-compliant RDMA fabrics such as InfiniBand to Ethernet.

Initially aimed at high-performance computing applications, iWARP is also now finding a home in datacenters thanks to its availability on high-performance 40 GbE NICs and increased datacenter demand for low latency, high bandwidth, and low server CPU utilization. It has also been integrated into server OSes such as Microsoft Windows Server 2012 with SMB Direct, which can seamlessly take advantage of iWARP RDMA without user intervention.

InfiniBand

InfiniBand is an I/O architecture designed to increase the communication speed between CPUs, devices within servers and subsystems located throughout a network. InfiniBand is a point-to-point, switched I/O fabric architecture. The devices at each end of a link have full access to the communication path. To go beyond a single link and traverse the network, switches come into play. By adding switches, multiple points can be interconnected to create a fabric. As more switches are added to a network, the aggregate bandwidth of the fabric increases.

High-performance clustering architectures have provided the main opportunity for InfiniBand deployment. Using the InfiniBand fabric as the cluster inter-process communications (IPC) interconnect may boost cluster performance and scalability while improving application response times. However, using InfiniBand requires deploying a separate infrastructure in addition to the requisite Ethernet network. The added costs in acquisition, maintenance and management have prompted interest in Ethernet-based RDMA alternatives such as iWARP.

Because it's layered on top of TCP, iWARP is fully compatible with existing Ethernet switching equipment, which can process iWARP traffic out of the box. In comparison, deploying InfiniBand requires installing and managing two separate network infrastructures, as well as specialized InfiniBand-to-Ethernet gateways for bridging between the two.

RDMA over Converged Ethernet (RoCE)

The third RDMA networking option is RDMA over Converged Ethernet (RoCE), which essentially implements InfiniBand over Ethernet. RoCE NICs are offered by Mellanox Technologies. Though it uses Ethernet cabling, this approach suffers from deployment difficulty and cost because it requires complex and expensive "lossless" Ethernet fabrics and Data Center Bridging (DCB) protocols. In addition, RoCE long lacked routability support, which limited its operation to a single Ethernet subnet.

Instead of using pervasive TCP/IP networking, RoCE relies on InfiniBand protocols at Layer 3 (L3) and higher layers, in combination with Ethernet at the Link Layer (L2) and Physical Layer (L1). RoCE leverages Converged Ethernet, also known as DCB or Converged Enhanced Ethernet, as a lossless networking medium. RoCE is similar to the Fibre Channel over Ethernet (FCoE) protocols in relying on networking infrastructure with DCB protocols. However, the need for such support has been viewed as a significant impediment to FCoE deployment, which raises similar concerns for RoCE.

The just-released version 2 of the RoCE protocol drops the IB network layer, replacing it with the more commonly used UDP (connectionless) and IP layers to provide routability. However, RoCE v2 does not specify how lossless operation will be provided over an IP network, or how congestion control will be handled. RoCE v2 currently suffers from an inconsistent premise: it continues to require DCB for Ethernet, while no longer operating within the confines of one Ethernet network.
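The layering differences among the RDMA options discussed so far can be summarized in a short Python sketch. The layer lists are a simplified view, listed top to bottom. The iWARP upper layers (RDMAP, DDP and MPA) come from the IETF iWARP specifications rather than from this article, and the helper names `is_ip_routable` and `runs_over_tcp` are hypothetical.

```python
# Simplified protocol stacks, top layer first.
STACKS = {
    "InfiniBand": ["IB transport", "IB network", "IB link"],
    "iWARP":      ["RDMAP", "DDP", "MPA", "TCP", "IP", "Ethernet"],
    "RoCE v1":    ["IB transport", "IB network", "Ethernet"],
    "RoCE v2":    ["IB transport", "UDP", "IP", "Ethernet"],
}


def is_ip_routable(stack):
    """A stack can cross IP routers only if it carries an IP layer."""
    return "IP" in stack


def runs_over_tcp(stack):
    """TCP brings its own reliability and congestion control."""
    return "TCP" in stack


for name, stack in STACKS.items():
    print(
        f"{name:<10} routable={is_ip_routable(stack)!s:<5} "
        f"tcp={runs_over_tcp(stack)!s:<5} {' / '.join(stack)}"
    )
```

Read this way, RoCE v2 gains IP routability but still lacks a TCP layer, which is why the questions about lossless operation and congestion control remain.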

Putting It All Together

While InfiniBand has been the most commonly deployed RDMA fabric, economic and technical requirements make Ethernet the preferred unifying network technology. InfiniBand over Ethernet, which is also known as RoCE, has been going through gyrations as it attempts to port the incompatible InfiniBand stack to the very different Ethernet world. In contrast, iWARP is a stable and mature standard, ratified by the IETF in 2007. iWARP was designed to leverage TCP/IP over Ethernet from the outset, which allows it to operate over cost-effective, regular Ethernet infrastructure and deliver on the promise of convergence, with Ethernet transporting LAN, SAN and RDMA traffic over a single wire. Today's iWARP NICs offer latency and performance that are essentially equivalent to InfiniBand, even at the micro-benchmark level.

The use of iWARP is rising in cloud environments, and it has been selected as the RDMA transport in the recently launched Microsoft Cloud Platform System (CPS), a turnkey scalable private cloud appliance. CPS is a complete solution consisting of up to four racks of Dell hardware running Windows Server 2012 R2, with the hypervisor, the management tools, and the automation needed for a large-scale, software-defined datacenter. The CPS networking infrastructure includes an Ethernet fabric and an iWARP-enabled storage fabric, which utilizes the SMB Direct protocol for high-efficiency, high-performance storage networking. With solutions shipping today over 40 GbE and integration within upcoming Intel server chipsets, iWARP appears to be the right choice for RDMA over Ethernet.