News

Nonprofit Advocacy Group Software Freedom Conservancy Recommends Ditching GitHub

The nonprofit organization Software Freedom Conservancy (SFC) recommends that users stop using the Microsoft-owned GitHub source code repository platform and its newly released GitHub Copilot.

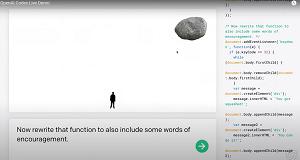

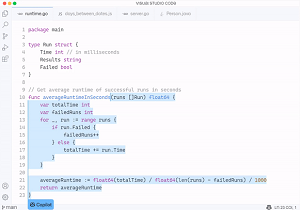

GitHub Copilot is an "AI pair programmer" coding assistant that uses advanced code-completion capabilities to create code snippets or entire programs through machine automation.

GitHub Copilot (source: GitHub).

Built on cutting-edge AI tech (OpenAI Codex), GitHub Copilot recently reached General Availability status and is offered at $10/month.

Turning Words into Code (source: OpenAI).

It has wowed developers with its ability to complete a coder's typing intentions and even create entire projects -- like a simple game -- just from typed-in commands. The new product also provides whole-line code suggestions, complete methods, boilerplate code, whole unit tests and even complex algorithms.

GitHub Copilot in Animated Action (source: GitHub).

Yet it has also sparked a backlash arising from legal, ethical, security and other concerns, especially from the Free Software Foundation (FSF), which last year deemed GitHub Copilot to be "unacceptable and unjust."

Now another open source-focused organization, Software Freedom Conservancy, has piled on. Like the FSF, the group is a strong advocate of strict free and open source software (FOSS).

In a June 30 blog post, SFC listed many grievances about GitHub's behavior, especially pertaining to the release of a paid service based on GitHub Copilot, whose AI model is trained on top-quality GitHub source code repos.

"Launching a for-profit product that disrespects the FOSS community in the way Copilot does simply makes the weight of GitHub's bad behavior too much to bear," SFC said in the post.

What's more: "We are ending all our own uses of GitHub, and announcing a long-term plan to assist FOSS projects to migrate away from GitHub."

As for that long list of grievances, the group has set up a dedicated site to feature them and other justifications for leaving GitHub, appropriately titled "Give Up GitHub!"

On that list, GitHub Copilot complaints get top billing:

Copilot is a for-profit product -- developed and marketed by Microsoft and their GitHub subsidiary -- that uses Artificial Intelligence (AI) techniques to automatically generate code interactively for developers. The AI model was trained (according to GitHub's own statements) exclusively with projects that were hosted on GitHub, including many licensed under copyleft licenses. Most of those projects are not in the "public domain", they are licensed under FOSS licenses. These licenses have requirements including proper author attribution and, in the case of copyleft licenses, they sometimes require that works based on and/or that incorporate the software be licensed under the same copyleft license as the prior work. Microsoft and GitHub have been ignoring these license requirements for more than a year. Their only defense of these actions was a tweet by their former CEO, in which he falsely claims that unsettled law on this topic is actually settled. In addition to the legal issues, the ethical implications of GitHub's choice to use copylefted code in the service of creating proprietary software are grave.

SFC said it won't mandate that its existing member projects move off the platform at this time, but it won't accept new member projects that do not have a long-term plan to migrate away from GitHub. It promised to provide resources to support any member projects that choose to migrate and help however else it can.

The lengthy June 30 post lists three GitHub Copilot-related questions that SFC says it asked Microsoft (which owns GitHub). According to SFC, it has gone a year without answers, and Microsoft has allegedly formally refused to provide them:

- What case law, if any, did you rely on in Microsoft & GitHub's public claim, stated by GitHub's (then) CEO, that: "(1) training ML systems on public data is fair use, (2) the output belongs to the operator, just like with a compiler"? In the interest of transparency and respect to the FOSS community, please also provide the community with your full legal analysis on why you believe that these statements are true.

- If it is, as you claim, permissible to train the model (and allow users to generate code based on that model) on any code whatsoever and not be bound by any licensing terms, why did you choose to only train Copilot's model on FOSS? For example, why are your Microsoft Windows and Office codebases not in your training set?

- Can you provide a list of licenses, including names of copyright holders and/or names of Git repositories, that were in the training set used for Copilot? If not, why are you withholding this information from the community?

The group's boilerplate description reads: "Software Freedom Conservancy is a nonprofit organization centered around ethical technology. Our mission is to ensure the right to repair, improve and reinstall software. We promote and defend these rights through fostering free and open source software (FOSS) projects, driving initiatives that actively make technology more inclusive, and advancing policy strategies that defend FOSS (such as copyleft)."

In its June 30 announcement, SFC acknowledged that "We expect this particular blog post will generate a lot of discussion."

About the Author

David Ramel is an editor and writer at Converge 360.