In-Depth

Network Control in the Azure Cloud

A new Network Controller and the same software-defined networking (SDN) stack that runs the Microsoft Azure cloud are among the significant SDN capabilities coming to Windows Server 2016.

- By Michael Otey

- 02/02/2016

Microsoft's quest to bring more automation to the datacenter took a key step forward with the release of Windows Server 2012 and, a year later, with the R2 version. Together with the introduction of Hyper-V Network Virtualization in the server OS and System Center Virtual Machine Manager, these releases gave Microsoft the makings of its first functional software-defined networking (SDN) stack. SDN has emerged as an important function for IT organizations looking to shift network intelligence from proprietary hardware to software. Now that Microsoft's goal is to "software define everything," the company is looking to bring the multitenant architecture that drives the Azure public cloud to the datacenter. Doing so will allow enterprises to scale out their systems with hybrid infrastructures that extend into Azure.

The focus with Windows Server 2016 is cloud-first: functionality and code from the Azure platform are brought down into the on-premises Windows Server platform. As you might expect, the new release breaks ground in a number of important areas, including UI changes, server virtualization enhancements, Windows Server and Hyper-V container support, and a new Nano Server installation option. One of the key features of Windows Server 2016 will be a new SDN stack, the same one that drives the Azure cloud. As with many other components of the server OS, Microsoft is looking to blur the lines between what powers the datacenter and what powers Azure. A look at Windows Server 2016 Technical Preview 4 (TP4) shows some significant advances in SDN.

SDN changes the way you deploy, manage and run your network infrastructure. SDN technologies are essential to help you move toward a more agile and scalable software-defined datacenter (SDDC) environment. Let's take a closer look at how the SDN capabilities in Windows Server 2016 have improved over the last version of the server OS.

SMB 3.0 Protocol Enhancements

The new release of the Server Message Block (SMB) protocol, SMB 3.0, that accompanied Windows Server 2012 brought a number of significant changes that Microsoft has carried over to Windows Server 2016. Performance-wise, the two most important changes are SMB Multichannel and SMB Direct. SMB Multichannel can spread SMB traffic over multiple network connections, providing increased performance and resiliency. SMB Direct takes advantage of Remote Direct Memory Access (RDMA) to increase network performance over RDMA-capable NICs by bypassing a large portion of the TCP/IP stack and accessing remote memory directly. Other significant SMB 3.0 features include SMB Transparent Failover, SMB Scale-Out and SMB Encryption.

Hyper-V Extensible Switch

The Hyper-V extensible switch is a virtual L2 network switch that enables you to connect your VMs to external networks, as well as to each other. The switch provides the ability for third parties to extend the virtual switch with extensions that monitor or filter network traffic, as well as replace the switch-forwarding logic.
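To make the three extension roles concrete, here is a minimal Python model of the pipeline: monitoring extensions observe each packet, filtering extensions can drop it, and an optional forwarding extension replaces the built-in MAC-table lookup. It's a conceptual sketch only; the real extensions are NDIS filter drivers (or Windows Filtering Platform callout drivers), and the class and callback names below are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Packet:
    src_mac: str
    dst_mac: str
    payload: bytes = b""


# Hypothetical extension hooks modeled on the roles described above.
Monitor = Callable[[Packet], None]             # observes traffic, never changes it
Filter = Callable[[Packet], bool]              # returns False to drop the packet
Forwarder = Callable[[Packet], Optional[str]]  # returns the egress port name


class ExtensibleSwitch:
    def __init__(self, mac_table: Dict[str, str]) -> None:
        self.mac_table = mac_table  # built-in L2 forwarding: MAC -> port
        self.monitors: List[Monitor] = []
        self.filters: List[Filter] = []
        self.forwarder: Optional[Forwarder] = None  # replaces built-in logic if set

    def process(self, pkt: Packet) -> Optional[str]:
        """Run one packet through the pipeline; return the egress port, or None if dropped."""
        for monitor in self.monitors:
            monitor(pkt)
        for flt in self.filters:
            if not flt(pkt):
                return None
        if self.forwarder is not None:
            return self.forwarder(pkt)
        return self.mac_table.get(pkt.dst_mac)  # None for an unknown destination
```

The ordering (observe, then filter, then forward) mirrors the article's description; a forwarding extension only sees packets that every filter has accepted.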

Inbox NIC Teaming

Before Windows Server 2012, NIC teaming was supported only by a very limited number of NIC vendors and products. Windows Server 2012 brought NIC teaming into the box, and it works even across NICs from different vendors. NIC teams can consist of up to 32 NICs and can be used on physical servers or in VMs. NIC teaming continues in Windows Server 2016, and it has also been enhanced with Hyper-V Switch Embedded Teaming, which I will cover later in this article.

Hardware Offloads

Windows Server 2012 also introduced several features that enable you to offload network processing to the NIC. Dynamic Virtual Machine Queue (VMQ) load-balances incoming VM traffic across multiple CPUs. The software-based Virtual Receive Side Scaling (vRSS) enables VMs to use multiple vCPUs to process network traffic. Single Root I/O Virtualization (SR-IOV) enables high-performance networking by allowing VMs to communicate directly with hardware NICs, bypassing the Hyper-V networking layers and the virtual switch.

Built-in Switch Management Using OMI

Open Management Infrastructure (OMI) is an open source, standards-based management framework that's built into Windows Server and can be used to manage physical network switches. Because it's standards-based, it can provide switch management for multiple vendors, including Arista Networks Inc., Cisco Systems Inc. and others. You can perform OMI switch management using Windows PowerShell or Virtual Machine Manager.

Windows Server Gateway (WSG)

WSG is delivered as a part of Windows Server or a dedicated virtual appliance. The WSG enables virtual networks to connect to non-virtualized network environments like external physical networks, the Internet and Azure.

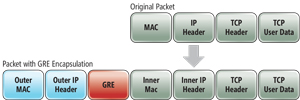

Hyper-V Network Virtualization

Network virtualization is a core foundation technology that helps enable SDN. Hyper-V Network Virtualization was first introduced with Windows Server 2012. Just as server virtualization abstracts a machine from its hardware, network virtualization abstracts the logical network from the physical network infrastructure. Virtual networks let you move VMs between hosts with no network configuration changes, and they replace the network isolation provided by VLANs, which must be managed at the physical switch level. Windows Server 2016 network virtualization is built on the Network Virtualization using Generic Routing Encapsulation (NVGRE) protocol, which isolates virtual networks from one another by tunneling layer 2 packets over layer 3 networks. This allows multiple subnets to be virtualized and to run simultaneously across the same underlying fabric. Virtual networks can span all of your Hyper-V virtualization hosts. VMs can run on any host, and Hyper-V Network Virtualization makes them independent of the underlying physical network infrastructure. NVGRE wraps the original network packet with an outer MAC header and an outer IP header (see Figure 1).

Figure 1. The network virtualization overview.
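The encapsulation in Figure 1 can be sketched in a few lines of Python. The sketch assumes the NVGRE header layout from RFC 7637: a GRE header with the Key bit set, protocol type 0x6558 (Transparent Ethernet Bridging), and a 32-bit Key field holding the 24-bit Virtual Subnet ID (VSID) plus an 8-bit FlowID. It builds only the GRE header and leaves out the outer IP and MAC headers; the function names are illustrative, not part of any Windows API.

```python
import struct

GRE_FLAGS_KEY_PRESENT = 0x2000       # K bit set; C and S bits clear; GRE version 0
PROTO_TRANSPARENT_ETHERNET = 0x6558  # payload is a complete Ethernet frame


def nvgre_header(vsid: int, flow_id: int = 0) -> bytes:
    """Build the 8-byte GRE header that NVGRE places in front of the inner frame."""
    if not 0 <= vsid < 2 ** 24:
        raise ValueError("VSID must fit in 24 bits")
    if not 0 <= flow_id < 2 ** 8:
        raise ValueError("FlowID must fit in 8 bits")
    key = (vsid << 8) | flow_id  # Key field = VSID (24 bits) + FlowID (8 bits)
    return struct.pack("!HHI", GRE_FLAGS_KEY_PRESENT, PROTO_TRANSPARENT_ETHERNET, key)


def nvgre_encapsulate(inner_frame: bytes, vsid: int, flow_id: int = 0) -> bytes:
    """Prepend the GRE header; the outer IP (protocol 47) and MAC headers are not built here."""
    return nvgre_header(vsid, flow_id) + inner_frame


def nvgre_decapsulate(packet: bytes) -> tuple:
    """Return (vsid, inner_frame) from a GRE header followed by its payload."""
    flags, proto, key = struct.unpack("!HHI", packet[:8])
    if not flags & GRE_FLAGS_KEY_PRESENT or proto != PROTO_TRANSPARENT_ETHERNET:
        raise ValueError("not an NVGRE packet")
    return key >> 8, packet[8:]
```

The receiving host uses the recovered VSID to decide which virtual network the inner frame belongs to, which is how tenants with overlapping addresses stay isolated on the same fabric.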

Unlike VLANs, Hyper-V Network Virtualization is configured entirely in software, and it can be managed by tools like Virtual Machine Manager and Windows PowerShell. Also unlike VLANs, virtual networks are highly scalable. Because the 802.1Q VLAN ID field is only 12 bits wide, even today's high-end switches support a maximum of 4,096 VLANs, while Hyper-V Network Virtualization uses a 24-bit virtual subnet ID and supports up to 16 million virtual networks. Hyper-V Network Virtualization enables the Windows administrator to perform many of the functions that were previously in the network administrator's domain. For instance, Virtual Machine Manager lets you model the multiple tiers of your application, including all of the required networking, and then deploy it all together as a service.
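The 4,096 and 16 million figures fall straight out of the header field widths: 12 bits for the IEEE 802.1Q VLAN ID versus 24 bits for the NVGRE virtual subnet ID. A quick check in Python, which prints 4,096, 16,777,216 and a ratio of 4,096x:

```python
VLAN_ID_BITS = 12  # width of the IEEE 802.1Q VLAN ID field
VSID_BITS = 24     # width of the NVGRE virtual subnet ID field

vlan_ids = 2 ** VLAN_ID_BITS
virtual_subnet_ids = 2 ** VSID_BITS

print(f"VLAN IDs:           {vlan_ids:,}")
print(f"Virtual subnet IDs: {virtual_subnet_ids:,}")
print(f"Ratio:              {virtual_subnet_ids // vlan_ids:,}x")
```

In practice 802.1Q reserves VLAN IDs 0 and 4095, so 4,094 VLANs are usable.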

IPAM Improvements

Windows Server 2016 has several improvements in IP Address Management (IPAM). First introduced with Windows Server 2012, IPAM lets you do away with tracking IP addresses in Excel spreadsheets. Instead, IPAM enables you to manage your IP addresses with the help of your Windows infrastructure. Included as a Windows Server 2016 feature, IPAM has improved integration with both DNS and DHCP. It can track IP address activity as well as IP utilization, trending and auditing across one or more datacenters. IPAM can provide unified IP management for both physical and virtual networks through its integration with Virtual Machine Manager.

IPAM uses scheduled Windows tasks to collect IP data from your infrastructure servers. In Windows Server 2016, IPAM enables delegated administration via Role-Based Access Control (RBAC). It also supports IPv4 /32 and IPv6 /128 subnets and can find free IP address subnets and ranges within an IP address block. Windows Server 2016 IPAM also supports DNS resource record collection, DNS zone management for both domain-joined Active Directory-integrated and file-backed DNS servers, and multiple AD forests.
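Finding free subnets in an address block is essentially set subtraction over CIDR ranges. The following sketch uses Python's standard `ipaddress` module to show the idea, including /32 allocations; it is not how IPAM implements the feature, and the `free_subnets` helper is hypothetical.

```python
import ipaddress
from typing import List


def free_subnets(block: str, allocated: List[str]) -> list:
    """Return the parts of `block` not covered by `allocated`, as a minimal sorted CIDR list."""
    free = [ipaddress.ip_network(block)]
    for used in map(ipaddress.ip_network, allocated):
        remaining = []
        for net in free:
            if used.subnet_of(net):
                # Carve the allocation out of this free range.
                remaining.extend(net.address_exclude(used))
            elif not net.subnet_of(used):
                # Disjoint from the allocation: keep it as-is.
                remaining.append(net)
            # Otherwise the free range lies entirely inside the allocation and is dropped.
        free = remaining
    # Merge adjacent free ranges back into the largest possible CIDR blocks.
    return sorted(ipaddress.collapse_addresses(free))
```

For example, `free_subnets("10.0.0.0/24", ["10.0.0.0/26", "10.0.0.128/26"])` returns the two free /26 ranges starting at 10.0.0.64 and 10.0.0.192.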

New Network Controller

The biggest change in Windows Server 2016 SDN support is the new Network Controller. The Network Controller is intended to manage all of the different network services and virtual appliances in your network infrastructure. It's a centralized, programmable management tool that's capable of managing both the virtual and physical networks and devices in your environment. It integrates with Virtual Machine Manager, or it can be controlled programmatically using Windows PowerShell or its REST API, essentially providing declarative configuration. For test and lab scenarios, the Network Controller can be deployed on a single VM. In a production environment, you can deploy it as a cluster of at least three physical systems or three VMs on separate hosts. Microsoft recommends the clustered deployment because the Network Controller is such a pivotal part of your network management infrastructure. The network management capabilities of the Network Controller are quite extensive. Some of the network aspects you can manage with the new Network Controller include:

- Fabric Management: IP subnets, VLANs, L2 and L3 switches, host NICs

- Firewall Management: Allow/Deny rules, firewall rules for virtual switch, rules for incoming/outgoing traffic

- Network Topology: Automated discovery of network elements

- Service Chaining: Rules for redirecting traffic

- Software Load Balancer: Central configuration of the Software Load Balancer Network Virtual Function

- Network Monitoring: Physical and virtual, activity, fault location, SNMP, link state and peer status, device health, network loss, System Center Operations Manager integration

- Virtual Network Management: Deploy Hyper-V Network Virtualization, deploy Hyper-V Virtual Switches, deploy vNICs, distribute network policies, support for NVGRE and VXLAN

- Windows Server Gateway Management: Deploy and configure WSGs, IPsec and GRE VPNs, L3 forwarding, BGP routing, logging configuration and state changes

The new Network Controller takes a central role in Windows Server 2016 networking, as shown in Figure 2.

Figure 2. The Windows Server 2016 Network Controller.

Everything you have in your network infrastructure runs through the Network Controller. It can control physical routers and switches, as well as Hyper-V VMs, virtual switches and virtual networks. There's a southbound API that can reach out and discover network devices, detect service configurations and gather information about your network. There's also a northbound API with a REST interface that enables you to gather information from the Network Controller. The northbound API also lets you configure and manage your network, as well as deploy new devices.
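As an illustration of the northbound REST interface, the sketch below builds (but does not send) a GET request that lists virtual networks and a PUT request that creates one. It assumes a hypothetical controller host name, a `/networking/v1` base path and a `resourceId`/`properties` JSON body shape; check the Network Controller REST API reference for the exact resource schemas. Authentication is deliberately left out.

```python
import json
import urllib.request

NC_HOST = "nc.contoso.local"                 # hypothetical Network Controller endpoint
API_ROOT = f"https://{NC_HOST}/networking/v1"  # assumed base path for northbound resources


def build_get(resource: str) -> urllib.request.Request:
    """Request that lists every instance of a resource type, e.g. "virtualNetworks"."""
    return urllib.request.Request(
        f"{API_ROOT}/{resource}", method="GET", headers={"Accept": "application/json"}
    )


def build_put(resource: str, resource_id: str, properties: dict) -> urllib.request.Request:
    """Request that declares the desired state of one resource (create or update)."""
    body = json.dumps({"resourceId": resource_id, "properties": properties}).encode("utf-8")
    return urllib.request.Request(
        f"{API_ROOT}/{resource}/{resource_id}",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
```

The PUT carries the whole desired state of the resource rather than a sequence of commands, which is what "declarative configuration" means in practice: the controller works out how to make the network match it.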

New Network Function Virtualization

While its name makes it easy to confuse with Network Virtualization, Network Function Virtualization is actually a different entity. Network Function Virtualization is focused on the functions that are being provided by your network. For instance, load balancing, firewalls and routers all provide specific types of network functions. Load balancing, firewall and service chaining are all network functions Microsoft will include with Windows Server 2016.

Windows Server 2016 is designed to run Network Function Virtualization services as virtual appliances in Hyper-V and also to integrate them into the Windows Server 2016 management stack. Virtual appliances are quickly becoming the preferred way that these Virtual Network Functions are being provided. There are several virtual network appliances available today in the Azure Marketplace.

Two of the virtual network functions included with Windows Server 2016 are the new Software Load Balancer (SLB) and the Datacenter Firewall. They are not the same as the older Network Load Balancing (NLB) feature and Windows Firewall that were included in Windows Server 2012. Instead, they're new technologies derived from Azure that have been tested at cloud scale. The new SLB delivers high throughput by spreading traffic across multiple Multiplexer (MUX) instances and virtual networks. Unlike the older NLB technology, the SLB doesn't have to reside on a specific host; it can be moved between hosts. It provides multitenant support and can be deployed using Virtual Machine Manager.
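One way to picture how several MUX instances can share the load is a deterministic hash over each flow's 5-tuple: because every MUX computes the same hash over the same backend list, whichever MUX receives a packet sends it to the same backend address (DIP). This is a generic sketch, not Microsoft's SLB algorithm; the `Flow` type, the `pick_dip` helper and the addresses are hypothetical.

```python
import hashlib
from typing import NamedTuple, Sequence


class Flow(NamedTuple):
    src_ip: str
    src_port: int
    dst_ip: str    # the virtual IP (VIP) the client connected to
    dst_port: int
    protocol: str


def pick_dip(flow: Flow, dips: Sequence[str]) -> str:
    """Map a flow to one backend DIP using a stable hash of its 5-tuple."""
    key = "|".join(map(str, flow)).encode("utf-8")
    # Use the first 8 bytes of SHA-256 so every MUX process gets the same number.
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return dips[digest % len(dips)]
```

A plain modulo hash remaps most flows when the backend list changes, which is why production load balancers generally pair hashing with per-flow state or consistent hashing.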

The Datacenter Firewall is a distributed, highly scalable, stateful, multitenant firewall. Datacenter Firewall policies are deployed to the Hyper-V Virtual Switch, and they follow VMs as they're moved between hosts. The Virtual Switch port host agent is guest OS-agnostic, and the firewall rules are configured in each Virtual Switch port, independent of the host. The Windows Server Gateway (WSG), which connects virtual networks to non-virtualized networks, is another virtual network function provided by Windows Server 2016.
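The stateful, per-port behavior can be modeled as a rule list attached to each virtual switch port plus a table of tracked connections: the first matching rule by priority decides a new flow, and packets belonging to an already allowed flow pass in either direction. This Python sketch is a simplified model under those assumptions, not the Datacenter Firewall's actual rule engine; all names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass(frozen=True)
class Rule:
    priority: int   # lower numbers are evaluated first
    direction: str  # "in" or "out", from the VM's point of view
    protocol: str
    port: int       # destination port; 0 matches any port
    action: str     # "allow" or "deny"


@dataclass
class PortFirewall:
    """Policy bound to one VM's switch port, so it moves with the VM, not the host."""
    rules: List[Rule]
    connections: Set[Tuple[str, int, str, int]] = field(default_factory=set)

    def allow(self, direction: str, protocol: str, local_port: int,
              remote_ip: str, remote_port: int) -> bool:
        conn = (protocol, local_port, remote_ip, remote_port)
        if conn in self.connections:
            return True  # stateful: traffic of an already allowed flow passes
        dst_port = remote_port if direction == "out" else local_port
        for rule in sorted(self.rules, key=lambda r: r.priority):
            if rule.direction == direction and rule.protocol == protocol and rule.port in (0, dst_port):
                if rule.action == "allow":
                    self.connections.add(conn)  # remember the flow for return traffic
                return rule.action == "allow"
        return False  # default deny when no rule matches
```

With an outbound rule allowing TCP 443 and an inbound deny-all rule, a VM can open HTTPS sessions and receive the replies, while unsolicited inbound connections are blocked.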

Hyper-V Switch Embedded Teaming (SET)

With Windows Server 2016, NIC teaming is integrated into the Hyper-V Virtual Switch. The teaming is switch-independent, so there's no need for static port or LACP (Link Aggregation Control Protocol) configuration. You can manage Hyper-V SET using Windows PowerShell or Virtual Machine Manager, but not with the older NIC teaming GUI. The new Hyper-V SETs are also much easier to create. There's no need to first create the NIC team in Server Manager and then bind it to a Hyper-V Virtual Switch; with SET, configuring NIC teaming is a one-step process. The following Windows PowerShell command creates a new SET:

New-VMSwitch -Name SETswitch -NetAdapterName "MyNIC1", "MyNIC2" -EnableEmbeddedTeaming $true

The teaming capability is a part of the Hyper-V switch providing greater simplicity and scalability. You can create up to eight uplinks in a SET. However, all of the NICs must be from the same manufacturer. The Hyper-V Virtual Switch must be created with the SET option -- you cannot convert existing Hyper-V Virtual Switches to use the new SET capability.
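The two SET constraints above (at most eight uplinks, all NICs from one manufacturer) are easy to check before running `New-VMSwitch`. Here is a hypothetical pre-flight check in Python; the `Nic` type and manufacturer names are placeholders, and in practice you would fill them from your own adapter inventory.

```python
from dataclasses import dataclass
from typing import List

MAX_SET_UPLINKS = 8  # limit stated in the article


@dataclass(frozen=True)
class Nic:
    name: str
    manufacturer: str


def set_preflight(nics: List[Nic]) -> List[str]:
    """Return a list of problems; an empty list means the NICs meet the stated SET rules."""
    problems = []
    if not nics:
        problems.append("a SET needs at least one NIC")
    if len(nics) > MAX_SET_UPLINKS:
        problems.append(f"{len(nics)} NICs exceeds the {MAX_SET_UPLINKS}-uplink limit")
    vendors = sorted({nic.manufacturer for nic in nics})
    if len(vendors) > 1:
        problems.append("NICs come from different manufacturers: " + ", ".join(vendors))
    return problems
```

Running a check like this first matters because, as noted above, an existing Hyper-V Virtual Switch cannot be converted to SET later.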

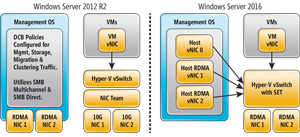

Converged Networking and Hyper-V Virtual Switch RDMA Support

Windows Server 2012 introduced Remote Direct Memory Access (RDMA) support as part of its SMB 3.0 enhancements. RDMA significantly improves network performance by offloading part of the TCP/IP network processing from the CPU to the NIC itself. However, Windows Server 2012 RDMA support was limited to physical NICs managed by the host OS: you could not bind a Hyper-V Virtual Switch to an RDMA-enabled NIC without disabling that NIC's RDMA functionality. Windows Server 2016 extends RDMA support to the Hyper-V Virtual Switch, essentially enabling you to virtualize RDMA. Hyper-V Virtual Switch support for RDMA enables VMs to take advantage of RDMA's performance benefits directly. Plus, with this RDMA support, NICs can be shared by vNICs created in the host OS, enabling you to simplify your host networking configuration (see Figure 3).

Figure 3. Hyper-V Virtual Switch with Switch Embedded Teaming and RDMA.

In Figure 3, note that Windows Server 2012 would have required four host NICs to provide RDMA networking for the host and NIC teaming for the VMs. Windows Server 2016 introduces support for RDMA on vNICs, so both the host vNICs and the VM vNICs can use RDMA. This Hyper-V Virtual Switch must have SET enabled. Because the host and VMs can share the RDMA capabilities of the physical NICs, you can reduce and converge the required host infrastructure. Microsoft states that Hyper-V Virtual Switch support for RDMA provides near-native RDMA performance to the vNICs. The following example shows how to use Windows PowerShell to create a SET-enabled Hyper-V Virtual Switch with two NICs, add two host vNICs to it and enable RDMA on those vNICs:

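# Create a SET-enabled switch teamed across two physical NICs
New-VMSwitch -Name MyRDMAswitch -NetAdapterName "MyNIC1", "MyNIC2" -EnableEmbeddedTeaming $true

# Add two host (management OS) vNICs to the switch
Add-VMNetworkAdapter -SwitchName MyRDMAswitch -Name SMBRDMA_1 -ManagementOS

Add-VMNetworkAdapter -SwitchName MyRDMAswitch -Name SMBRDMA_2 -ManagementOS

# Enable RDMA on the new host vNICs
Enable-NetAdapterRdma -Name "vEthernet (SMBRDMA_1)", "vEthernet (SMBRDMA_2)"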

PacketDirect (PD)

PacketDirect provides an alternative, high-performance packet-processing path for the Network Driver Interface Specification (NDIS), which is the Windows Server network driver interface. NDIS has been a staple in the Windows OS for 20 years or more. While reliable, NDIS was never designed to cope with the 100Gbps network speeds that today's fastest networks are approaching. PacketDirect enables compliant applications to tell the network stack what they need, thereby increasing its efficiency. As you might guess, applications must be network- and PacketDirect-aware to take advantage of PacketDirect. The approach resembles packet-processing techniques that originated in Linux and are used in load balancers and firewalls.

Other Important SDN Additions

Some of the other notable improvements in Windows Server 2016 SDN include:

- Support for VXLAN for the network virtualization encapsulation layer

- Virtual Machine Multi-Queue to enable 10G+ performance

- Hyper-V Virtual Switch now uses the same flow control engine as Azure

- Support for standardized protocols including: REST, JSON, OVSDB, WSMAN/OMI, SNMP, NVGRE/VXLAN
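Like NVGRE, VXLAN (RFC 7348) identifies each virtual network with a 24-bit ID, the VXLAN Network Identifier (VNI), but it carries that ID in an 8-byte header inside UDP, normally on destination port 4789. A minimal Python sketch of building and parsing that header; the function names are illustrative:

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned VXLAN destination port
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header: flags(8) reserved(24) VNI(24) reserved(8)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def vxlan_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking the I flag first."""
    word0, word1 = struct.unpack("!II", header[:8])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return word1 >> 8
```

Carrying the traffic in UDP lets the sender vary the outer UDP source port per inner flow, which gives physical switches entropy for ECMP hashing.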

The Network Is the Computer

Just as Sun Microsystems predicted years ago, the network is the computer. Networking is the foundation of today's datacenter. The upcoming release of Windows Server 2016 promises to deliver a number of significant new SDN enhancements that provide more control and flexibility in network management. The role of networking in Windows Server 2016 is clearly expanding, and in many ways the Windows Server administrator will be working in a space that was traditionally the province of the network administrator.