News

Microsoft Unveils Open Source AI Security Tool PyRIT

In the realm of IT security, the practice known as red teaming -- where a company's security personnel play the attacker to test system defenses -- has always been a challenging and resource-intensive task. Microsoft aims to streamline and enhance this process for generative AI systems with its latest offering.

Last week, the company released its internal generative AI red teaming tool, dubbed PyRIT, on GitHub for public use. Microsoft first developed PyRIT (short for Python Risk Identification Toolkit) in 2022 as a "set of one-off scripts" that its own red teams used on generative AI systems, including Copilot. Now, it said in its blog, PyRIT is "a reliable tool in the Microsoft AI Red Team's arsenal."

Inside PyRIT

PyRIT automates time-consuming red team tasks, such as the creation of malicious prompts in bulk, so organizations can spend more time investigating and buttressing their systems' weakest points. It is not, Microsoft emphasized, a replacement for human red teaming.

There are five main pillars that make up PyRIT, including a score generator that rates the quality of an AI system's outputs. Per Microsoft's blog, those five components are as follows:

- Targets: PyRIT supports a variety of generative AI target formulations -- be it as a web service or embedded in application. PyRIT out of the box supports text-based input and can be extended for other modalities as well. PyRIT supports integrating with models from Microsoft Azure OpenAI Service, Hugging Face, and Azure Machine Learning Managed Online Endpoint, effectively acting as an adaptable bot for AI red team exercises on designated targets, supporting both single and multi-turn interactions.

- Datasets: This is where the security professional encodes what they want the system to be probed for. It could either be a static set of malicious prompts or a dynamic prompt template. Prompt templates allow the security professionals to automatically encode multiple harm categories -- security and responsible AI failures -- and leverage automation to pursue harm exploration in all categories simultaneously. To get users started, our initial release includes prompts that contain well-known, publicly available jailbreaks from popular sources.

- Extensible scoring engine: The scoring engine behind PyRIT offers two options for scoring the outputs from the target AI system: using a classical machine learning classifier or using an LLM endpoint and leveraging it for self-evaluation. Users can also use Azure AI Content filters as an API directly.

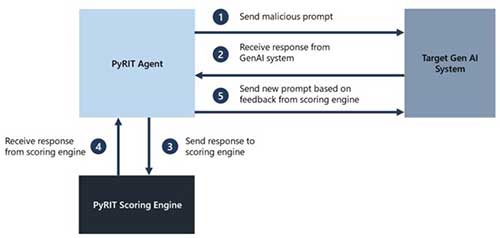

- Extensible attack strategy: PyRIT supports two styles of attack strategy. The first is single-turn; in other words, PyRIT sends a combination of jailbreak and harmful prompts to the AI system and scores the response. It also supports multiturn strategy, in which the system sends a combination of jailbreak and harmful prompts to the AI system, scores the response, and then responds to the AI system based on the score. While single-turn attack strategies are faster in computation time, multiturn red teaming allows for more realistic adversarial behavior and more advanced attack strategies.

- Memory: PyRIT's tool enables the saving of intermediate input and output interactions providing users with the capability for in-depth analysis later on. The memory feature facilitates the ability to share the conversations explored by the PyRIT agent and increases the range explored by the agents to facilitate longer turn conversations.

Using PyRIT, Microsoft was able to red team "a Copilot system" -- from defining a harmful category to test for, to creating thousands of malicious prompts, to grading the AI's outputs -- in hours instead of weeks.

[Click on image for larger view.]

How PyRIT works. Source: Microsoft

[Click on image for larger view.]

How PyRIT works. Source: Microsoft

Red Teaming Generative AI vs. Everything Else

PyRIT is designed for generative AI systems, not "classical" AI systems or traditional (non-AI) software. This distinguishes it from Counterfit, an earlier red teaming tool that Microsoft developed internally before also releasing it to GitHub in 2021.

"Although Counterfit still delivers value for traditional machine learning systems, we found that for generative AI applications, Counterfit did not meet our needs, as the underlying principles and the threat surface had changed," Microsoft said. "Because of this, we re-imagined how to help security professionals to red team AI systems in the generative AI paradigm and our new toolkit was born."

When it comes to red teaming, generative AI is a far cry from non-generative AI. Microsoft identified three main differences that warranted the creation of a tool like PyRIT that's meant specifically for generative AI systems:

- No one generative AI system is built exactly like the other. Their input and output data formats can be different. Some are standalone tools while others are add-ons to existing solutions. The wide variety of generative AI systems means red teams have to test for many different combinations of risks, architectures and data types -- a "tedious and slow" process using red teaming strategies.

- Unlike other software systems, generative AI is unlikely to consistently produce the same output from the same input. Traditional, software-oriented red teaming strategies don't account for generative AI's "probabilistic nature."

- Besides security risks, generative AI is also a Pandora's Box of ethical risks. Traditional red teaming strategies typically only test for the former, not the latter. An effective generative AI red teaming strategy, Microsoft argued, should be able to test for both simultaneously. (It's worth noting that Microsoft has particularly rigorous and extensive "responsible AI" standards for its products.)

"PyRIT was created in response to our belief that the sharing of AI red teaming resources across the industry raises all boats," said Microsoft. "We encourage our peers across the industry to spend time with the toolkit and see how it can be adopted for red teaming your own generative AI application."