Joey on SQL Server

Microsoft Ignite 2023: Data Analytics Platform Fabric Analytics Now Available

Microsoft's latest offering will also continue the company's trend of strong AI integration hooks.

- By Joey D'Antoni

- 11/15/2023

Wednesday, at the Microsoft Ignite conference in Seattle, Microsoft made numerous announcements about data platform solutions. The biggest of those announcements is the new Fabric Analytics software-as-a-service offering, which is now generally available. You can read my initial discussion of Fabric from Microsoft Build in May 2023 here. I wanted to dive deeper into some of the additional Fabric-related news from Ignite, but first, let's talk about some of the common challenges faced in data warehousing and analytical solutions.

As I'm writing this, I'm about to be on a client call to discuss moving terabytes of data around to build a new data warehouse structure -- this is inherently the biggest challenge of a business intelligence project, whether extract, transform, and load (ETL) patterns are facilitated by tools like SQL Server Integration Services, or more modern extract, load, and transform (ELT) solutions are enabled by data lakes.

The hardest part of data warehouse projects is moving data out of operational stores like point-of-sale (POS) systems or enterprise resource planning (ERP) systems into a data warehouse structure. The reason why ELT/ETL development is challenging is that there are many source systems. Those systems change over time, and development must chase those changes while, at the same time, try not to impact the performance of the source system.

Another area for improvement of ETL/ELT is an inherent data latency built into the process. One observation I have from years of consulting is that businesses that implement a data warehouse will start by doing a nightly load process to ingest data from those operational systems. Over time, business leadership tends to want to reduce the latency of that data, meaning refreshes become more frequent. This business requirement will commonly start with a four-hour refresh cycle, and then, in many cases, a demand for real-time data ensues. While changing a batch process to the four-hour window typically doesn't require many ETL changes, real-time data means that a lot of engineering work needs to happen to stream data from those operational stores into the warehouse.

Since changing to a live data model is complex, organizations may take several suboptimal approaches. The most common one is to do live reporting from the operational system. While this may sound like an acceptable idea, the problem is that users running reports against the ERP or POS impact the performance and operation of those operational systems. Running reports from live operational systems is not good; however, it is a typical customer pattern.

Microsoft has tried to address this several times over the years. SQL Server has a Change Data Capture (CDC) feature that reads the transaction log and captures changes to specified tables. This pattern is not unique to SQL Server; other databases also have various methods of streaming changing data out of the database. As part of the Fabric announcements at Ignite, Microsoft has introduced Mirroring in Microsoft Fabric, allowing for near real-time replication from heterogeneous data sources and ultimately landing in OneLake as Delta tables, the lingua franca of Fabric. Other than the slightly unfortunate name (mirroring is/was the original SQL Server disaster recovery mechanism, which shares a similar foundation with this feature), the concept of being able to easily stream data in real-time from a wide variety of data sources into a standard format that analytical tools can digest is one of the holy grails of business analytics.

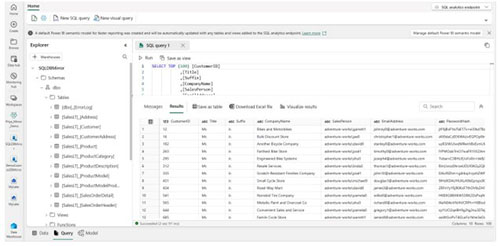

After mirroring a database into Fabric, users can access it using the data warehouse experience in Fabric, which includes a SQL Analytics endpoint, which houses the metadata of the Delta tables and can query those Delta tables using transact-SQL (T-SQL).

[Click on image for larger view.]

Figure 1. A look at Microsoft's Fabric service.

[Click on image for larger view.]

Figure 1. A look at Microsoft's Fabric service.

The mirroring announcement at Ignite will include Azure SQL DB, Cosmos DB, and Snowflake as data sources in private preview. Microsoft plans to add data sources for SQL Server, Azure MySQL, Azure PostgreSQL and MongoDB in 2024.

It wouldn't be a product announcement 2023 if it didn't talk about AI, and the Fabric GA announcement is no different. While there are some vague references in the product announcements to data being the foundation of AI and how Fabric can help your organization build better AI solutions, there are also some actual features, including the announcement of Copilot for Fabric for Power BI, Data Factory, Data Engineering and Data Science experiences. While I have not been testing this, I have been testing the T-SQL Copilot experience in Azure Data Studio. I have been reasonably impressed at the recent improvements I have observed while using the T-SQL Copilot service.

One of the other announcements around Fabric that I was happy to see was an integration with Microsoft's governance solution, Purview. Fabric will now support applying sensitivity labels to data and to take advantage of data loss prevention (DLP) and sensitive information types (SIT), which can help administrators protect against internal and external data exfiltration attacks. Microsoft has also simplified the auditing experience in Fabric by automatically capturing user and admin actions in their audit logs and integrating with Purview.

There were also several other data platform announcements at Ignite. Azure PostgreSQL has some improvements around IO performance and leverages V2 managed disk, which should offer better overall performance. The Managed Instance service now has a free trial offering and, more importantly, is adding two key features—the ability to start and stop the service to reduce costs for development environments and the support for spanning availability zones.

Finally, Microsoft has lowered the Azure SQL Database hyperscale costs by 35 percent. This assessment is purely my speculation, but commonly, when you see a significant price shift like that, it aims to move customers from an existing service tier onto another tier. In this case, Hyperscale might be a better fit for workloads than General Purpose Azure SQL DB, even if your database is at most five terabytes.

Conference season always brings many announcements that we must digest as analysts and technology professionals. Sometimes, we see a lot of fluff, but other solutions can be game changers. Suppose Microsoft can get mirroring to work consistently with the variety of data sources they are promising. In that case, a game-changing feature can make Fabric a more attractive platform.

About the Author

Joseph D'Antoni is an Architect and SQL Server MVP with over two decades of experience working in both Fortune 500 and smaller firms. He holds a BS in Computer Information Systems from Louisiana Tech University and an MBA from North Carolina State University. He is a Microsoft Data Platform MVP and VMware vExpert. He is a frequent speaker at PASS Summit, Ignite, Code Camps, and SQL Saturday events around the world.