Joey on SQL Server

Clear Connection: Building APIs for Databases

You don't need to be a software developer to quickly create an API that will retrieve the information you need.

- By Joey D'Antoni

- 05/05/2023

One of the recent announcements at the SQLBits conference in Wales in March was the public preview of a new GitHub project from Microsoft called the Data API Builder. It allows developers to quickly build APIs for Azure SQL Database and several other databases, including popular open source databases like MySQL, PostgreSQL and the Microsoft cloud NoSQL database Cosmos DB. In discussions with my clients and other folks at conferences, one of the complaints I've heard is that building Restful APIs against databases should be more accessible. If you've ever had this complaint, the Data API Builder is the tool for you.

I'll be the first to admit that I'm not a great software developer. While I write SQL, PowerShell, bash and various scripts for my job, I won't build an end-to-end application. Despite this, I created a small application using the Data API Builder. I was incredibly impressed by the simplicity of building and running an API for an Azure SQL Database.

In January, I was looking at Reddit, specifically the Azure subreddit, and someone asked a question about finding a way to get all the resource data around Azure Virtual Machines, specifically the type of machine, the number of CPUs, the amount of RAM and various other performance metrics. It took me a little while and some help from friends, but I got a PowerShell script together that gathered all of the data from each Azure region and returned all that information.

While that was handy, a giant list of PowerShell output wasn't helpful, so I needed to persist the data. While I could have gone directly into an Azure SQL Database, I had decided that I wanted to write an article on this. I used the convertto-json PowerShell function and persisted it to Azure Blob Storage. Because Azure periodically changes the VMs available in each region, I needed to run this regularly. These changes meant I put my PowerShell script into Azure Automation to run on a weekly schedule. While I could have just written data into Azure SQL Database directly from there, that wasn't the most compelling option for this article.

You have several choices for various serverless tasks on the Azure platform. The most developer-focused one is Azure Functions. Additionally, there is Automation, as well as my personal favorite, Logic Apps. Logic Apps is also a "serverless" construct that consists of a trigger and an action. In my case, I used an Azure Blob Storage trigger, which would act as my Automation job wrote a file to my storage container. The action was to execute a stored procedure in my Azure SQL database to ingest the data into my Azure SQL DB, which can ingest data from Azure Blob Storage.

My initial architecture of this led to a minor disaster -- the trigger was on the container where I was writing my file output, which meant every time a new region was processed, the stored procedure would execute. This redundant processing caused a lot of duplicate rows and blocking. I resolved this by creating a new storage container called "status," where I write one 10-byte file at the end of my PowerShell process, which corrected my data loading problem. You'll note that I used a cursor and dynamic SQL in my stored procedure, both anti-patterns. While I don't endorse you writing that SQL into your application code, because of the way Azure SQL DB talks to Azure Blob Storage, that was my best option for ingesting data, and I know the data set will not grow substantially, meaning the performance impact will be minimal.

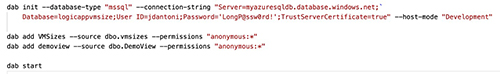

Now that data is in this database; you can use the Data API Builder. First, I cloned the GitHub repository to my local machine and used a SQL login to connect to my database. After I cloned the repo, I used the commands shown in the below screenshot:

[Click on image for larger view.] Figure 1.

[Click on image for larger view.] Figure 1.

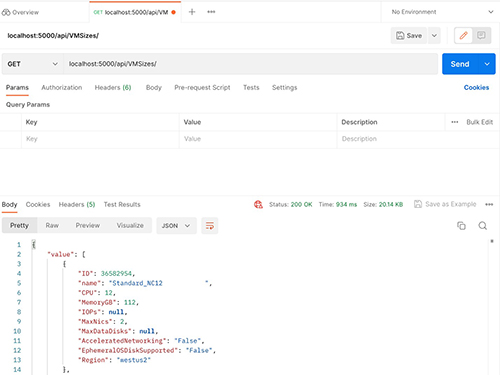

The first command adds my connection string to the dab-config.json file, and the second and third commands define a table and a view (in a recent presentation, an attendee asked if this worked with a view, so that was an additional test), which also get added to the file. The last command dab start runs the data api service locally on my Mac. Using Postman, a Rest API tool, I make API calls from my local machine to my database, as shown below.

[Click on image for larger view.] Figure 2.

[Click on image for larger view.] Figure 2.

At this point, I knew I wanted to deploy my application to the Internet, so I built a Docker container using the included Dockerfile in the git repository and deployed it to an Azure Container Repository. With a couple of tweaks from there, I was able to deploy that container to an Azure App Service. The main one was that I had to store that config file in Azure File Storage to persist the file for the container.

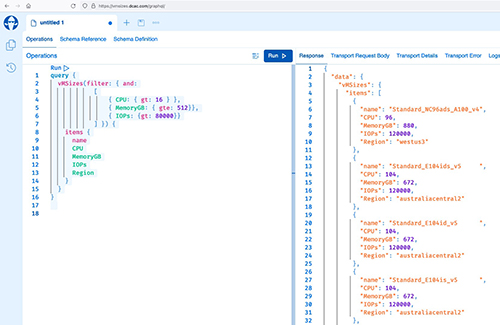

[Click on image for larger view.] Figure 3.

[Click on image for larger view.] Figure 3.

Once deployed to the app service, my application was now accessible on the Internet—where in the previous screenshot, I'm using a GraphQL query (which is also a component of the data api builder), which is filtering the list of VMs to the West US2 region with twenty or more vCPUs, more than one gigabyte of memory, and greater than 80000 I/O operations per second (the measure of disk performance). While my application doesn't have an actual front end beyond the GraphQL interface, it has already been convenient when I need to see what VM sizes are available in which region and to compare various performance characteristics.

I didn't cover security. You may have noticed I used a SQL login with a username and password in my local application example. However, all the connections to databases, storage accounts, Logic Apps and Web Apps all have Azure Active Directory managed identities, which means no passwords are needed for all those services to communicate with each other.

The Data API Builder makes building an API on top of your database quick and easy. I was very impressed by the ease of use I could get my service up and running both locally and online in Azure. I showed it to a couple of my clients who develop applications, and the services simplicity blew them away.

About the Author

Joseph D'Antoni is an Architect and SQL Server MVP with over a decade of experience working in both Fortune 500 and smaller firms. He is currently Principal Consultant for Denny Cherry and Associates Consulting. He holds a BS in Computer Information Systems from Louisiana Tech University and an MBA from North Carolina State University. Joey is the co-president of the Philadelphia SQL Server Users Group . He is a frequent speaker at PASS Summit, TechEd, Code Camps, and SQLSaturday events.