Though it took longer than planned, Rackspace, the first OpenStack co-sponsor and co-contributor along with NASA, says its cloud compute service is now based on the OpenStack platform. That means Rackspace has transitioned its existing Cloud Servers compute service to OpenStack, though it had originally aimed to finish that work by the end of last year. Customers can start using it May 1.

It's always a good thing that the key sponsor is eating its own dog food. But OpenStack is a work in progress with much functionality to be added. The OpenStack Project’s backers are meeting this week in San Francisco for the OpenStack Design Summit and Conference.

Some beg to differ, but OpenStack appears to have the most momentum among the open source cloud efforts in play, with 155-plus sponsors and 55 active contributors. It just gained two new heavyweights, with IBM and Red Hat acknowledging they are on board.

The news comes as another OpenStack supporter, Hewlett-Packard, last week launched HP Cloud Services. Dell today also launched its Emerging Solutions Ecosystem, a partner program to bring interoperable and complementary tools to its Dell Apache Hadoop and Dell OpenStack-Powered Cloud solutions.

The first three Dell partners are Canonical, which is using its Ubuntu Linux distribution to power Dell's OpenStack solution; cloud infrastructure vendor enStratus; and Mirantis, a provider of OpenStack infrastructure and apps. Mirantis said it has already completed a number of private cloud deployments with Dell. Mirantis provides custom solutions that work with Dell's OpenStack-based hardware, software and reference architecture.

Yet despite these latest milestones for OpenStack, critics argue it is far from mature. Perhaps the most prominent critic is member Citrix, which two weeks ago broke ranks from OpenStack and released to the Apache Software Foundation an alternative, CloudStack. While OpenStack is an alternative to Amazon, CloudStack is aiming to provide interoperability with it.

Another open source cloud operating platform that supports compatibility with Amazon is Eucalyptus, which last month inked an agreement with Amazon enabling it to license the Amazon Web Services APIs. On the heels of that agreement, Eucalyptus yesterday announced it raked in $30 million in Series C funding from Institutional Venture Partners, which joins investors Benchmark Capital, BV Capital and New Enterprise Associates, bringing its grand total to $55 million.

Eucalyptus and Amazon don't support OpenStack. Rackspace CEO Lanham Napier called out Amazon in a CRN interview as a proprietary cloud provider that locks its customers in.

Meanwhile, the OpenStack Project is looking ahead. The group earlier this month issued its latest release of the platform, called Essex. The new release adds a Web-based dashboard, code-named Horizon, for managing OpenStack clouds and an identity and authentication system, code-named Keystone.

The next release on the agenda is Folsom, which will add a number of new features, many of which are being determined at this week's OpenStack Summit. A key area of focus will be a project code-named Quantum, which will provide advanced networking, said Jonathan Bryce, chairman of the OpenStack Project Policy Board and co-founder of Rackspace Cloud.

"That will bring some really great capabilities for managing networks and not just routing traffic to virtual machines and back out to the Internet but creating complex network configurations that let you do more advanced security, that lets you do quality of service, VLAN management, really the kinds of things that enterprises want to do," Bryce said. The release goal for Folsom is this fall.

Also on the open source front, the VMware-led Cloud Foundry initiative last week celebrated its first anniversary by announcing it has recruited partners ranging from service providers to ISVs to build PaaS-based services and private cloud solutions based on its stack. The latest are Cloud9, Collabnet, ServiceMesh, Soasta and X.commerce.

Cloud Foundry last week also demonstrated the deployment of an application to four Cloud Foundry-based clouds. Each took only a few minutes and didn't require code changes. Cloud Foundry also open-sourced under an Apache license BOSH, a tool to facilitate the deployment and management of instances to Cloud Foundry. So far it has received support from VMware for vSphere and Amazon Web Services (AWS).

Partners supporting Cloud Foundry can build platform as a service (PaaS) clouds that run on premises, off site or at service providers. Cloud Foundry is viewed as a competitor to other PaaS offerings such as Microsoft's Windows Azure, Google App Engine and Salesforce.com's Heroku and Force.com.

Update: Based on clarifications from companies mentioned in this blog, we've corrected this post to reflect that Rackspace Cloud Servers already supported object storage services, code-named Swift. Rackspace Cloud Servers on May 1 will support OpenStack compute, storage and networking services. The post also now clarifies that new in the OpenStack Essex release are a Web-based dashboard, code-named Horizon, and identity services, code-named Keystone. Swift is not part of Essex. -- JS.

Posted by Jeffrey Schwartz on 04/19/2012 at 1:14 PM

Amazon Web Services today launched the AWS Marketplace, an online store that lets enterprise users procure cloud-based development tools, business applications and infrastructure from software suppliers.

This is a major step forward for the leading provider of cloud services: The company is not only offering enterprises an alternative to running compute and storage infrastructure in their own datacenters, it is now using its cloud infrastructure to become a major software-as-a-service (SaaS) distributor.

Amazon customers can rent applications by the hour or by the month, run them on the AWS portfolio of cloud services and pay for them on the same bill. Already on board are well-known players such as 10gen, CA, Canonical, Couchbase, Check Point Software, IBM, Microsoft, Red Hat, SAP and Zend, as well as open source purveyors WordPress, Drupal and MediaWiki, said Amazon CTO Werner Vogels in a blog post.

The move is the latest effort by Amazon to extend the breadth of its enterprise cloud offerings and its reach. Just yesterday, Amazon launched a new partner program that it plans to roll out this year, aimed at certifying ISVs, systems integrators and consultants. In addition to distributing apps from major software providers, Vogels underscored that the marketplace will democratize the distribution of wares from lesser-known developers.

"The way businesses are buying applications is changing," Vogels said. "There is a new generation of leaders that have very different expectations about how they can select the products and tools they need to be successful. It has had a true democratization effect; no longer does the dominant vendor in a market automatically get chosen."

Amazon has grouped the marketplace into three categories: software infrastructure (development, app servers, databases, data caching, networking, operating systems and security), developer tools (issue and bug tracking, monitoring, source control and testing) and business software (business intelligence, collaboration, content management, CRM, e-commerce, HPC, media, project management and storage and backup).

The products are distributed as Amazon Machine Images (AMIs), which run on EC2 instances. Customers can search for products, each of which is described on a "detail" page that provides an overview, ratings, versioning data, support offerings, pricing and a link to the end user license agreement (EULA).

Mimicking the "Click-to-Buy" model of its online consumer store, users can "Click-to-Launch" applications in the AWS Marketplace. "We've streamlined the discovery, deployment, and billing steps to make the entire process of finding and buying software quick, painless, and worthwhile for application consumers and producers," wrote Amazon Web Services technical evangelist Jeff Barr, on the AWS blog.

Certainly Amazon is not the first to launch a marketplace selling software for enterprises. But given its growing presence as a provider of cloud infrastructure, not to mention the huge success of its consumer retail site, Amazon could become a key player in the sales and procurement of enterprise apps, dev tools and infrastructure.

Posted by Jeffrey Schwartz on 04/19/2012 at 1:14 PM

Not always seen as the most partner-friendly cloud provider, Amazon Web Services (AWS) today took a key step toward trying to reverse that perception.

The company has formed the AWS Partner Network, a program aimed at supporting a wide cross-section of partners, including ISVs, third-party cloud providers (including SaaS and PaaS), systems integrators, consulting firms and managed services providers (MSPs).

"Partners are an integral part of the AWS ecosystem as they enable customers and help them scale their business globally," said AWS technology evangelist Jinesh Varia in a blog post announcing the new program. "Some of our greatest wins, particularly with enterprises, have been influenced by our partners. This new global program is focused on providing members of the AWS partner ecosystem with the technical information, and sales and marketing support they need to accelerate their AWS-based businesses."

The move comes as one of AWS' largest rivals, Rackspace Hosting, has expanded its partner program this year amidst the growing influence of the OpenStack Project it has championed.

But Amazon's actions also reflect the unrelenting growth of its cloud business, which will need experienced partners to help expand its footprint. In the latest measure of how expansive AWS is becoming, one estimate suggests it is carrying 1 percent of Internet traffic, according to a report in Wired today. Citing a study by startup DeepField Networks and some of its network provider partners, Wired determined one-third of all Internet users will access content or data residing on at least one AWS cloud.

To cover the various constituencies, Amazon has created two partner categories: technology and consulting. Depending on the requirements they meet, partners will qualify for one of three tiers: registered, standard and advanced. Certified partners will be able to display an AWS logo and will receive a listing in the AWS Partner Directory and $1,000 in credits for service and premium support.

The program is currently in beta and Amazon plans to have it up and running later in the year, though existing partners can prequalify for the standard and advanced tiers now. To do so, partners must submit Amazon's APN Upgrade form.

Posted by Jeffrey Schwartz on 04/18/2012 at 1:14 PM

While public and private clouds are changing the economics and delivery model of computing, IBM hopes to shake up the status quo of how systems are configured and supplied.

Big Blue on Wednesday said it will deliver a new class of hardware and software this quarter that it believes will reshape how servers, storage, networks, middleware and applications are packaged, set up and managed in datacenters run by enterprises, hosting facilities and cloud service providers.

IBM describes PureSystems as "expert integrated systems" because they consist of turnkey, multicore servers that are tightly bundled with storage and network interfaces and feature automated scaling and virtualization. The company will also offer versions of PureSystems bundled with middleware including IBM's WebSphere and DB2 database. And IBM is offering, through its partners, models tuned with various vertical and general-purpose applications. The systems will all include a single management interface.

Gearing up for this week's launch, IBM told partners at its PartnerWorld Leadership conference in New Orleans in late February of the coming rollout of what insiders called "Project Troy."

The company is initially releasing two models. The first is PureFlex System, designed for those looking to procure raw, virtual compute infrastructure in a turnkey bundle. The systems include caching, scaling and monitoring software. "Essentially it's focused on the infrastructure as a service level of integration of all the hardware and system resources, including cloud, virtualization and provisioning, which will take standard virtual images as patterns for effective deployment," said Marie Wieck, general manager of IBM Software Group's Application and Integration Middleware business unit.

The second offering, PureApplication System, builds on top of PureFlex by including IBM's WebSphere and DB2. With the addition of those components, the PureApplication System offers platform-as-a-service capabilities, Wieck said. Both offerings will be delivered as a single SKU and offer a single source of support.

The bundling, or datacenter-in-a-box approach, itself is not what makes PureSystems unique though. Cisco, Dell, Hewlett-Packard and Oracle all offer converged systems. But IBM appears to be upping the ante with its so-called "scale-in" systems design by which all of those components and applications are automatically configured, scaled and optimized.

IBM also has teamed up with 125 key ISVs, including Dassault Systèmes, EnterpriseDB, Fiserv, SAP, SAS, Sophos and SugarCRM, who are enabling their apps to be customized and tailored using what IBM calls "patterns of expertise" that embed automated configuration and management capabilities.

With these embedded technologies, IBM claims customers can get PureSystems up and running in one-third the amount of time it takes to set up traditional systems, while substantially reducing the cost of managing those assets, which it says now can consume on average 70 percent of an organization's operational budget.

Designed with cloud computing principles in mind, IBM said the new PureSystems automatically scale up or down CPU, storage and network resources. IBM offers a common management interface for all of the infrastructure and applications. The company claims the cost of licensing apps is more than 70 percent less than when purchased with traditional systems.

"This is a whole different discussion, they are creating packages that perform like never seen before," said analyst Joe Clabby, president of Clabby Analytics. "In effect, IBM has distilled decades of hardware, middleware and software design and development experience into PureSystems, resulting in solutions with deeply integrated system and automated management capabilities," added analyst Charles King of PundIT, in a research note.

"The company is also offering additional integration related to specific applications and software packages that can be implemented at the factory, so PureSystems are ready out of the box for quick and easy deployment. In essence, IBM has taken what were once (and for most other vendors, still are) highly customized, usually high-priced solutions and made them into standardized SKUs that can be easily ordered, deployed and implemented to address serious existing and emerging IT challenges."

The PureSystems line will also appeal to ISVs and systems integrators that want to deliver their software as a service, according to Wieck. "It's not just the integration of the hardware components that is as compelling as the integrated management across the environments and the resources that are applied and the software necessary to manage those resources," she said.

PureSystems were brought together by a cross-section of IBM's business units, including its Tivoli systems management group, its Rational development tools unit, the software business that develops its WebSphere infrastructure platform and DB2, and its server and storage divisions. IBM acquired the networking components from Blade Network Technologies in 2010.

The systems are also available in a number of configurations, powered by the company's own Power processing platform running Linux or IBM's AIX implementation of Unix, or Intel-based CPUs that can run Linux or Microsoft's Windows Server. Customers can configure the systems with a number of virtualization options including VMware, KVM, Microsoft Hyper-V and IBM PowerVM, as well as any virtual machine that supports the Open Virtualization Format (OVF) standard.

Entry-level systems with 96 CPU cores will start at $100,000. High-performance configurations with up to 608 cores will run into the millions of dollars.

Posted by Jeffrey Schwartz on 04/12/2012 at 1:14 PM

Microsoft today said the All India Council for Technical Education (AICTE) has agreed to deploy Office 365 across 10,000 technical colleges and educational institutions throughout India, making the cloud service available to 7 million students and 500,000 faculty members.

Colleges that are part of AICTE will start using the Live@edu hosted e-mail, Office Web Apps, instant messaging and SkyDrive storage service over the next six months, and ultimately Office 365 as the version for educational institutions rolls out. Office 365 will give students and faculty in colleges across India access to Exchange Online e-mail and scheduling, SharePoint Online and Lync Online.

While Microsoft is touting AICTE as its biggest cloud customer to date, there's only one problem: The company is not making any money on the deal. Do you even call it a deal when the customer isn't paying anything? It's not that Microsoft gave AICTE any special deal. It offers Live@edu free of charge to any K-12 educational institution and all colleges and universities.

"Even though it is provided for free, we see it as a value for Microsoft and for the school system," said Anthony Salcito, Microsoft's VP of worldwide education, in an interview. "This is allowing the governing body and school leaders to have a communication pipeline to a broad set of students and not only improve collaboration across the system but provide a rich set of tools for students in the cloud. That would be difficult using traditional models [software]."

Of course, both Google and Microsoft have been courting schools to deploy their cloud suites for several years for obvious reasons. The goal: Get students hooked and that's what they'll want to use in the workplace in the future.

Also, there is some revenue potential for those educational institutions choosing premium editions of Office 365. Microsoft's Plan A3 includes a copy of Office Professional Plus (the premises version of the software that works with Office 365) and other features, including voicemail (priced at $2.50 for students and $4.50 for faculty and staff). And for those wanting voice capability, Plan A4 includes everything in the A3 plan plus voice capability (priced at $3.00 for students and $6.00 for faculty and staff). Microsoft last month revised its pricing for those services.

Posted by Jeffrey Schwartz on 04/12/2012 at 1:14 PM

More than a year after promising to offer a public cloud service, Hewlett-Packard today is officially jumping into the fray.

After an eight-month beta test period, the company said its new HP Cloud Services, a portfolio of public cloud infrastructure offerings that includes compute, object storage, block storage, relational database (initially MySQL) and identity services, will be available May 10. As anticipated, HP Cloud Services will come with tools for Ruby, Java, Python and PHP developers. While there is no shortage of infrastructure as a service (IaaS) cloud offerings, HP's entrée is noteworthy because it is one of the leading providers of computing infrastructure.

The IT industry is closely watching how HP will transform itself under its new CEO Meg Whitman, and the cloud promises to play a key role in shaping the company's fortunes. As other key IT players such as Dell, IBM and Microsoft also offer public cloud services, critics have pointed to HP's absence. The rise of non-traditional competitors such as Amazon Web Services and Rackspace in recent years has also put pressure on HP, whose servers, storage and networking gear will become more dispensable to its traditional customer base as those customers move to cloud services.

Considering more than half of HP's profits come from its enterprise business, the success of HP Cloud Services will be important to the company's long-term future of providing infrastructure, presuming the growing trend toward moving more compute and storage to public clouds continues. By the company's own estimates, 43 percent of enterprises will spend between $500,000 and $1 million per year on cloud computing (both public and private) through 2020. Of those, nearly 10 percent will spend more than $1 million.

HP Cloud Services will also test the viability of the OpenStack Project, the widely promoted open source cloud computing initiative spearheaded by NASA and Rackspace and now sponsored by 155 companies with 55 active contributors. Besides Rackspace, HP is the most prominent company yet to launch a public cloud service based on OpenStack. Dell has also pledged support for OpenStack.

But with few major implementations under its belt, the OpenStack effort last week came under fire when onetime supporter Citrix pulled back in favor of its recently acquired CloudStack platform, which it contributed to the Apache Software Foundation. While Citrix hasn't entirely ruled out supporting OpenStack in the future, the move raised serious questions about OpenStack's current readiness.

Biri Singh, senior VP and general manager of HP Cloud Services, told me he didn't see the Citrix move as a reason for pause. Rather, he thought Citrix's move will be good for the future of open source IaaS. "You are seeing the early innings of basic raw cloud infrastructure landscape being vetted out," Singh said. "I think a lot of these architectural approaches are very similar. CloudStack is similar to the compute binding in OpenStack, which is Nova. But OpenStack has other things that have been built out and contributed [such as storage and networking]."

The promise of OpenStack is its portability, which will give customers the option to switch between services and private cloud infrastructure that support the platform. "Being on OpenStack means we can move between HP and Rackspace or someone else," says Jon Hyman, CIO of Appboy, a New York-based startup that has built a management platform for mobile application developers.

Appboy is an early tester of HP Cloud Services. Hyman said he has moved some of Appboy's non-mission critical workloads from Amazon to HP because he believes HP will offer better customer service and more options. "With Amazon Web Services, the support that you get as a smaller company is fairly limited," Hyman said. "You can buy support from Amazon but we're talking a couple of hundred dollars a month at least. With HP, one of the big features they are touting is good customer support. Having that peace of mind was fairly big for us."

The other compelling reason for trying out HP Cloud Services is the larger palette of machine instances and configurations. For example, Hyman wanted machines with more memory but didn't require any more CPU cores. However, to get the added RAM with Amazon, he had to procure the added cores as well. With HP, he was able to add the RAM without the cores.

Nonetheless, Amazon is responding by once again lowering its overall prices and offering more options, Hyman has noticed. "They are driving down costs and making machine configurations cheaper," he said. Hyman is still deciding whether to move more workloads from Amazon to HP and will continue testing its service. "Depending on how we like it we will probably move over some of our application servers once it is more battle tested," he said.

Today's launch is an important milestone for HP's cloud effort. Singh said the company is emphasizing a hybrid delivery model, called HP Converged Cloud, consisting of premises-based private cloud infrastructure, private cloud services running in HP datacenters and now the public HP Cloud Services. Customers can manage the cloud offerings themselves or have the company's services business manage them.

"It's not just raw compute infrastructure or compute and storage, it's a complete stack," Singh said. "So when an enterprise customer says 'I have a private cloud environment that is managed by HP, now if I need to burst into the public cloud for additional bandwidth or if I need to build out a bunch of new services, I can essentially step into that world as well.' What HP is trying to do is deliver a set of solutions across multiple distributed environments and be able to manage them in a way that is fairly unique."

With its acquisitions last year of Autonomy and Vertica, HP plans to offer a suite of cloud-based analytics services that leverage Big Data, Singh said. On the database side, HP is initially offering MySQL for structured data and plans NoSQL offerings as well.

Initially HP Cloud Services will offer Ubuntu Linux for compute with Windows Server slated to come later this year.

A dual-core configuration with 2 GB of RAM and 60 GB of storage will start at 8 cents per hour. Singh characterized the pricing of HP Cloud Services as competitive but said HP doesn't intend to wage a price war with Amazon (although since we spoke, HP announced it is offering 50 percent off its cloud services for a limited time). "I think the market for low cost WalMart-like scale clouds is something a lot of people out there can do really well, but our goal is to really focus on providing a set of value and a set of quality of service and secure experience for our customers that I think there is definitely a need for," Singh said.
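For a rough sense of what those rates mean on a monthly bill, the short Python sketch below works out the cost of running the entry configuration around the clock at 8 cents per hour, with and without the limited-time 50 percent discount. The 730-hour month and the `monthly_cost` helper are assumptions made for illustration only; they are not HP's billing method, and actual charges may differ.

```python
# Illustrative arithmetic only; not HP's billing logic.
HOURS_PER_MONTH = 730  # assumption: 8,760 hours per year divided by 12


def monthly_cost(hourly_rate_usd, discount=0.0, hours=HOURS_PER_MONTH):
    """Return the approximate monthly cost in dollars for one instance.

    hourly_rate_usd: price per instance-hour (0.08 in the article).
    discount: fractional discount, e.g. 0.50 for the 50 percent promotion.
    hours: hours the instance runs in the month (assumed always on).
    """
    return round(hourly_rate_usd * (1.0 - discount) * hours, 2)


list_price = monthly_cost(0.08)
promo_price = monthly_cost(0.08, discount=0.50)

print(f"List price:  ${list_price:.2f} per month")
print(f"Promo price: ${promo_price:.2f} per month")
```

Under those assumptions, an always-on entry instance comes to a little under $60 a month at list price and about half that during the promotion.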

Singh also pointed out while HP will compete with Amazon, it also sees Amazon as a partner. "We work with them on a bunch of things and they are a customer," he said. "I think there is plenty of room in the market, and we have focused our efforts on people looking for a slightly alternative view on how basic compute and storage is provisioned."

Posted by Jeffrey Schwartz on 04/10/2012 at 1:14 PM

Citrix turned heads Tuesday when it announced it's contributing the CloudStack cloud management platform it acquired last summer from Cloud.com to the Apache Software Foundation with plans to release its own version of that distribution as the focal point of its cloud infrastructure offering.

The bombshell announcement didn't have to state the obvious: Citrix is dumping its previously planned support for OpenStack, the popular open source cloud management effort led by NASA and Rackspace. While Citrix didn't entirely rule out working with OpenStack in the future, there appears to be no love lost on Citrix's part.

While the announcement didn't even mention OpenStack, Peder Ulander, vice president of marketing for Citrix's cloud platforms group, said in an e-mailed statement that OpenStack was not ready for major cloud implementations, a charge that OpenStack officials rejected.

"Our initial plan was to build on top of the OpenStack platform, adopting key components over time as it matured," Ulander noted. "While we remain supportive of the intent behind OpenStack and will continue to integrate any appropriate technologies into our own products as they mature, we have been disappointed with the rate of progress OpenStack has made over the past year and no longer believe we can afford to bet our cloud strategy on its success."

So where does OpenStack fall short? The biggest problem is proven scale in production, Ulander said. "It's been a full year since we first joined OpenStack, and they still don't have a single customer in production," he said. "Despite all the good intentions, the fact remains that it is not ready for prime time. During the same year, CloudStack has seen hundreds of customers go into full production. These customers include some of the biggest brands in the world, collectively generating over $1 billion in cloud revenue today. No other platform comes close."

Jonathan Bryce, chairman of the OpenStack Project Policy Board and co-founder of Rackspace Cloud, told me Tuesday that's simply not true. OpenStack has a number of customers in production, such as Deutsche Telekom, the San Diego Supercomputer Center and MercadoLibre, a large e-commerce site in Buenos Aires that runs about 7,000 virtual machines on OpenStack. "We've got a broad range of users," Bryce said.

Another reason Citrix decided to focus on CloudStack was its compatibility with Amazon Web Services APIs. "Every customer building a new cloud service today wants some level of compatibility with Amazon," Ulander noted. "Unfortunately, the leaders of OpenStack have decided to focus their energy on establishing an entirely new set of APIs with no assurance of Amazon compatibility (not surprising, since OpenStack is run by Rackspace, an avowed Amazon competitor). We do not believe this approach is in the best interest of the industry and would rather focus all our attention on delivering a platform that is 'Proven Amazon Compatible.'"

But in his most stinging rebuke of OpenStack, Ulander questioned whether it will adhere to true open source principles. "Rather than build OpenStack in a truly open fashion, Rackspace has decided to create a 'pay-to-play' foundation that favors corporate investment and sponsorship to lead governance, rather than developer contributions," he said. "We believe this approach taints the openness of the program, resulting in decisions driven by the internal vendor strategies, rather than what's best for customers."

When I asked Bryce about Ulander's "pay-to-play" charge, he said the process to move stewardship from the auspices of Rackspace to an independent foundation is moving along and will be completed this year. Bryce denied any notion that OpenStack had a "pay-to-play" model.

"That's just false," Bryce said. "If you look at the process, look at how many contributors there are to the projects. We are far and away the most inclusive in terms of the number of companies and the number of contributors who are making code submissions. We have large and small startups and big enterprise software companies who are all actively engaged in OpenStack."

But some say OpenStack is still immature relative to CloudStack. Gartner analyst Lydia Leong described it in a blog post as "unstable and buggy and far from feature complete." By comparison, she noted, "CloudStack is, at this point in its evolution, a solid product -- it's production-stable and relatively turnkey, comparable to VMware's vCloud Director (some providers who have lab-tested both even claim stability and ease of implementation are better than vCD)."

Forrester analyst James Staten agreed, adding that OpenStack appeared to be a drag on Citrix. "Ever since Citrix joined OpenStack its core technology has been in somewhat of a limbo state," Staten said in a blog post. "The code in cloudstack.org overlaps with a lot of the OpenStack code base and Citrix's official stance had been that when OpenStack was ready, it would incorporate it. This made it hard for a service provider or enterprise to bet on CloudStack today, under fear that they would have to migrate to OpenStack over time. That might still happen, as Citrix has kept the pledge to incorporate OpenStack software if and when the time is right but they are clearly betting their fortunes on cloudstack.org's success."

Timing was clearly an issue, he pointed out. "For a company that needs revenue now and has a more mature solution, a break away from OpenStack, while politically unpopular, is clearly the right business decision."

Despite Citrix's decision to pull the rug out from OpenStack, Bryce shrugged it off, at least in his response to my questions. "I don't by any means think that it's the death knell for OpenStack or anything like that," Bryce said. "I think the Apache Software Foundation is a great place to run open source projects and we will keep working with the CloudStack software wherever it makes sense."

When it comes to open source cloud computing platforms, OpenStack continues to have the momentum, with well over 155 sponsors and 55 active contributors and adopters, including AT&T, Ubuntu distributor Canonical, Cisco, Dell, Hewlett-Packard, Opscode and RightScale.

Analysts say Citrix had good business reasons for shifting its focus to CloudStack. Among them, Gartner analyst Leong noted, is that although Citrix is effectively giving away most of its CloudStack IP, the move should help boost sales of its XenServer, "plus Citrix will continue to provide commercial support for CloudStack," she noted. "They rightfully see VMware as the enemy, so explicitly embracing the Amazon ecosystem makes a lot of sense."

Do you think it makes sense? Drop me a line at [email protected].

Posted by Jeffrey Schwartz on 04/04/2012 at 1:14 PM

There's a growing trend by providers of public cloud services to offer secure connectivity to the datacenter. The latest provider to do so is Verizon's Terremark, which this week rolled out Enterprise Cloud Private Edition.

Terremark, a major provider of public cloud services acquired last year by Verizon for $1.4 billion, said its new offering is based on its flagship platform but designed to run as a single-tenant environment for its large corporate and government clients that require security, perhaps to meet compliance requirements.

The company described Enterprise Cloud Private Edition as an extension of its hybrid cloud strategy, allowing customers to migrate workloads between dedicated infrastructure and public infrastructure services.

"Our Private Edition solution is designed to meet the strong customer demand we've seen for the agility, cost efficiencies and flexibility of cloud computing, delivered in a single-tenant, dedicated environment," said Ellen Rubin, Terremark's vice president of Cloud Products, in a statement.

Using Terremark's CloudSwitch software, customers can integrate their datacenters with its public cloud services, the company said, adding its software allows customers to migrate workloads to other cloud providers.

Posted by Jeffrey Schwartz on 04/03/2012 at 1:14 PM

Looking to provide tighter integration between its public cloud services and enterprise datacenters, Amazon Web Services has inked an agreement with Eucalyptus Systems to support its platform.

While Eucalyptus already offers APIs designed to provide compatibility between private clouds running its platform and Amazon's Elastic Compute Cloud (EC2) and Simple Storage Service (S3), the deal will provide interoperability blessed and co-developed by both companies.

The move, announced last week, is Amazon's latest effort to tie its popular cloud infrastructure services to private clouds. Amazon in January released the AWS Storage Gateway, an appliance that allows customers to mirror their local storage with Amazon's cloud service.

For its part, Eucalyptus can now assure customers that connecting to Amazon will work with Amazon's consent. "You should expect us to deliver more API interoperability faster, and with higher accuracy and fidelity," said Eucalyptus CEO Marten Mickos in an e-mail. "Customers can run applications in their existing datacenters that are compatible with popular Amazon Web Services such as EC2 and S3."

Enterprises will be able to "take advantage of a common set of APIs that work with both AWS and Eucalyptus, enabling the use of scripts and other management tools across both platforms without the need to rewrite or maintain environment-specific versions," said Terry Wise, director of the AWS partner ecosystem, in a statement. "Additionally, customers can leverage their existing skills and knowledge of the AWS platform by using the same, familiar AWS SDKs and command line tools in their existing data centers."

While the companies have not specified what deliverables will come from the agreement or any timeframe, Mickos said the two are working closely together. Although Eucalyptus does not disclose how many of its customers link their datacenters to Amazon, that connectivity is one of Eucalyptus' key selling points.

Eucalyptus claims it is enabling 25,000 cloud starts each year and is working with 20 percent of the Fortune 100. It counts among its customers the U.S. Department of Defense, the InterContinental Hotels Group, Puma, Raytheon, Sony and a number of other federal government agencies.

While Eucalyptus bills its software as open source, critics say it hasn't developed a significant community, a problem the company has started to remedy with the hiring of Red Hat veteran Greg DeKoenigsberg as Eucalyptus' VP of Community.

Eucalyptus faces a strong challenge from other open source private cloud efforts, including the VMware-led Cloud Foundry effort and the OpenStack effort led by Rackspace Hosting and NASA and supported by the likes of Cisco, Citrix, Dell, Hewlett-Packard and more than 100 other players.

These open source efforts, along with other widely marketed cloud services such as Microsoft's Windows Azure, are all gunning to challenge Amazon's dominance in providing public cloud infrastructure. And they are doing so by forging interoperability with private clouds.

Since Amazon and Eucalyptus have shown no interest in any of those open source efforts to date, this marriage of convenience could benefit both providers and customers who are committed to their respective offerings.

Posted by Jeffrey Schwartz on 03/28/2012 at 1:14 PM

Cloud infrastructure automation vendor Opscode this week said it has received $19.5 million in Series C funding led by Ignition Partners and joined by backers Battery Ventures and Draper Fisher Jurvetson. In concert with the investment, Ignition partner John Connors, the onetime Microsoft CFO, has joined Opscode's board.

The funding will help expand Opscode's development efforts as well as sales and marketing initiatives. The company is aggressively hiring developers at its Seattle headquarters as well as its new development facility in Raleigh, N.C.

Opscode's claim to fame is its Hosted Chef and Private Chef tools, designed to automate the process of building, provisioning and managing public and private clouds, respectively. Chef is designed for environments where continuous deployment and integration of cloud services is the norm. "It's a tool for defining infrastructure once and building it multiple times and iterating through the build process lots of different configurations," said Opscode CEO Mitch Hill in an interview.

The company claims adoption of its open source Chef cookbooks has grown tenfold since September 2010 with an estimated 800,000 downloads. Its open source community of 13,000 developers has produced 400 "community cookbooks," which cover everything from basic Apache, Java, MySQL and Node.js templates to more specialized infrastructures.

In addition to open source environments, there are also Chef cookbooks for proprietary platforms such as Microsoft's IIS and SQL Server. Opscode said some major customers are using Chef, including Fidelity Investments, LAN Airlines, Ancestry.com and Electronic Arts.

"Virtually all of our customers are using more complex stacks. Many are open source stacks or mixed proprietary and open source stacks, and what we see time and time again is the skills to manage these environments don't exist in the marketplace," Hill said.

"The skills are hard to build. You can't go to school to learn how to do this stuff. You have computer science grads who graduate as competent developers and systems guys but infrastructure engineers who know how to operate at the source, scale and complexity level of the world is operating at are hard to find. That's our opportunity. We feel that Chef is a force multiplier for any company that is trying to do [cloud] computing at scale."

Opscode said it will host its first user conference May 15 to 17, with presentations by representatives of Fidelity, Intuit, Hewlett-Packard, Joyent, Ancestry.com and Fastly, among others.

Posted by Jeffrey Schwartz on 03/27/2012 at 1:14 PM

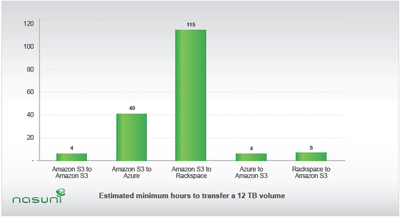

Test results published by cloud storage provider Nasuni this week suggest it's easier to move terabytes of data to Amazon Web Services' S3 service than to Microsoft's Windows Azure or Rackspace's Cloud Files.

Nasuni found that moving a 12 TB volume of data consisting of approximately 22 million files to Amazon S3 took only four hours from Windows Azure and five hours from Rackspace Cloud Files (the company also tested moves between S3 storage buckets). Going the other way, though, took considerably longer -- 40 hours from Amazon's S3 to Windows Azure and just under a week from S3 to Rackspace Cloud Files.

Estimated minimum hours to transfer a 12 TB volume. (Source: Nasuni)
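For readers who want to sanity-check estimates like these, the underlying arithmetic is straightforward. The following is a minimal sketch, assuming decimal terabytes (1 TB = 10^12 bytes) and a constant sustained rate; the function names are illustrative and do not come from Nasuni's report. How Nasuni measured the rates it quotes (per machine or in aggregate) is not spelled out here, so treat the output as back-of-the-envelope only.

```python
# Assumption: decimal terabytes, i.e. 1 TB = 10**12 bytes = 8 * 10**12 bits.
BITS_PER_TB = 8 * 10**12


def transfer_hours(volume_tb: float, rate_gbps: float) -> float:
    """Hours needed to move volume_tb at a constant aggregate rate (Gbit/s)."""
    if rate_gbps <= 0:
        raise ValueError("rate_gbps must be positive")
    seconds = volume_tb * BITS_PER_TB / (rate_gbps * 10**9)
    return seconds / 3600


def implied_rate_gbps(volume_tb: float, hours: float) -> float:
    """Average aggregate rate (Gbit/s) implied by moving volume_tb in hours."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return volume_tb * BITS_PER_TB / (hours * 3600) / 10**9


if __name__ == "__main__":
    # Durations taken from the article; the rates are derived, not reported.
    for label, hours in [("4 hours", 4), ("5 hours", 5), ("40 hours", 40)]:
        rate = implied_rate_gbps(12, hours)
        print(f"12 TB in {label}: about {rate:.2f} Gbit/s on average")
```

Running the helper in both directions is a quick way to see whether a quoted bandwidth and a quoted duration describe the same thing or are measured on different bases.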

Officials at Nasuni found the results surprising and wondered whether Microsoft and Rackspace throttle bandwidth when data is written to their respective services. In the case of Windows Azure, Nasuni was able to reach peak bandwidth rates of 25 Mbps, which was deemed good, but throughput dropped off significantly during peak hours, said Nasuni CEO Andres Rodriguez.

"Our suspicion is that Microsoft is throttling the maximum performance to a common data set, to make sure that the quality of service is maintained to the rest of the customers, who are sharing their piece of infrastructure," Rodriguez said. "That's a good thing for everyone. It's not a great thing for those trying to get a lot of performance out of their storage tier."

Rodriguez acknowledged that this was his own speculation and that Nasuni had not conferred with Microsoft or Rackspace about the issue. A Microsoft spokeswoman denied his theory. "Microsoft does not throttle bandwidth, ever," she said. While Microsoft looked at the report, the company declined further comment, noting it doesn't have "deep insight" into Nasuni's testing methods.

Ironically, Windows Azure performed well in a December test by Nasuni, in which it ranked fastest when it came to writing large files. Amazon, Microsoft and Rackspace were the top performers in that December shootout, which is why they were singled out in the current test. (Also, Amazon is currently Nasuni's preferred storage provider.)

Conversely, Nasuni was able to move files from Windows Azure to Amazon much faster; when moving from Windows Azure to Amazon S3, Amazon received data at more than 270 Mbps, resulting in the 12 TB of data moving in approximately four hours. "This test demonstrated that Amazon S3 had tremendous write performance and bandwidth into S3, and also that Microsoft Azure could provide the data fast enough to support the movement," the report noted.

Nasuni acknowledged that writes are typically slower than reads in most storage systems and external bandwidth is far more limited than internal bandwidth. Also, Nasuni noted in the report explaining the results that the limit it hit could just as easily have been Amazon's write limit as Microsoft's read limit -- both the result of their respective bandwidth capacity and infrastructure.

Engineers at Nasuni also noted they were surprised at the limits of Amazon's EC2 in regard to how many machines a customer can run by default -- only 20 machines (for more you have to contact Amazon). To bypass that limit, Nasuni combined machines from multiple accounts.

Rackspace officials, for their part, were puzzled as to why it took so much time to move data from Amazon to the Rackspace Cloud Files service. "The results were surprising to us but we are making efforts to understand how the test was run and understand where some of that limitation might have been coming from," said Scott Gibson, the company's director of product for big data. Unlike the Microsoft spokeswoman, Gibson acknowledged there are cases when Rackspace does put some limits on requests. But he said the company just completed a significant hardware upgrade in mid-February to alleviate those situations. Nasuni conducted the tests between Jan. 31 and Feb. 8.

Gibson was skeptical that this was a burning problem among Rackspace customers. "I wouldn't say it's horribly common," he said. "We do have [some] customers who move large amounts of data between datacenters. If that level of performance was the norm, we would probably hear about it loud and clear."

Rodriguez insisted he had no axe to grind with either provider. In fact he suggested he'd like to see both companies and others be able to offer the ability to move large amounts of data between providers to give Nasuni the most flexibility to offer higher levels of price performance and redundancy. He emphasized the purpose of the test was to gauge how long it would take to move large blocks of data among the providers.

When conducting the tests, Nasuni moved data between the providers using machines that transferred it over encrypted HTTPS connections. The data was encrypted at the source, and the company did not store any of it on disk in transit. Nasuni scaled from one machine to 40 and saw higher error rates from the providers as the loads increased, though ultimately the transfers were completed after a number of retries, the report noted.
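The report does not describe how Nasuni's machines retried failed requests. As a rough illustration of the general technique, here is a minimal sketch of retrying a transfer step with exponential backoff and jitter. `TransientError` and `with_retries` are hypothetical names, and the delays are arbitrary assumptions rather than anything taken from the test.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a throttling or timeout error from a provider."""


def with_retries(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run operation, retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            delay = base_delay * 2 ** (attempt - 1)
            # Random jitter keeps many workers from retrying in lockstep.
            sleep(delay + random.uniform(0, delay))
    raise ValueError("max_attempts must be at least 1")
```

Backing off between attempts matters most in exactly the situation the report describes: when error rates climb as more machines are added, immediate retries tend to add load rather than relieve it.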

It would be hard to conclude from one test that anyone wanting to move large blocks of data from provider to provider would experience similar results, but it also points to the likelihood that swapping between providers is not going to be a piece of cake.

Posted by Jeffrey Schwartz on 03/22/2012 at 1:14 PM

While software-as-a-service applications such as Cisco WebEx, Google Apps, Microsoft Office 365 and Salesforce.com, among others, are becoming a popular way of letting organizations deliver apps to their employees, they come with an added level of baggage: managing user authentication.

Every SaaS-based app has its own login and authentication mechanism, meaning users have to separately sign into those systems. Likewise, IT has no central means of managing that authentication when an employee joins or leaves a company (or has a change in role). While directories such as Microsoft's Active Directory have helped provide single sign-on to enterprise apps, third parties such as Ping Identity, Okta and Symplified offer tools that provide connectivity to apps not accessible via AD, including SaaS-based apps. But those are expensive and complex, hence typically used by large enterprises.

Ping Identity this week said it will start offering a service in April called PingOne that will provide an alternative and/or adjunct to PingFederate, the company's premises-based identity management platform. PingOne itself is SaaS-based; it will run in a cloud developed by Ping and distributed on Amazon Web Services' EC2 service.

In effect PingOne, which will start at $5,000 and cost $5 per user per month, will provide single sign-on to enterprise systems and SaaS-based apps alike via Active Directory or an organization's preferred LDAP-based authentication platform. Ping claims it has 800 customers using its flagship PingFederate software, 90 percent of which are large enterprises.

But smaller and mid-sized enterprises, though they typically run Active Directory, are reluctant to deploy additional software to add federated identity management, and in fact many have passed on Microsoft's own free add-on, Active Directory Federation Services (ADFS).

With PingOne, customers won't need to install any software, other than an agent in Active Directory that connects it to the cloud-based PingOne. "With that one connection to the switch you can now reach all your different SaaS vendors or applications providers," said Jonathan Buckley, VP of Ping's On-Demand Business. "This changes federation, which has been a one-to-one networking game."

By moving federated identity management to the cloud, organizations don't need administrators who are knowledgeable about authentication protocols like OAuth, OpenID and SAML, Buckley added. "The tools are designed with a junior to mid level IT manager in mind," he said.

Ping is not the first vendor to bring federated identity management to the cloud -- Covisint offers a vertical industry portal that provides single sign-on, as do Symplified and Okta. But Buckley said Ping is trying to bring federated identity management to the masses.

"The model they are pursuing is a very horizontal version of what a number of folks have done in a more vertical space with more limited circles of trust," said Forrester Research analyst Eve Maler. "I think they are trying to build an ecosystem that is global and that could be interesting if they attract the right players on the identity-producing side and the identity-consuming side, namely a lot of SaaS services."

Another effect of these cloud-based identity management services is their potential to lessen the dependence on Active Directory, said Gartner analyst Mark Diodati. PingOne promises to make that happen via its implementation of directory synchronization, Diodati said.

PingOne monitors an enterprise's Active Directory and, when it detects changes, replicates them to PingOne. Specifically, if someone adds a user to an LDAP group, moves him or her to a new organizational unit or gives the user a specific attribute, that change will automatically replicate to PingOne.
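Ping has not said how its agent detects those changes. One common way to approach directory synchronization is to compare two snapshots of the directory and emit only the differences. The sketch below illustrates that idea under stated assumptions: the snapshot layout (user ID mapped to a dictionary of attributes) and the `diff_directory` function are hypothetical and are not PingOne's API.

```python
from typing import Dict, List, Tuple

Entry = Dict[str, object]      # attributes of one user, e.g. {"ou": "Sales"}
Snapshot = Dict[str, Entry]    # user ID -> attributes
Change = Tuple[str, str, Entry]


def diff_directory(previous: Snapshot, current: Snapshot) -> List[Change]:
    """Return the add/update/remove operations that turn previous into current."""
    changes: List[Change] = []
    for user, attrs in current.items():
        if user not in previous:
            changes.append(("add", user, attrs))
        elif attrs != previous[user]:
            old = previous[user]
            # Only changed attributes are sent; a removed attribute maps to None.
            changed = {
                key: attrs.get(key)
                for key in sorted(set(attrs) | set(old))
                if attrs.get(key) != old.get(key)
            }
            changes.append(("update", user, changed))
    for user in previous:
        if user not in current:
            changes.append(("remove", user, {}))
    return changes


if __name__ == "__main__":
    before = {"alice": {"ou": "Sales", "groups": ["crm"]}}
    after = {"alice": {"ou": "Marketing", "groups": ["crm"]}}
    for change in diff_directory(before, after):
        print(change)
```

Sending only the differences keeps the traffic to the hosted service small, which is one reason an on-premises component of some kind is usually involved.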

"The ability to do that directory synchronization, getting identities into the hosted part of it, and also the single sign-on, are extremely difficult to do without on-premises components to pull it off," Diodati said.

Ping stresses that passwords and authentication data are not stored in the cloud. But it stands to reason many companies will have to balance the appeal of simplifying authentication and management of access rights to multiple SaaS-based apps with the novelty of extending that function into the cloud. Do you think customers will be reluctant to move federated identity management to the cloud or will they relish the simplification and cost reduction it promises? Drop me a line at [email protected].

Posted by Jeffrey Schwartz on 03/21/2012 at 1:14 PM