When it comes to integrating disparate information sources and providing master data management (MDM), Informatica is regarded as one of the leading suppliers of software to large enterprises. So it's worth noting that as the company holds its annual Informatica World conference this week in Las Vegas, it's announcing an update to its flagship Informatica Platform that will facilitate the integration of big data with legacy and cloud-based repositories.

Informatica last year dipped its toe into the world of big data by releasing HParser, a connector that can read and write data into and out of a Hadoop-based data store. While that marked a key step into the world of open-source data management, Informatica is now making a push that aims to have broader appeal to its customer base. With the new Informatica 9.5 Platform, scheduled for release next month, the company aims to make Hadoop accessible to a broad set of developers who have little or no Hadoop programming expertise.

The problem is that most Hadoop development today requires hand coding, using interfaces such as the Java MapReduce API or newer scripting languages such as Hive and Pig, an approach that doesn't lend itself to a broad ecosystem, explained Girish Pancha, Informatica's executive VP and chief product officer. "That's unsustainable in the long run. The cost of ownership when you hand code to these systems is going to skyrocket," Pancha said.

With the Informatica 9.5 platform and the new HParser, the company is promising improved data transformation capabilities. HParser parses data without requiring developers to write or test any code, and developers can parse files within a Hadoop-based repository regardless of their format.

"We have solved the puzzle of having tooling that can help you effectively do data integration, data transformation and data parsing without having to write code," Pancha said. "Historically we've done that with IBM, Oracle and Teradata so why not do it for Hadoop?" The move to Hadoop of course stems from growing interest among enterprises in the low-cost, open-source data management platform that is taking the cloud computing and software industry by storm.

With its Hadoop announcement, Informatica said it is supporting a number of key distributions, including Cloudera Enterprise, Hortonworks Data Platform, MapR Technologies' MapR and Amazon Web Services' Elastic MapReduce (EMR). Pancha said Informatica already has a handful of customers that have run some large-scale Hadoop deployments on Amazon's EMR. But he acknowledged that although a number of large enterprises are interested in Hadoop, only a handful have significant efforts under way to date.

The Informatica 9.5 Platform covers all of the company's products, including the Informatica Cloud, its platform as a service offering that provides integration between on-premises systems and cloud-based software as a service (SaaS) offerings.

Leveraging its core MDM competency, the new Informatica 9.5 Platform also adds support for so-called "interactional data." That of course includes content from social media such as Twitter and Facebook, as well as information generated from e-commerce and collaboration sites, where companies increasingly are looking to parse and analyze data coming from those and other non-traditional sources.

"It gives a fourth dimension view of these objects that allow better decisions and insight into customers," Pancha said. "With this interactional data, you can consider the influence a customer has. If they have a large network of friends or are active on social media you may want to treat them differently than if they are not."

In addition to marrying MDM with these new data types, the new Informatica 9.5 Platform introduces the notion of a master data timeline, which keeps track of what's known about an object (say a person or a product) and provides historical context. This allows an individual to query what was known about a specific entity at any point in time, according to Pancha.
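
To make the "what was known at any point in time" idea concrete, here is a minimal Python sketch of a point-in-time lookup over an entity's history. It is not Informatica's implementation or API: the `MasterDataTimeline` class, its `record` and `as_of` methods and the sample customer data are all hypothetical, and the sketch assumes every change is recorded with an effective date.

```python
from __future__ import annotations

from bisect import bisect_right
from datetime import date
from typing import Any


class MasterDataTimeline:
    """Hypothetical sketch of a master data timeline: every change to an
    entity's attribute is kept with its effective date, so the record can be
    reconstructed "as of" any point in time."""

    def __init__(self) -> None:
        # entity_id -> attribute -> (sorted effective dates, matching values)
        self._history: dict[str, dict[str, tuple[list[date], list[Any]]]] = {}

    def record(self, entity_id: str, attribute: str, value: Any, effective: date) -> None:
        dates, values = self._history.setdefault(entity_id, {}).setdefault(
            attribute, ([], [])
        )
        # Keep both lists sorted by date; a later write on the same date wins.
        i = bisect_right(dates, effective)
        dates.insert(i, effective)
        values.insert(i, value)

    def as_of(self, entity_id: str, when: date) -> dict[str, Any]:
        """Return what was known about the entity on the given date."""
        snapshot: dict[str, Any] = {}
        for attribute, (dates, values) in self._history.get(entity_id, {}).items():
            i = bisect_right(dates, when)  # number of versions effective by `when`
            if i:
                snapshot[attribute] = values[i - 1]
        return snapshot


timeline = MasterDataTimeline()
timeline.record("cust-42", "city", "Boston", date(2010, 3, 1))
timeline.record("cust-42", "city", "Seattle", date(2012, 1, 15))
timeline.record("cust-42", "twitter_followers", 1200, date(2011, 6, 1))

print(timeline.as_of("cust-42", date(2011, 1, 1)))   # {'city': 'Boston'}
print(timeline.as_of("cust-42", date(2012, 5, 17)))  # {'city': 'Seattle', 'twitter_followers': 1200}
```

Keeping old versions instead of overwriting them is what makes the historical query possible; a production MDM hub would also need to distinguish when a fact became true from when the system learned it, which this sketch deliberately ignores.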

In July, Informatica will release the beta of an update to the new platform, dubbed Informatica 9.5.1, which is slated to ship by year's end. The new release will target information lifecycle management (ILM) with improved data management capabilities. Among other things, it will offer improved compression, making it better suited, and more affordable, for data archiving.

This week's launch of the new Informatica platform follows the release two weeks ago of Informatica Cloud Spring 2012. While the company for some time has offered cloud integration connectors to popular SaaS and premises-based applications and repositories, the company is now extending its outreach to developers and partners.

To accomplish that, Informatica released its Cloud Connector Toolkit, designed to let partners develop their own tools and connectors and offer them on Informatica's marketplace, as well as Cloud Integration Templates, aimed at ISVs that require commonly used integration connectors.

Also, Informatica will announce partnerships in the coming months to extend its marketplace of connectors to the ISV community. Thus far, Informatica has partnerships with Astadia, GoodData, MicroStrategy, Newmarket International, Silverline, TargetX, Ultimate Software and Xactly.

Posted by Jeffrey Schwartz on 05/17/2012 at 4:59 PM

Opstera, a startup that launched in January, is rolling out a free cloud app monitoring tool that will initially measure the quality of service (QoS) of Microsoft's Windows Azure platform and ultimately that of other platform as a service (PaaS) offerings.

The Bellevue, Wash.-based company's CloudGraphs will run continual workload tests against Windows Azure, scanning the various Windows Azure datacenters throughout the world roughly every 15 minutes. The online tool will measure latency and other service-level characteristics, drilling into specific datacenters and into services offered as part of the Windows Azure platform, such as compute, storage and the content delivery network. Its dashboard will provide real-time and historical information.

Paddy Srinivasan, Opstera's CEO, said the company is offering the service free in hopes of building community support for its monitoring technology. "We have gotten great feedback from the Azure influencer community and the MVPs, and we feel that if we open it up to the community they can build around it and start contributing more sophisticated tests using our infrastructure," Srinivasan said. "We want to increase community contributions so we are opening it up so the tests become more sophisticated and meaningful."

Srinivasan hopes that building such a community will fuel demand for the company's commercial offerings. Its initial service, AzureOps, monitors more than 100 million Windows Azure metrics per month, according to the company. AzureOps provides a detailed view of complete cloud solutions, including third-party services running atop Azure.

Asked why Windows Azure customers would prefer such a service to Microsoft's own management offerings including the recently released System Center 2012, Srinivasan said AzureOps is completely cloud-based, meaning it doesn't require an investment in the software. But more importantly, it's able to monitor Azure metrics that System Center can't at this point, he noted.

The company was formed by the founders of Cumulux, Microsoft's 2011 Cloud Partner of the Year. Former members of Microsoft's Windows Azure team, they launched Cumulux in 2008 and sold its consulting and app deployment business to Aditi Technologies. After that deal closed late last year, the Cumulux founders launched Opstera to focus on application performance management.

So far, the company claims it has 150 paying customers for its service, which costs between $50 and $800 per month. The company is backed by bootstrap funding from the Cumulux buyout and $650,000 from some undisclosed angel investors.

Posted by Jeffrey Schwartz on 05/17/2012 at 1:14 PM

Hewlett-Packard has released an open beta of its public cloud, HP Cloud Services, and it already has the support of more than three dozen partners. HP Cloud Services is an infrastructure as a service (IaaS) offering targeted at enterprises, developers and ISVs looking for an off-premises alternative for compute and storage capacity.

Available for testing immediately are HP Cloud Compute, HP Cloud Object Storage (announced earlier this week) and the HP Content Delivery Network, all of which will be offered on a usage basis.

HP's public cloud services clearly aim to compete against the IaaS portfolio offered by industry leader Amazon Web Services. The HP public cloud offerings are based on the open source platform OpenStack, designed to allow interoperability among public cloud providers that support the platform, such as Rackspace, and compatible private cloud infrastructure.

The 37 vendors that announced support for HP Cloud Services include application vendors Otopy, PXL and SendGrid; database suppliers CloudOpt, EnterpriseDB and Xeround; development and testing companies SOASTA and Spirent; management vendors BitNami, CloudSoft, enStratus, Kaavo, RightScale, ScaleXtreme, Smartscale Systems and Standing Cloud; mobile providers FeedHenry and Kinvey; New Relic, which offers a monitoring solution; PaaS providers ActiveState, CloudBees, Corent Technology, CumuLogic, Engine Yard and GigaSpaces; security vendors Dome9 and SecludIT; and storage suppliers CloudBerry Lab, Gladinet, Panzura, Riverbed Technology, SME Storage, StorSimple, TwinStrata and Zmanda.

Rounding out the list are infrastructure management vendor Opscode and billing provider Zuora. For its part, Opscode, which supplies the popular Chef cloud infrastructure management portfolio, said it's offering complete integration with HP Cloud Services to provide infrastructure automation via its Open Source Chef, Hosted Chef and Private Chef solutions. Using Opscode's plug-in for HP Cloud Services, customers can build and manage HP Cloud Compute instances, Opscode said.

Riverbed said its Whitewater cloud storage gateways will work with HP Cloud Object Storage, aimed at those who want to provide disaster recovery alternatives to traditional tape-based backup. And RightScale is adding HP Cloud Services to the portfolio of services and cloud infrastructure its solution can manage. With HP's new cloud service, RightScale now manages six public cloud providers and three private IaaS platforms, including OpenStack, Citrix CloudStack and the Amazon-compatible Eucalyptus.

Another management supplier, Standing Cloud, said HP Cloud Services gives it another option for hosting its marketplace for developers, IT pros and ISVs. The Standing Cloud Marketplace provides on-demand application hosting, installation and management. With security vendors such as Dome9 announcing support for HP Cloud Services, customers will have options for ensuring protection, a key concern among enterprise customers. The Dome9 cloud security service will integrate with HP Cloud Services via an API key, enabling cloud server security and policy-based automation.

There are many other examples, but the point is that HP is trying to come out of the gate with a broad partner ecosystem already lined up.

Do you think HP is going to give Amazon a run for its money? Leave a comment here or drop me a line at [email protected].

Posted by Jeffrey Schwartz on 05/10/2012 at 1:14 PM

Open source vendor Red Hat Wednesday said it plans to extend its platform as a service (PaaS) OpenShift operating environment to enterprises building private and hybrid clouds.

Red Hat's goal is to broaden the use of open source PaaS beyond early adopters: so-called "do it yourself" devops groups that have more autonomy to develop and deploy greenfield apps than many enterprises would allow for mainstream line-of-business applications, where more IT operational controls are in place.

Red Hat later this year will deliver fee-based OpenShift solutions that allow enterprises to build PaaS applications in a variety of languages and frameworks including Java Enterprise Edition, Spring, Ruby, Node.js and others. Red Hat will offer an enterprise edition of OpenShift built with its Red Hat Enterprise Linux, JBoss Middleware and its assortment of programming languages, frameworks and developer tools, company officials said on a Webcast Wednesday.

Jay Lyman, a senior analyst at 451 Research, who was on the Red Hat Webcast, sized the PaaS market at $1 billion this year, a fraction of the $12 billion cloud market. Lyman said he sees the PaaS market growing to $3 billion by 2015. "PaaS is still in the very early stages," he said.

Red Hat last week released the OpenShift Origin code, which consists of the components of OpenShift, to the open source community. That release, along with this week's announcement, sets the stage for a battle between two potent players: Red Hat and VMware, whose Cloud Foundry open source PaaS effort has gained momentum in recent months.

Asked on the Webcast whether developers will have to choose one or the other, Scott Crenshaw, VP and general manager of Red Hat's cloud business unit, said stakeholders will have to make a choice. "We applaud them [VMware] for joining us in leading the charge for an open PaaS. OpenShift and CloudFoundry are competitive and developers have to choose," he said.

In a follow-up interview, Lyman said it is too early to count out either effort. "At this point it will depend on whether you have more Red Hat or VMware infrastructure," Lyman said.

The consulting firm Accenture is emphasizing Red Hat's OpenShift with its clients. Adam Burden, Accenture's cloud apps and platform global lead, said the company has introduced OpenShift to its 40,000 Java developers, noting it was well-received and it didn't require much training.

"We are excited to see the rollout of this PaaS strategy, we've been looking forward to it for some time now," he said. "Many folks are anticipating a rapid adoption of PaaS and we think Red Hat is at the forefront of it." UPDATE: Burden sent me an e-mail saying the following: "Our PaaS practice (actually we call it the Cloud Apps & Platform group) is absolutely building skills/capability on multiple PaaS platforms. Among the different PaaS solutions we are working with are Cloud Foundry and OpenShift in addition to Force.com, Heroku, AWS, Google App Engine and others."

Others believe Cloud Foundry is a better bet. Bart Copeland, CEO of PaaS provider ActiveState, which offers a private PaaS solution called Stackato, is among those who have picked Cloud Foundry.

"Cloud infrastructure and middleware technologies are evolving, but we are still in early days in this space," he said in an e-mail. "ActiveState has committed to Cloud Foundry because it represents the best framework for Stackato, our application platform for creating a private PaaS using any language on any stack on any cloud."

Posted by Jeffrey Schwartz on 05/10/2012 at 1:14 PM

Amazon Web Services will hold its first conference aimed at bringing partners, customers and the AWS team together. Amazon will hold "AWS re:Invent 2012" Nov. 27 to 29 at the Venetian Hotel in Las Vegas. Targeted at developers, enterprise IT and startups, the conference will consist of more than 100 sessions, though the company has not announced specific content.

According to the preliminary conference Web site, AWS is looking to bring together its product and development teams and its systems integration and ISV partners with customers, venture capitalists and press. The announcement comes on the heels of the company's move to expand its partner ecosystem.

Among the topics AWS plans to cover are big data, high-performance computing, Web apps, development of mobile apps and games, and enterprise IT applications, the company said.

"AWS re:Invent is an opportunity for our current and future customers to learn proven strategies for taking advantage of the AWS cloud and take home new ideas that will help them invent within their own businesses and deliver more value to their customers," said AWS worldwide marketing head Ariel Kelman in a statement.

The company is recruiting potential speakers to give presentations. The deadline to propose a presentation is May 31.

Posted by Jeffrey Schwartz on 05/09/2012 at 1:14 PM

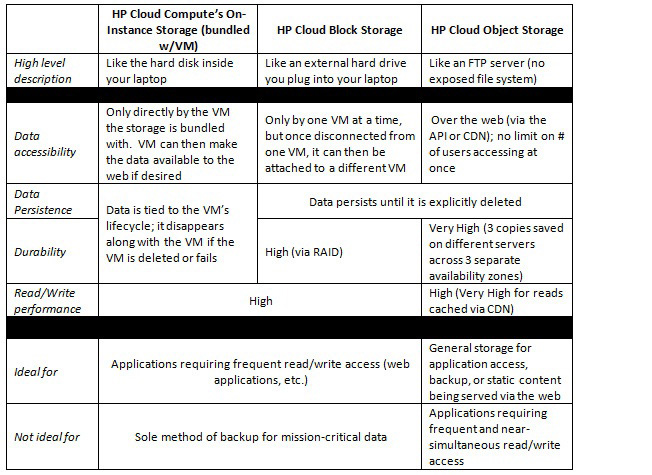

As Hewlett-Packard gets set to make its public cloud compute service available this week, the company is already planning to upgrade the portfolio to include block storage.

HP released the high-performance, high-availability service, called HP Block Storage, to private beta today. The company didn't disclose when it will release a public beta, when the service will be generally available or how much it will cost.

The new service will allow customers to add storage volumes of up to 2TB each and to attach multiple volumes to HP Cloud Compute instances. Customers will also be able to take snapshots of their volumes as point-in-time copies and create new volumes from those snapshots, which can be backed up to HP Cloud Object Storage for added redundancy if needed.

With the current private beta, users must manually create backups, but HP said it will release an API to automate the process. Gavin Pratt, a senior product manager for HP Cloud Services, said in a blog post the service will appeal to enterprise customers requiring high availability. "Using RAID, along with other high availability techniques, your data will be preserved even if an entire hard disk or RAID controller fails," Pratt wrote.

Comparison chart of HP's different cloud storage service options. Source: Hewlett-Packard Company.

"HP's Cloud Block Storage will appeal to those who require additional capacity on top of their compute instances as well as those who want to ensure data from compute instances persist beyond the life of a specific virtual machine," according to Pratt. "Additionally, it's perfect for applications & databases where you need to perform reads and writes concurrently with high IOPS performance," he said.

Posted by Jeffrey Schwartz on 05/08/2012 at 1:14 PM

The list of companies supporting Facebook's Open Compute Project (OCP), an effort to provide open source datacenter design specifications, is growing.

The OCP last week announced the addition of new members Alibaba, AMD, Avnet, Canonical, Cloudscaling, DDN, Fidelity Investments, Hewlett-Packard, Salesforce.com, Quanta, Supermicro, Tencent, Vantage and VMware. They join existing members Arista Networks, Dell, Intel, Goldman Sachs, Rackspace, Red Hat and Synnex.

More than 500 people attended the second OCP Summit, held last week at Rackspace's headquarters in San Antonio. The first summit was held in New York back in November. Since that meeting, the OCP has notched a number of key accomplishments, wrote Frank Frankovsky, director of hardware design and supply chain at Facebook and an OCP board member.

"The momentum that has gathered behind the project -- especially in the last six months -- has been nothing short of amazing," he said. In addition to announcing the new members, Frankovsky said HP, Quanta and Tencent have joined the OCP Incubation Committee, which determines which projects the overall group will support.

Already on the committee's docket are Facebook's design for a storage server, code-named Knox, and "highly efficient" motherboard designs targeted to meet the requirements of financial services firms. The motherboard designs include Roadrunner, the code name for AMD's design, and Decathlete, Intel's offering.

The OCP also said it will bring together its Open Rack server rack design with a similar spec called Project Scorpio, developed by Baidu and Tencent. The goal is to have a common server rack design spec in 2013. Dell and HP both have server and storage system designs that will be compatible with the new spec.

Software providers also announced support for the OCP rack configuration. Among them were VMware, which will certify its vSphere virtualization software to run on OCP-compatible systems, and DDN, which will certify its WOS storage system. In addition, Linux distributor Canonical said it will provide certification.

The consortium also launched the OCP Solutions Provider program, which will let partners and distributors sell Open Compute Project-compatible systems. Among those that said they are seeking to join the program are Avnet, Hyve and ZT Systems, as well as Quanta's QCT and Wistron's Wiwynn.

Posted by Jeffrey Schwartz on 05/07/2012 at 1:14 PM

As VMware's Cloud Foundry effort continues to gain momentum toward creating an open-source platform as a service (PaaS) ecosystem, Red Hat this week re-invigorated its rival OpenShift alternative. But it remains to be seen if Red Hat's bid to rain on VMware's parade will succeed.

Both companies launched their respective projects last year, but VMware appears to have gained the upper hand, lining up a broader mix of partners and more enthusiasm for Cloud Foundry than Red Hat has for OpenShift. Red Hat revived OpenShift on Monday by releasing OpenShift Origin, the components of its OpenShift PaaS platform, to the open source community. Developers can access the code from GitHub.

The move was expected, but the announcement failed to generate much noise. When I talk to various software suppliers, service providers and others looking to migrate their platforms to the cloud, many speak of their interest in, if not intent to support, Cloud Foundry. I have not encountered the same fervor for OpenShift.

"It hasn't had the kind of traction that they would like," said Forrester Research analyst James Staten. "This is true for frankly all of the cloud efforts that have come out of Red Hat. They have all been done in the open source community way but they haven't grabbed the community's attention."

For its part, it looks like Red Hat is trying to position OpenShift as a more open alternative to Cloud Foundry. While not mentioning its rival by name, Red Hat took a subtle dig at VMware's stewardship of Cloud Foundry.

"For developers who want to push and improve PaaS technology, [it's] a real, truly open and participatory open source project that you can pull, read, play with, and push back," wrote Mark Atwood, Red Hat's PaaS Evangelist, in a blog post. "There is a community that you can engage with. Your participation will be welcomed, your bug reports will be acknowledged, your bug fixes will be accepted, and your new code and pull requests will be honored. If you really want to be part of this, you will be a real member of the development process, not just if you work for Red Hat."

With the release of OpenShift Origin, VMware and Red Hat are set to butt heads, said RedMonk analyst Donnie Berkholz, adding that the PaaS market is still emerging, so neither effort is central to either company's revenues. But PaaS is expected to grow: Gartner predicts the PaaS market will reach $707 million this year, jumping to $1.8 billion in 2015.

"If one crushes the other, don't be surprised to see either of these companies jump ship," Berkholz said. "At the end of the day, Red Hat and VMware are going to support whatever makes them money on their core products and services."

While Cloud Foundry has a first-mover advantage, Red Hat has opened a new battle with the release of OpenShift Origin. The coming months will likely tell whether one wins out over the other or both survive as legitimate PaaS options, though the latter could leave open-source PaaS fractured.

"OpenShift was operating at a serious disadvantage up until now, because Cloud Foundry's code has been open-source," Berkholz noted. "Now that OpenShift is truly open, it's got a new chance to build further momentum behind it on an equal footing. Second chances don't come often in life, so Red Hat needs to take advantage of this one -- and I fully expect it will."

Forrester's Staten said it's too early to tell how this will play out. The public PaaS market is still in its formative stages and even further out is private PaaS. Cloud Foundry has picked up some partners in the service provider community, such as Joyent and AppFog, which is creating links to Amazon Web Services. But it's not too late for Red Hat to create enthusiasm for OpenShift and it has a key asset in its JBoss middleware platform.

At this point, Staten has seen a stronger push by VMware to build Cloud Foundry than by Red Hat toward OpenShift. "It's not clear there is the same strategic push or the same priority within the company," Staten said. "Red Hat may try to refute that or point to some things that are different but we haven't seen that so far from them."

It's also interesting to note that both companies have reached out to the OpenStack infrastructure as a service (IaaS) open source initiative based on initial contributions by NASA and Rackspace. Having a rich set of compatible IaaS options would bolster both efforts and support for OpenStack could further solidify it as an alternative to Amazon Web Services.

Staten said popular PaaS providers such as CloudBees, Engine Yard and Heroku have an advantage by running atop an infrastructure as a service cloud. "They have benefited from being on top of infrastructure as service because some parts of your applications may not be appropriate to run in a platform as a service but will be appropriate to run on an infrastructure as a service," he said. "That combination of infrastructure as a service plus platform as a service is a winning combination going forward."

To that end, Red Hat last month announced it has joined the OpenStack project, which has more than 155 members, while Piston Cloud Computing on Monday launched a new open source initiative aimed at letting Cloud Foundry run on OpenStack. VMware said it will cooperate in enabling Piston to develop a "cloud provider interface" that integrates Piston's OpenStack-based Piston Cloud OS with Cloud Foundry.

Piston's code would allow service providers and enterprises to deploy Cloud Foundry on top of OpenStack, making it easier to deploy the two as a complete solution, Staten pointed out, though he warned that Piston hasn't announced a product, just a development effort. Yet if the project leads to a product, it would appeal to large enterprises, he said. "Piston is focused 100 percent on enterprises and highly secure environments only," he said. "Everyone in the private cloud space wants to offer a higher level value and this could be a differentiator for Piston."

VMware detractors would certainly like to see OpenShift succeed. But will it? And what are the repercussions of two strong horses in the open-source PaaS race? Drop me a line at [email protected].

Posted by Jeffrey Schwartz on 05/03/2012 at 1:14 PM

CollabNet, well known by software developers for its commercial distribution of the open-source Subversion version control system and for its application lifecycle management (ALM) platform, is bringing its source code version control technology to the cloud.

The company this week is launching CloudForge, a self-service iteration of its TeamForge ALM platform and source code repository that runs in public and private clouds. CollabNet's ALM technology is used by developers who practice Agile development.

Enterprises typically have used TeamForge on premises or in privately managed datacenters. But as IT organizations look to move more of their non-essential infrastructure off-premises, CollabNet sought to cloud-enable its platform. The effort began in earnest in 2010 with the acquisition of Codesion. At the time, CollabNet launched a multi-tenant version of TeamForge called TeamForge Project.

CloudForge will allow distributed development teams to manage the lifecycle of their code on public cloud services from Amazon Web Services, Joyent, Google (App Engine) and Salesforce.com (Force.com). Through a partnership with VMware, CloudForge will also work with public and private clouds based on Cloud Foundry.

CollabNet describes CloudForge as a development platform as a service, or dPaaS, because it's architected for development, deployment and scaling of applications. "We looked broadly across our 10,000 customers and started to recognize the trend of how public cloud customers are deploying to Amazon Web Services and leveraging our source code repositories on demand, and how our on premises customers are doing the same thing," said Guy Marion, VP and general manager for CollabNet's cloud services and formerly Codesion's CEO. CloudForge is built on the Ruby on Rails-based multi-tenant platform that runs Codesion, Marion said.

CloudForge will appeal to development teams because it provides self-service access to various programming tools and simplified deployment to public and private clouds, according to Marion. At the same time, it's designed to address enterprise concerns by offering role-based security, automated backups and support for the compliance requirements of running code in the cloud. CollabNet's TeamForge and Subversion Edge will also integrate with CloudForge.

Available first is Subversion Edge CloudBackup, which provides archiving, redundancy and migration services for CollabNet's on-prem offerings. Later this year, CollabNet will offer elastic server provisioning, reporting and collaboration services for hybrid cloud services.

The hybrid cloud platform will include a UI that supports collaboration among developers; an activity feed that gives developers real-time streaming updates; reporting for IT pros; and the new AppCenter, for hosting or connecting Agile tools from a provisioning platform called My Services to a number of third-party tools such as Basecamp, Rally, JIRA and Pivotal.

CollabNet is also launching Marketplace, an online store where developers can purchase add-on tools and services from its partners such as Cornerstone, ALMWorks, BrainEngine, SOASTA, New Relic and Zendesk.

Posted by Jeffrey Schwartz on 05/02/2012 at 1:14 PM

Looking to shore up its big data strategy, IBM has formed a partnership with Cloudera, provider of a widely deployed distribution of Hadoop, the open-source data management platform for searching and analyzing structured and unstructured data distributed across large commodity compute and storage infrastructures.

IBM also announced Wednesday it has agreed to acquire Vivisimo, whose federated search and navigation software is used to analyze big data distributed across an enterprise. Terms were not disclosed. IBM made the two announcements together as it seeks to stake its position as a key player in the hotly contested market of big data analytics providers.

Big Blue is gunning to extend its analytics leadership beyond traditional data warehouses and marts, as it looks to let users analyze petabytes of data generated from content management repositories and less traditional sources including social media. IBM's rivals, including Oracle, EMC, Hewlett-Packard, Teradata, Microsoft and SAP as well as upstarts like Splunk, Hortonworks and even Cloudera are investing heavily in offering big data solutions.

IBM said it will integrate the Cloudera Distribution of Hadoop (CDH) and Cloudera Manager with the IBM open source big data platform, called InfoSphere BigInsights, available both as software on premises and in the cloud. The goal is to enable IBM customers and partners to run BigInsights on CDH and Cloudera Manager and to build on top of it.

"Our intention is not to compete directly with Cloudera, it's to build value on top," said David Corrigan, IBM's director of information management strategy. "We struck this partnership with Cloudera and ensured our BigInsights components, advanced components for workload optimization or development environments, would actually leverage the Cloudera open source distribution instead of our own."

Corrigan said Cloudera customers can run IBM's analytics tools, enabling them to extend and take advantage of IBM's big data capabilities, which include enterprise integration, support for real-time analysis of streamed data and extended parallel processing and workload management.

Meanwhile, IBM's move to acquire Pittsburgh-based Vivisimo gives it software that enables federated discovery and navigation of big data. The software lets users search structured and unstructured data (typically massive amounts of content) across disparate systems and analyze that data without moving it, Corrigan explained. Instead of moving the data, the software indexes it, assigns relevance and lets users analyze it. "You don't have to move the data into a new location to get a sense of its value," he said. "Leave it in place, therefore it's a faster time to value."
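The idea of indexing data in place rather than copying it can be illustrated with a generic inverted index. The sketch below is not Vivisimo's software or API; the `SOURCES` dictionary, `build_index` and `search` are hypothetical names, and relevance is a simple TF-IDF score chosen for illustration. The index stores only references (source name plus document ID) along with term counts, so the documents themselves stay where they are.

```python
from collections import Counter, defaultdict
from math import log

# Hypothetical in-place repositories: each maps a document ID to its text.
# In practice these would be separate systems that the indexer reads but
# never copies; plain dicts stand in for them here.
SOURCES = {
    "crm": {"c1": "customer complaint about late delivery",
            "c2": "customer renewal for enterprise contract"},
    "email": {"e1": "late delivery of hardware order",
              "e2": "team lunch on friday"},
}


def build_index(sources):
    """Map each term to {(source, doc_id): term count}, storing only references."""
    index = defaultdict(dict)
    for source, docs in sources.items():
        for doc_id, text in docs.items():
            for term, count in Counter(text.lower().split()).items():
                index[term][(source, doc_id)] = count
    return index


def search(index, query, total_docs):
    """Rank document references by a simple TF-IDF relevance score."""
    scores = defaultdict(float)
    for term in query.lower().split():
        postings = index.get(term, {})
        if not postings:
            continue  # term appears nowhere; contributes nothing
        idf = log(total_docs / len(postings))  # rarer terms weigh more
        for ref, tf in postings.items():
            scores[ref] += tf * idf
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
```

For example, `search(build_index(SOURCES), "customer renewal", 4)` ranks the CRM renewal record `("crm", "c2")` ahead of `("crm", "c1")`, and the caller then fetches the top-ranked documents from their original systems. Keeping only references in the index is what allows analysis without first consolidating the data in a new location.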

Forrester analyst Boris Evelson said in a blog post that data discovery is an important, but only a first, step in the BI and analytics cycle. "Once you discover a pattern using a product like Vivisimo, you may need to productionalize or persist your findings in a traditional DW, and then build reports and dashboards for further analysis using traditional BI technologies."

Posted by Jeffrey Schwartz on 04/26/2012 at 1:14 PM

The personal cloud storage landscape this week grew with Google throwing its hat in the ring -- but not without the competition attempting to rain on its parade.

Google Drive arrived Tuesday, making it the latest personal cloud storage offering to join the likes of Apple's iCloud, Box, Dropbox and Microsoft SkyDrive. Google Drive includes 5 GB of free storage with the option of paying for more. Among several alternatives, Google Drive subscribers can pay $4.99 per month for 100 GB of storage or $49.99 per month for 1 TB.

Users can download the Google Drive app on their PCs, Macs or Android- or iOS-based devices and use it to store and synchronize files, images and videos. Google Drive uses Google Docs to access files and, naturally, it allows users to search their documents and content.

But in anticipation of the Google Drive launch, Box, Dropbox and Microsoft this week jockeyed for position by announcing new capabilities in their respective offerings. Box announced an updated API designed to make it easier for developers to integrate their apps with its service. Dropbox added a feature to its namesake service that lets users share access to files (documents, spreadsheets, folders, etc.) by sending links to designated recipients. And most prominently, Microsoft launched a major new release of its own SkyDrive offering.

I primarily use Dropbox to store documents but put files in there sparingly because the free version I signed up for has a limit of 2 GB. When Microsoft started offering 25 GB for its free SkyDrive service, I couldn't resist setting up an account.

Though I didn't find SkyDrive as easy to use as Dropbox, I did back up all of my personal photos to my SkyDrive account. I assumed SkyDrive would eventually become easier to use but always wondered whether the 25 GB capacity limit would be yanked away. Sure enough, this week both of my assumptions came to pass.

Microsoft improved SkyDrive by adding a new downloadable app for Windows that works much like the Dropbox client. Now SkyDrive appears as another drive on Windows, allowing users to drag and drop files from their PCs to SkyDrive and vice versa. Microsoft added some other niceties such as the ability to synchronize and upload large files and folders up to 2 GB.

But as I had feared, Microsoft cut the 25 GB limit. The new maximum is 7 GB. While that's a major cut, it's still more generous than the 2 GB Dropbox offers and just enough to one-up Google Drive (Box offers 5 GB, as well). The good news is that those of us fortunate enough to have set up SkyDrive accounts before this week get to keep the higher limit, provided we opt to do so. I fail to see why anyone would opt not to, but I presume Microsoft is betting that many will fail to take that action.

Of course, I am tempering my excitement. While Microsoft hasn't said it may take the 25 GB limit away at some point for those now grandfathered, the company hasn't given any assurances it's permanent either, leading me to wonder when the other shoe will drop. Microsoft did not respond to a request for comment.

But in a blog post announcing the changes to SkyDrive, Microsoft said 99.94 percent of its SkyDrive customers use less than 7 GB of capacity. I must admit I was among them, but it was nice knowing the added capacity was available.

While many individuals are likely to set up free accounts with multiple providers, each provider wants to be your cloud storage service of choice. And each wants your organization to coalesce around a single cloud storage provider, where collaboration will take place and where customers will ultimately start paying to store all of their content.

This is not just about a battle for cloud storage. As Google and Microsoft take each other on with their respective Google Apps and Office 365 cloud productivity offerings, their cloud storage services will form the foundation for how a growing number of groups collaborate.

Many larger organizations use Microsoft SharePoint. But Google wants to stop Microsoft from further taking over the market for collaboration, said Forrester analyst Rob Koplowitz in a blog post. "The top deployed workloads for SharePoint are collaboration and content management," Koplowitz noted. "Expect Google Drive to go right after that."

And expect Box (which has gained a strong foothold in numerous large enterprises), Dropbox and a number of other cloud storage providers to go after that, as well.

Posted by Jeffrey Schwartz on 04/25/2012 at 1:14 PM

Looking to incent enterprises to use its Windows Azure cloud service to back up and archive their data, Microsoft today launched a discounted plan that lets customers store up to 41 TB of data for one year for $50,000.

At 8.5 cents per GB, that's a healthy discount over the current per-GB rate of about 12.5 cents, said Karl Dittman, a Microsoft business development director. "Normally a customer would have to purchase a petabyte of storage in order to get this pricing model we are providing for [approximately] 50 TB," Dittman said. That's not the upper limit; pricing will scale for larger amounts of storage.

Microsoft is offering the service with longtime partner CommVault, whose Simpana 9 Express software will allow IT pros to manage their backups and archives. The 41 TB limit covers standard Windows Azure storage. For those using non-geo-replicated storage, the limit is 62 TB.

Simpana 9 Express includes a policy engine and supports data de-duplication, encryption and application awareness for Microsoft apps, said Randy De Meno, CommVault's chief technologist for Windows products.

Aimed at midsized and large enterprises, Simpana provides backup, recovery and archiving of Microsoft's key software offerings, including file services, SharePoint, Exchange and SQL Server, to on-premises systems running Windows Server 2008 and Hyper-V and to the Windows Azure cloud service.

Customers sign up for the service from Microsoft, which is providing free usage of CommVault's software for the year. By CommVault's estimate, it costs $8,000 per TB for primary disk storage on premises.

Less clear is how much it will cost in subsequent years. In a call, Dittman and CommVault officials said that will depend on the customer's configuration, noting the amount of stored data will only increase over time. Dittman did say Microsoft will continue to offer the 8.5-cents-per-GB rate for Windows Azure storage, but customers will have to buy licenses for CommVault's full-fledged Simpana 9 package through the vendor or its channel partners.

Both companies see this as an opportunity for enterprises to use Azure for backup and archiving of Microsoft-based data initially and, ultimately, Linux and Unix data, as well. CommVault is not the first vendor to link its software to Windows Azure; Symantec's Backup Exec and CA's ARCserve offerings also allow customers to back up their data to Microsoft's cloud service.

It remains to be seen whether Microsoft will offer similar pricing for bundles with other storage software providers. But the bigger question is how quickly enterprises will turn to Windows Azure for backup and archiving as a replacement for premises-based storage. The less it costs to see how it works at scale, the better.

Posted by Jeffrey Schwartz on 04/23/2012 at 1:14 PM