Containers vs. Virtual Machines

The release of Windows Server 2016 will bring a new option for building apps based on micro-services that run in Docker and other standard containers. Does that portend the end of the VM?

- By Michael Otey

- 09/06/2016

The rise of containers that run small components known as micro-services has generated a lot of buzz in the trenches of enterprise IT over the past two years because of their potential to change how organizations architect infrastructure and build applications. Containers also make it easier to move to the DevOps model. Although containers have been around for a while in the Linux world, they're new to Windows and are set to debut with this fall's Windows Server 2016 release. Many organizations are looking at embracing containers, especially those with business imperatives that require a more agile approach to responding to the whims of customers, partners, suppliers and even employees. Nearly every major IT player has latched onto the open source container movement driven by Docker Inc.

At the recent DockerCon 16 conference in Seattle, Wash., 4,000 clearly eager attendees learned how containerized micro-services will set the stage for how the next generation of applications is designed, built, deployed and managed. The approach is aimed at organizations and ISVs alike that want to build cloud-native apps or bridge legacy software into this new world.

These emerging micro-services applications are often referred to as Mode 2 apps, which consist of lightweight containers running small apps or networked micro-services. This new style of application is expected to replace the heavyweight monolithic apps that today run in virtual machines (VMs). Does that mean containers will replace VMs? Having attended the two-day DockerCon in late June, I'll explore how containers and containerized applications compare with VMs running traditional applications, and how Windows Server 2016 and a revamped Hyper-V raise this question.

Windows Container Types

First, let's take a quick look at the forthcoming Windows Server Containers. Basically, a container is an isolated space where an application can run without affecting the rest of the system or other containers. Unlike VMs, which are all essentially the same, there are two types of containers in Windows Server 2016:

- Windows Server Containers: Running directly on top of the Windows Server OS, Windows Server Containers provide application isolation through process and namespace abstraction. All Windows Server Containers share the same kernel, network connection and base file system with the container host.

- Hyper-V Containers: More secure than Windows Server Containers, Hyper-V Containers each run in a highly optimized VM. With Hyper-V Containers the kernel of the container host isn't shared with the containers. Instead, each container uses the base OS of its VM. This provides a more secure environment because the containers are isolated from the underlying host, but it carries more overhead.

The Windows Server Containers themselves are compatible with Hyper-V Containers and other Windows Server Containers. The new Nano deployment option for Windows Server is intended as a platform for running containers.

Container and VM Architecture

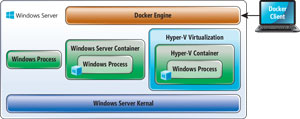

Containers have been called the next generation of virtualization because they provide application abstraction in much the same way that VMs provide hardware abstraction. Instead of virtualizing the hardware like a VM, a container virtualizes at the OS level. Containers run at a layer on top of the host OS and they share the OS kernel. Containers have much lower overhead than VMs and a much smaller footprint. You can see the Windows Server Container and Hyper-V Container architecture in Figure 1.

Figure 1. The Windows Container Architecture

As illustrated, the VMs run on top of a hypervisor that's installed directly on the bare-metal system hardware. Each VM has its own emulated hardware, OS and applications. VMs can be paused, stopped and started. They can be moved between virtualization hosts without any end-user downtime by using technologies such as live migration or vMotion.

Containers are quite different. The container runtime is installed on top of the host OS and every containerized application shares the same base underlying OS. Each container is isolated from the other containers. Unlike VMs where each VM has its own individual kernel and OS, containers share the same kernel, network connection and base file system as the underlying OS. You don't need a whole new and separate OS, memory and storage as you would for a VM. Because containers don't have to emulate physical hardware and the entire OS, they're far smaller and more resource-efficient than VMs.

Container and VM Storage

By now most people are familiar with VM storage. With VMs, one or more virtual disks provide the storage for the VM. There are different types of virtual disks: dynamic, fixed and differencing. In each case the disk is stored as a file on the virtualization host or on a shared storage location. The VM sees its virtual disks as different OS drives.

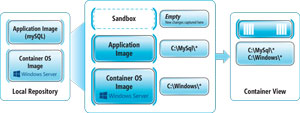

Container storage is quite different. Containers don't use virtual hard drives. They're designed to be stateless, easily created and discarded. Containers use a concept commonly called sandboxing to isolate any disk writes from the underlying host. Once a container has been started, all write actions such as file system modifications, registry modifications or software installations are captured in this sandbox layer (see Figure 2).

Figure 2. Container Storage Overview

In the center of Figure 2 you can see a running container built from two separate container images. The container would see these as separate directories. These images are unchanged when the container runs. All of the container changes are captured in the sandbox and by default would be discarded when the container is stopped.
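
The layering described above can be sketched as a toy model in Python. This is only an illustration of the idea, not how Windows implements it: the `Container` class, the dictionaries standing in for image layers and the file paths are all hypothetical. Reads fall through the sandbox to the read-only images, writes land only in the sandbox, and stopping the container throws the sandbox away.

```python
from collections import ChainMap
from types import MappingProxyType


class Container:
    """Toy model of a container: read-only image layers plus a writable sandbox."""

    def __init__(self, *image_layers):
        self._sandbox = {}  # captures every write made while the container runs
        # ChainMap searches its maps left to right, so the sandbox shadows the
        # images, and later image layers shadow earlier ones.
        self._view = ChainMap(self._sandbox, *reversed(image_layers))

    def read(self, path):
        return self._view[path]

    def write(self, path, data):
        self._sandbox[path] = data  # the image layers are never touched

    def stop(self):
        self._sandbox.clear()  # default behavior: changes are discarded


# Hypothetical image contents, wrapped so they cannot be modified.
os_image = MappingProxyType({r"C:\Windows\system.ini": "base settings"})
app_image = MappingProxyType({r"C:\app\app.exe": "v1"})

first = Container(os_image, app_image)
second = Container(os_image, app_image)  # images are shared, sandboxes are not

first.write(r"C:\Windows\system.ini", "patched settings")
seen_while_running = first.read(r"C:\Windows\system.ini")
seen_by_other = second.read(r"C:\Windows\system.ini")

first.stop()
seen_after_stop = first.read(r"C:\Windows\system.ini")
```

In this sketch the first container sees its own change only while it runs, the second container never sees it, and the shared images stay exactly as they were.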

That all sounds great, but what if you want to persist the changes made from a container? There are a couple of ways that storage changes are persisted using containers. You can save your container with its changes as a new image or you can mount an existing directory from the host on the container.

You can mount a volume using the -v parameter of the docker run command. This makes all files in the host directory available in the container. Any files created by the container, and any changes made to files in the mounted volume, are stored on the host. You can mount the same host volume to multiple containers. For example, the command docker run -it -v c:\source:c:\containerdir windowsservercore cmd mounts the host directory c:\source at c:\containerdir in the container.

A single file can be mounted by specifying the file name instead of the directory name.
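
If you script container startup, the same command can be assembled programmatically. The following is a minimal Python sketch that only builds the argument list; it does not invoke Docker. The helper name `docker_run_with_mount` is hypothetical, while the flags, paths and image name are the ones used in the command above.

```python
def docker_run_with_mount(host_path, container_path, image, command):
    """Build the arguments for `docker run -it -v host:container image command`.

    Hypothetical helper: it only assembles the list and never calls Docker.
    """
    return ["docker", "run", "-it", "-v", f"{host_path}:{container_path}", image, command]


# The same host directory can be mounted into more than one container by
# issuing the command once per container.
commands = [
    docker_run_with_mount(r"c:\source", r"c:\containerdir", "windowsservercore", "cmd")
    for _ in range(2)
]

# Joined with spaces purely for display.
display = " ".join(commands[0])
print(display)
```

Keeping the arguments as a list rather than one string means the result could be handed to `subprocess.run` unchanged on a host where Docker is installed.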

Container and VM Deployment

The way containers are created is also quite different from VMs. VMs are composed much like physical machines. You create the VM virtual hardware, install an OS on the VM and then install the applications. Changes to the virtual disk files are typically persistent.

In contrast, containers are deployed from images that are stored in the container repository. The container repository can be local or it can be a public host like DockerHub. These images can be reused to create new containers multiple times. DockerHub, the public containerized application registry that Docker maintains, is currently publishing more than 180,000 applications in the public community repository. Figure 2 illustrates how images are stored in a local repository.

Containers typically consist of a container OS image, an application image and the sandbox. The container OS image is the first layer in potentially many image layers that make up a container. This image provides the OS environment and is a subset of the host OS. The container OS image is never changed by the container. It's possible to create as many containers as necessary from the images. Multiple containers can share the same image. Because the container has everything it needs to run your application, it's very portable and can run on any machine that has the same host OS. This enables Windows Server Containers to be easily deployed to any Windows Server 2016 host. The host can be in a VM or it can be bare metal.

Unlike VMs, containers are intended to be stateless and disposable. If you power off the host, the contents of the container are gone. Containers don't have live migration or failover, and because a container isn't an individual server, you don't log into a container.

Container Networking vs. VM Networking

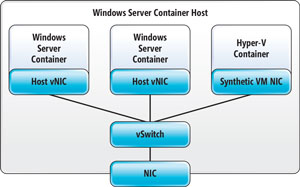

VM networking is a fairly familiar technology to any IT professional working with datacenter infrastructure. Each VM has one or more virtual network adapters. These virtual network adapters connect to a virtual switch and that virtual switch can connect to an internal, private or external network. If it connects to an external network, the VM typically gets its IP address from an external DHCP and all network traffic flows through the host's physical network adapter.

Windows Containers have similar network functionality to VMs. Each container has a virtual network adapter that's connected to a virtual switch. All inbound and outbound traffic for the container flows through the virtual switch and the container's virtual network adapter. The virtual switch can be connected to a private, internal or external network. Containers use the virtual switch on the machine to communicate using either an IP/MAC address combination or a NAT IP address (see Figure 3).

Figure 3. Overview of Windows Server Container Networking

Windows Containers support four different networking modes:

- Network Address Translation Mode: In this mode the container is connected to an internal virtual switch that uses NAT to connect to a private IP subnet. External access to the container is provided through port mapping from the external IP address and port on the container host to the internal IP address and port of the container. By default, when the Docker daemon starts, a NAT network is created automatically.

- Transparent Mode: Here, much like a VM, each container is connected to an external virtual switch that's attached to the physical network. IP addresses are assigned either statically or using the network's DHCP.

- L2 Bridge Mode: In this mode each container is connected to an external virtual switch. Network traffic between two containers in the same IP subnet and container host will be directly bridged. The traffic between two containers on different IP subnets or attached to different container hosts will be routed through the external virtual switch. MAC addresses are re-written on egress and ingress.

- L2 Tunnel Mode: This networking mode is only intended to be used with containers running in the Microsoft Azure cloud. The tunnel mode is like L2 Bridge mode, except all container network traffic is forwarded to the host's virtual switch.
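
To make the port mapping used in NAT mode more concrete, here is a small Python model of the lookup a container host performs for inbound traffic. It is a sketch under stated assumptions rather than a description of the Windows implementation: the `NatTable` class, its method names and all addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    ip: str
    port: int


class NatTable:
    """Toy model of NAT port mapping on a container host."""

    def __init__(self, host_ip):
        self.host_ip = host_ip
        self._mappings = {}  # external host port -> container endpoint

    def publish(self, host_port, container_ip, container_port):
        # Each external port on the host can point at only one container.
        if host_port in self._mappings:
            raise ValueError(f"host port {host_port} is already mapped")
        self._mappings[host_port] = Endpoint(container_ip, container_port)

    def translate_inbound(self, dest_ip, dest_port):
        # Only traffic addressed to the host's external IP on a published
        # port reaches a container; everything else has no mapping.
        if dest_ip != self.host_ip:
            return None
        return self._mappings.get(dest_port)


nat = NatTable(host_ip="192.0.2.10")        # hypothetical external address
nat.publish(8080, "172.16.0.2", 80)         # first container, private subnet
nat.publish(8081, "172.16.0.3", 80)         # second container, same internal port

web1 = nat.translate_inbound("192.0.2.10", 8080)
web2 = nat.translate_inbound("192.0.2.10", 8081)
unmapped = nat.translate_inbound("192.0.2.10", 9000)
```

Both containers listen on the same internal port, and the host tells them apart by the external port. In Transparent mode no such table is involved, because each container has its own address on the physical network.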

Important Facts About Windows Containers

There are a few key things you need to know about Windows Server Containers and Hyper-V Containers:

- You can't run Linux containers on Windows Server. One common misperception is that Docker support in Windows Server enables Linux containers to run on Windows and vice versa. That's not the case. Because containers rely on the OS kernel functionality provided by the host, they cannot cross over to different host OSes. Therefore, at this time you can't run Linux containers on Windows Server 2016 and you can't run Windows Server Containers on Linux.

- Not everything runs in a container yet. Eventually all Windows applications should be able to run in a Windows Server Container. However, at this time the Windows Server support for containers is still in an early phase and not everything can be containerized. Notably, at the time of this writing the full versions of SQL Server cannot run in a container. However, there is a SQL Server Express image. Microsoft has published a list of compatible applications on the "Application Compatibility in Windows Containers" documentation page.

- Docker is the management layer. It's important to realize that Docker is not the Windows Server Container execution environment. Docker is the management tool. The advantage of using Docker is that it provides the same management model for both Windows Server 2016 and Linux. You can also manage Windows Server 2016 Containers with Windows PowerShell.

Putting a Lid on It

Containers offer the promise of enabling organizations to improve application performance, increase the density of apps running on their servers and speed up application deployment. Although containers offer a lot of advantages for application abstraction, they are not about to replace VMs in the Windows Server world just yet. First, not all workloads run on Windows Server Containers. Next, the management tools and third-party management products for VMs are currently much more mature than the container management ecosystem. Further, while you could use bare metal, most companies are likely to deploy containers on VMs.