In-Depth

Storage Spaces Matures in Windows Server 2016

A more resilient Storage Spaces in the forthcoming Windows Server 2016 gives software-defined storage a major boost, promising to usher in more intelligent converged infrastructure.

- By Paul Ferrill

- 03/30/2016

Software versus hardware remains a widely debated topic when it comes to the best way to architect computer systems and networks. As CPUs gain more cores, and with them the ability to handle more computational work, the argument has become even more heated. Performance is no longer an issue with these new CPUs, and the advantages of a software solution have begun to outweigh those of dedicated hardware.

Microsoft has traditionally been known as a software company, and it's pretty obvious where it falls in this discussion. Storage Spaces is Microsoft's software-based data resiliency capability, found in both the client and server versions of Windows. Since its introduction with Windows Server 2012 and Windows 8, Storage Spaces has gained new features and capabilities, the latest of which are available in the current technical preview release of Windows Server 2016.

First Version

In its initial incarnation, Storage Spaces essentially used software to implement a few basic hardware RAID levels. RAID 1, a mirroring scheme, uses a minimum of two disks to store an exact copy of all data. If one of the disks fails, you still have all the data on the second disk. A three-way mirror does the same thing but keeps a copy on each of three disks. Storage Spaces offers the same mirror functionality, including a three-way mirror. The disadvantage of this method is capacity: a two-way mirror built from two disks of the same size yields usable space equivalent to only one disk, and a three-way mirror yields only one-third of its raw capacity.
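To make the capacity cost of mirroring concrete, here is a minimal sketch that computes usable space for a two- or three-way mirror. It assumes equal-sized disks and the generic RAID rule that each copy must sit on a different disk; the function name is hypothetical, and Storage Spaces itself may require more disks than this generic minimum and allocates space in a more involved way.

```python
def mirror_usable_tb(disk_count: int, disk_size_tb: float, copies: int) -> float:
    """Usable capacity of an n-way mirror built from equal-sized disks.

    Hypothetical helper: every block is stored on `copies` different disks,
    so raw capacity is simply divided by the number of copies. Real Storage
    Spaces allocation (slabs, columns, reserved pool space) is more involved.
    """
    if copies not in (2, 3):
        raise ValueError("this sketch models two- or three-way mirrors only")
    if disk_count < copies:
        # Generic minimum: each copy must land on a different disk.
        raise ValueError(f"a {copies}-way mirror needs at least {copies} disks")
    return disk_count * disk_size_tb / copies


print(mirror_usable_tb(2, 4.0, copies=2))  # 4.0 (one disk's worth)
print(mirror_usable_tb(3, 4.0, copies=3))  # 4.0 (one-third of 12 TB raw)
print(mirror_usable_tb(6, 4.0, copies=2))  # 12.0
```

Whatever the disk count, a two-way mirror keeps half of the raw capacity and a three-way mirror keeps one-third; the extra copy buys tolerance of a second disk failure.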

RAID 5 takes a different approach: rather than writing complete copies, it stripes chunks of data across three or more disks and writes parity information computed from those chunks, rotating the parity among the disks. If one disk fails, its contents can be reconstructed from the data and parity on the remaining disks. Storage Spaces calls this type of arrangement a parity space and follows the same basic process of distributing data and parity across separate physical disks. It is also possible to configure Storage Spaces with dual parity for higher reliability, so the space can survive two disk failures. Parity has a space advantage over mirroring because you typically lose only a fraction of the total capacity (one disk's worth in a single-parity layout) rather than half or more. This technique also makes it possible to rebuild a failed disk drive from the data and parity stored on the other disks.
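The rebuild step can be illustrated with the XOR parity classically used for single-parity RAID 5. This is a minimal sketch of the general technique under that assumption, not Storage Spaces' actual on-disk encoding; dual parity requires more elaborate math that isn't shown here. The function names are hypothetical.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length chunks byte by byte."""
    return bytes(x ^ y for x, y in zip(a, b))


def compute_parity(data_chunks: list[bytes]) -> bytes:
    """Parity chunk for one stripe: the XOR of all its data chunks."""
    return reduce(xor_bytes, data_chunks)


def rebuild_missing(surviving_chunks: list[bytes], parity: bytes) -> bytes:
    """Recover the single missing data chunk in a stripe.

    Because x ^ x == 0, XOR-ing the parity with every surviving chunk
    cancels them out and leaves only the lost chunk.
    """
    return reduce(xor_bytes, surviving_chunks, parity)


# One stripe: three data chunks plus a parity chunk, each on a different disk.
stripe = [b"disk", b"two!", b"3rd."]
parity = compute_parity(stripe)

# Simulate losing the disk holding the second chunk and rebuilding it.
recovered = rebuild_missing([stripe[0], stripe[2]], parity)
print(recovered)  # b'two!'
```

Note that the rebuild has to read every surviving chunk in the stripe, which is why parity rebuilds touch all the remaining disks.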

Microsoft's first major upgrade of Storage Spaces appeared in Windows Server 2012 R2 and Windows 8.1. It introduced tiering, which moves frequently accessed data to faster storage such as a solid-state disk (SSD) while migrating infrequently accessed data to slower storage. Also added was a write-back cache, which buffers small, random writes on SSD storage to reduce write latency. One of the most significant new features in that version of Storage Spaces was the ability to rebuild a storage space after a disk failure using spare capacity spread across the pool instead of a single dedicated hot spare.
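The idea behind tiering can be shown with a toy heat-based model: count accesses per extent, then periodically place the hottest extents on the SSD tier. This is a minimal sketch under that assumption; the class and its policy are hypothetical and are not Microsoft's tiering algorithm.

```python
from collections import Counter


class TieredVolume:
    """Toy heat-based tiering model (hypothetical, not Microsoft's algorithm).

    Access counts are tracked per extent; optimize() models a periodic
    optimization pass that places the most frequently accessed extents on
    the SSD tier, up to its capacity, and leaves everything else on HDD.
    """

    def __init__(self, ssd_capacity_extents: int):
        self.ssd_capacity = ssd_capacity_extents
        self.heat: Counter = Counter()
        self.ssd: set[int] = set()

    def access(self, extent_id: int) -> str:
        """Record an access and report which tier served it."""
        self.heat[extent_id] += 1
        return "ssd" if extent_id in self.ssd else "hdd"

    def optimize(self) -> None:
        """Promote the hottest extents to SSD; the rest stay on (or move to) HDD."""
        hottest = [extent for extent, _ in self.heat.most_common(self.ssd_capacity)]
        self.ssd = set(hottest)
        self.heat.clear()  # start a fresh measurement window


vol = TieredVolume(ssd_capacity_extents=2)
for extent in [1, 1, 1, 2, 2, 3]:
    vol.access(extent)
vol.optimize()
print(sorted(vol.ssd))  # [1, 2]
print(vol.access(1), vol.access(3))  # ssd hdd
```

Moving data in a periodic pass rather than on every I/O keeps the cost of migration off the foreground write path; the write-back cache handles short bursts in between.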

Storage Spaces Direct

In technical preview 2 of Windows Server 2016, Microsoft introduced Storage Spaces Direct (S2D), which uses local direct-attached storage shared across a cluster of at least four physical nodes. Slava Kuznetsov, a Microsoft software engineer, introduced the concept in a paper presented at last fall's Storage Networking Industry Association (SNIA) conference in Santa Clara, Calif. Under the covers, S2D uses the same basic resiliency techniques, writing either a mirrored or a parity copy of data to another disk on the same host or across the network to a different host.
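Writing copies to different hosts is what lets a cluster like this survive the loss of an entire server. The placement decision can be sketched as follows, assuming each node is treated as a separate fault domain and that the node with the most free space is preferred; both the policy and the function name are hypothetical, not S2D's actual allocator.

```python
def place_copies(free_gb_by_node: dict[str, int], copies: int, size_gb: int) -> list[str]:
    """Pick `copies` distinct nodes for one chunk of data (hypothetical policy).

    Assumes each node is its own fault domain, so no two copies may share a
    host, and prefers the nodes with the most free space. Mutates the free
    space map to reflect the allocation.
    """
    candidates = [node for node, free in free_gb_by_node.items() if free >= size_gb]
    if len(candidates) < copies:
        raise RuntimeError("not enough nodes with free space for distinct copies")
    chosen = sorted(candidates, key=lambda node: free_gb_by_node[node], reverse=True)[:copies]
    for node in chosen:
        free_gb_by_node[node] -= size_gb
    return chosen


cluster = {"node1": 100, "node2": 80, "node3": 120, "node4": 90}
print(place_copies(cluster, copies=2, size_gb=10))  # ['node3', 'node1']
```

With four nodes and a two-way mirror, losing any single server still leaves one complete copy of every chunk on another host.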

S2D is built on top of the Software Storage Bus (SSB), which essentially implements mesh connectivity between the storage nodes so that each server in the cluster can see the disks on all the other nodes. S2D uses RDMA when the network adapters support it, whether over InfiniBand or over Ethernet with iWARP or RoCE. Cluster Shared Volumes (CSVs) are built on top of this network and spread data across all nodes. Claus Joergensen, a Microsoft principal program manager for S2D, has written a number of blog posts that describe the architecture of S2D in much more detail.

In a post introducing S2D last May, Joergensen noted that administrators can manage it with Microsoft System Center or Windows PowerShell. Microsoft also updated System Center Virtual Machine Manager (SCVMM) and System Center Operations Manager (SCOM) to support S2D, according to Joergensen. The SCVMM upgrade adds bare-metal provisioning and cluster formation along with storage provisioning and management, he noted, while the SCOM upgrade allows it to work with the reimagined storage management model.

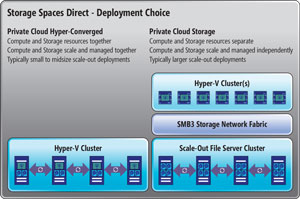

The primary use cases for S2D are private cloud -- on-premises or hosted -- according to Joergensen, who defined two deployment modes (see Figure 1): "private cloud hyper-converged, where Storage Spaces Direct and the hypervisor (Hyper-V) are on the same servers, or as private cloud storage where Storage Spaces Direct is disaggregated (separate storage cluster) from the hypervisor."

Figure 1. The two deployment options described by Microsoft.

While S2D might look a lot like VMware VSAN on the surface, you'll find many differences at the architecture level. Microsoft uses SMB3 for all communication between nodes, along with the high-speed SMB Direct and SMB Multichannel features. S2D can use different types of SSD, including NVMe, and in the future new non-volatile memory, for both storage and write-back caching. In hybrid mode, by contrast, VSAN uses SSD only as a caching tier. Comparisons aside, S2D represents Microsoft's official entry into the hyper-converged space, where compute and storage reside on the same node.

The recently announced version 6.2 of VSAN adds compression, deduplication and erasure coding features that work only with an all-flash configuration across all nodes in the cluster. Erasure coding also requires a minimum of four nodes for RAID 5 and six nodes for RAID 6. A typical hybrid VSAN implementation requires one SSD and from one to seven rotating disks to form a disk group. VSAN 6.2 does not currently support RDMA.
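The appeal of erasure coding is capacity efficiency. The sketch below assumes VSAN's RAID 5 layout is three data fragments plus one parity fragment and its RAID 6 layout is four data plus two parity, and encodes the all-flash and minimum-node requirements described above. The class is hypothetical and only does the arithmetic; it is not a VMware API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErasureLayout:
    """Hypothetical description of an erasure-coded layout."""

    name: str
    data_fragments: int
    parity_fragments: int
    min_nodes: int

    def raw_per_usable(self) -> float:
        """Raw capacity consumed per unit of usable capacity."""
        return (self.data_fragments + self.parity_fragments) / self.data_fragments

    def supported(self, node_count: int, all_flash: bool) -> bool:
        """Erasure coding needs an all-flash cluster and a minimum node count."""
        return all_flash and node_count >= self.min_nodes


RAID5 = ErasureLayout("RAID 5", data_fragments=3, parity_fragments=1, min_nodes=4)
RAID6 = ErasureLayout("RAID 6", data_fragments=4, parity_fragments=2, min_nodes=6)

print(round(RAID5.raw_per_usable(), 2))  # 1.33
print(RAID6.raw_per_usable())  # 1.5
print(RAID6.supported(node_count=5, all_flash=True))  # False
```

For comparison, a two-way mirror consumes 2 units of raw capacity per usable unit, so 100 GB of data needs about 133 GB raw under RAID 5 versus 200 GB mirrored.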

Technical preview 4 added multi-resilient virtual disks and ReFS real-time tiering (see "First Look: Microsoft's Resilient File System Version 2"). A multi-resilient virtual disk has one part that's a mirror and another part that uses erasure coding (parity). This configuration uses tiering to apply the different protection schemes across the different storage types. Write operations always go to the mirror tier, which is the better-performing of the two. ReFS then rotates data from the mirror tier into the parity tier in large sequential chunks, computing the erasure coding as the data moves.
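The write path described above can be modeled in a few lines. In this minimal sketch, every write lands in a mirror tier, and when that tier exceeds a threshold the oldest blocks move to a parity tier as one address-sorted batch, with a simple XOR parity standing in for real erasure coding. The class, its parameters and the thresholds are all hypothetical; this is not how ReFS is implemented.

```python
from functools import reduce


def xor_parity(chunks: list[bytes]) -> bytes:
    """Single XOR parity over equal-length chunks (stand-in for real erasure coding)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


class MultiResilientDisk:
    """Toy mirror tier in front of a parity tier (hypothetical model).

    Simplification: stale parity stripes left behind by overwrites are not
    reclaimed, which a real implementation would have to handle.
    """

    def __init__(self, mirror_capacity_blocks: int, batch_blocks: int):
        self.mirror_tier: dict[int, bytes] = {}  # insertion order = write order
        self.parity_tier: dict[int, bytes] = {}
        self.stripes: list[tuple[list[int], bytes]] = []  # (addresses, parity)
        self.mirror_capacity = mirror_capacity_blocks
        self.batch_blocks = batch_blocks

    def write(self, addr: int, data: bytes) -> None:
        # Every write lands in the faster mirror tier.
        self.parity_tier.pop(addr, None)  # any older copy in parity is now stale
        self.mirror_tier.pop(addr, None)  # re-insert so ordering reflects recency
        self.mirror_tier[addr] = data
        if len(self.mirror_tier) > self.mirror_capacity:
            self.rotate()

    def rotate(self) -> None:
        # Move the oldest blocks as one batch, sorted by address so the parity
        # tier sees a sequential write, computing parity as the data moves.
        batch = sorted(list(self.mirror_tier)[: self.batch_blocks])
        chunks = [self.mirror_tier.pop(addr) for addr in batch]
        self.parity_tier.update(zip(batch, chunks))
        self.stripes.append((batch, xor_parity(chunks)))

    def read(self, addr: int) -> bytes:
        if addr in self.mirror_tier:
            return self.mirror_tier[addr]
        return self.parity_tier[addr]


disk = MultiResilientDisk(mirror_capacity_blocks=4, batch_blocks=2)
for addr in range(5):
    disk.write(addr, bytes([addr]) * 4)
print(sorted(disk.mirror_tier), sorted(disk.parity_tier))  # [2, 3, 4] [0, 1]
```

Batching the rotation is the point of the design: parity is expensive to update in place for small random writes, but cheap to compute over large sequential chunks.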

Industry Adoption

Adoption of Storage Spaces by the big mainstream OEM partners was slow at first. Only in the last year or so have Dell Inc., Hewlett Packard Enterprise (HPE) and Lenovo begun to sell and support servers that work with Storage Spaces. Some of the delay presumably had to do with hardware: the server vendors have historically used some type of hardware RAID controller in their products to provide storage resiliency, and many of these controllers did not support JBOD mode, which the initial release of Storage Spaces required.

Now, both Dell and HPE have been quick to support new features as they appear in the successive preview releases of Windows Server 2016. You can find white papers from both vendors, as well as driver updates specifically for the new Storage Spaces capabilities. This shows an increased level of interest from both the vendors and their customers. The good news is that you won't have to wait long after the final release of Windows Server 2016 for the newest Storage Spaces features to be supported.

Bottom Line

Both Microsoft and VMware have brought these new capabilities into their platforms, which has caused the storage industry in general to scramble to justify its products and their associated cost. While the storage capacity of these platforms might not meet every use case, they definitely have benefits for smaller environments, especially when you consider the management overhead of separate storage systems.

Other vendors in the hyper-converged market, such as Gridstore Inc., SimpliVity Corp. and Nutanix, have generated a lot of sales from this very same concept. They've teamed with server OEMs like Cisco, Dell, Lenovo and Supermicro to deliver the same basic functionality you get from VSAN and will get from Windows Server 2016. The question then becomes one of cost and of how well each platform integrates into your overall IT infrastructure. The good news is that choice and competition tend to drive innovation up and cost down.

About the Author

Paul Ferrill, a Microsoft Cloud and Datacenter Management MVP, has a BS and MS in Electrical Engineering and has been writing in the computer trade press for over 25 years. He's also written three books, including the most recent Microsoft Press title, "Exam Ref 70-413 Designing and Implementing a Server Infrastructure (MCSE)," which he coauthored with his son.