In-Depth

Talking the Talk: When Will Microsoft Take Speech Recognition to the Enterprise?

Speech-recognition technology can boost efficiency and might someday make its mark on the enterprise -- if Microsoft would only promote it.

I have to confess, I've always been something of a daredevil. Zero-gravity flights, shark dives and racing my cigarette boat are all things I've done and enjoyed immensely. In 1994, though, my thrill-seeking caught up with me in a big way.

I decided to travel to Charlotte, N.C., to spend Thanksgiving weekend with my parents. On a particularly nice day while I was there, I borrowed my brother's in-line skates to get some exercise. Now, you have to understand that while I grew up skating, as kids my friends and I never even thought about wearing helmets or pads. Anybody who went skating with safety gear on would've been tagged as the biggest sissy on the block. So, needless to say, I wasn't wearing any safety gear on that fateful day in North Carolina.

Anyway, I wiped out at top speed while going down one of the biggest hills in the neighborhood. The end results were a compound fracture in my arm, a shattered wrist, two broken fingers and a lot of road rash. As you can imagine, I was in a cast for a long time.

My girlfriend (now my wife of 15 years) and I had planned to go to Cancun right after New Year's Day for a week of fun in the sun. My cast wasn't waterproof, so I did the only sensible thing I could think of -- I cut it off myself. The next day, I re-injured my arm playing water polo. OK, it was a stupid thing to do, but I was 20 years old at the time. Enough said.

Around the time my injuries started to heal, I was offered a job as a technology writer (I had previously been a network admin). Immediately after starting the job, I realized something: Typing more than a few sentences really hurt! That was a problem, as my entire job consisted of typing.

I knew I had to do something, and I began to investigate voice-recognition software. I ultimately invested in a product from IBM Corp. called ViaVoice. Unfortunately, speech recognition wasn't ready for prime time. Dictating an article with ViaVoice was more effort than it was worth. Defeated, I decided to suck it up and deal with the pain of typing.

Eventually, my injuries mostly healed. Even though typing isn't nearly as painful for me as it was back then, it can still be uncomfortable. That being the case, I did some research a few years ago to see if computer-based dictation had become any more practical.

I acquired a copy of Dragon Naturally Speaking (version 8) and tried it out. While the software was still far from perfect, it was infinitely better than the IBM software I'd used so long ago.

Today, I use Dragon Naturally Speaking version 10. I've found it produces far better results than version 8 did. The software is so good, in fact, that I dictate the majority of the articles I write -- including this one.

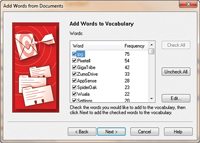


Figure 1. There's often a tradeoff between dictation accuracy and system performance.

Why has there been such a dramatic improvement in dictation software? Several factors have contributed to how well modern dictation software works. For starters, dictation is CPU-intensive. When I tried out the IBM software many years ago, CPUs weren't powerful enough to perform accurate dictation.

Although I ran ViaVoice on a Pentium 133 -- which was then a top-of-the-line machine -- the software required discrete speech. In other words, you had to pause for a second or two after each word to give the software time to recognize it. As CPUs have become more powerful, this requirement has gone away: Dragon and similar voice-recognition products accept continuous, naturally paced speech.

Faster CPUs have improved dictation software in other ways, too. Dictation software must analyze speech data in real time, and 64-bit, multi-core CPUs can process more instructions per second than was possible even a few years ago. Today's dictation software can therefore analyze voice data in greater depth than before, which yields a higher degree of accuracy.

Of course, performing in-depth analysis on speech data has the potential to bog down all but the most robust CPUs. For that reason, Nuance Communications Inc., maker of Dragon Naturally Speaking, built a feature into the software that lets users adjust the CPU load. Lowering the load improves the system's performance, but at the cost of accuracy.

Parts of Speech

Although faster CPUs are probably the single most important factor contributing to the accuracy of speech-recognition software, they certainly aren't the only factor. Dragon Naturally Speaking goes to great lengths to improve accuracy by learning the user's speech patterns.

When you first install the Dragon software, you must train it by reading some excerpts from various books. This helps Dragon to learn what you sound like, and how you pronounce various words. Additionally, Dragon provides you with the option of analyzing your documents and e-mail messages. This helps the software to learn your sentence structure.

Under normal circumstances, a product such as Dragon Naturally Speaking probably wouldn't understand words such as IPSec, Hyper-V or SharePoint. However, when Dragon analyzes your documents, it compiles a list of words that you've used but that aren't in its dictionary. The software shows you each word that it didn't recognize, along with how often you used it. You then have the option of training Dragon to recognize the unknown words.
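The unknown-word report described above boils down to a simple idea: break your documents into words, set aside every word the recognizer's vocabulary lacks, and count how often each one appears. The Python sketch below illustrates only that idea; it is not how Nuance implements the feature. The `KNOWN_WORDS` set and the `find_unknown_words` function are hypothetical stand-ins, and a real recognizer's vocabulary holds many thousands of entries.

```python
import re
from collections import Counter

# Hypothetical stand-in for a recognizer's vocabulary (lowercase entries).
KNOWN_WORDS = {
    "we", "deployed", "on", "the", "server", "and", "for", "uses", "security",
}

# A word is a run of letters/digits, optionally hyphenated (keeps "Hyper-V" whole).
TOKEN = re.compile(r"[A-Za-z0-9]+(?:-[A-Za-z0-9]+)*")

def find_unknown_words(documents, known_words):
    """Return (word, count) pairs for words missing from known_words,
    most frequent first."""
    counts = Counter()
    for text in documents:
        for token in TOKEN.findall(text):
            # Compare case-insensitively, but report the spelling the user typed.
            if token.lower() not in known_words:
                counts[token] += 1
    return counts.most_common()

docs = [
    "We deployed SharePoint on the server and Hyper-V for the server.",
    "The server uses IPSec for security and SharePoint.",
]
for word, count in find_unknown_words(docs, KNOWN_WORDS):
    print(f"{word}: {count}")
# SharePoint: 2
# Hyper-V: 1
# IPSec: 1
```

Because the sketch counts tokens by their original spelling, "SharePoint" and "Sharepoint" would be reported as separate entries; a real product would likely normalize them before asking you to train on them.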

A Voice in the Enterprise

Speech-recognition software is becoming more commonplace in the enterprise. Over the last several years, Microsoft has invested heavily in speech-recognition research, and speech-recognition capabilities have begun to show up in several different Microsoft products. This trend dates back at least to Office XP, and Microsoft Office 2003 also had built-in dictation capabilities.


Figure 2. Dragon Naturally Speaking can learn your writing style by analyzing your documents.

Microsoft did away with the dictation feature in Office 2007 and Office 2010, choosing instead to integrate the feature into the Windows OS. Both Windows Vista and Windows 7 have built-in speech-recognition capabilities.

As with Dragon Naturally Speaking, the Windows Speech Recognition feature allows users to dictate documents and e-mail messages. Users can even use voice commands to control the OS (Dragon also offers this capability). So, if speech-recognition capabilities are built directly into the Windows OS, why don't more people use them? There are several contributing reasons.

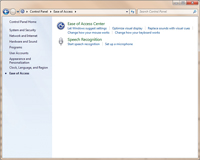

For starters, Microsoft hasn't done a good job of marketing the feature. Not only do you hardly ever hear someone from Microsoft publicly mention Windows speech-recognition capabilities, but the speech-recognition feature is poorly represented within Windows. Think about it for a moment: Have you ever even seen a Speech Recognition icon in Windows Vista or Windows 7? If not, it's because the icon is in the Control Panel's Ease of Access section. Microsoft treats speech recognition as an accessibility feature rather than as a productivity feature.

I haven't heard anything official from Microsoft regarding the future of speech recognition, but there's evidence to suggest it may play a much bigger role in the future. In 2008, Bill Gates gave an interview to BBC News in which he predicted the keyboard and mouse would eventually give way to more intuitive technologies such as touch, vision and speech recognition. This transition has already begun. Windows 7, for example, natively supports speech recognition as well as multitouch displays.

Further evidence that speech recognition may soon become a more prominent Windows feature is that Microsoft has begun creating tools that make it easier to support Windows Speech Recognition in the enterprise. When users enable the Speech Recognition feature, Windows stores data pertaining to each user's unique speech patterns in that user's profile. Microsoft has created the Windows Speech Recognition Profile Tool, which allows users' speech profiles to be backed up and restored. Such a tool will be critical to supporting the Windows Speech Recognition feature in corporate environments.

Additionally, Microsoft has created an entire resource kit dedicated to desktop dictation. There's even a Software Development Kit that lets third-party developers create speech-enabled apps. Clearly, Microsoft is creating an entire arsenal of speech-recognition support software.


Figure 3. Microsoft treats speech recognition as an accessibility feature.

A Vocal Exchange

Although the add-ons that Microsoft has created for the Windows Speech Recognition feature are impressive, the best evidence that speech recognition is about to go mainstream comes from Microsoft Exchange Server.

Exchange Server 2007 and 2010 both contain a feature called Unified Messaging. The Unified Messaging feature allows Exchange to be linked to a corporate telephone system. The primary benefit of this linkage is that it allows voicemail messages and faxes to be routed to a user's Inbox (referred to as a Universal Inbox), where they're displayed alongside normal e-mail messages.

In Exchange 2007, voicemail messages were presented to users as .WAV files attached to normal e-mail messages. Users had the option of playing these files directly through Outlook 2007 or listening to the message through a telephone. In Exchange 2010, Microsoft extended the voicemail feature to include automatic transcription. That way, users can either listen to a voice message, or they can read a transcript of the message that was generated by speech-recognition software running on the Unified Messaging Server.

As handy as the voicemail transcription feature is, it's more than just a convenience. Outlook is designed to index e-mail messages so users can easily search for specific messages. Because Exchange 2010 transcribes voicemail messages, Outlook can now index them the same way it indexes e-mail messages.
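Why does transcription matter for search? Full-text search tools generally build an inverted index that maps each word to the messages containing it, and an audio attachment contributes no words to that index. The Python sketch below shows a generic inverted index to illustrate the point; it is not Outlook's or Exchange's actual indexing code, and the message IDs and text are made up for illustration.

```python
import re
from collections import defaultdict

def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(messages):
    """Build an inverted index: word -> set of IDs of messages containing it."""
    index = defaultdict(set)
    for msg_id, body in messages.items():
        for word in tokenize(body):
            index[word].add(msg_id)
    return index

def search(index, query):
    """Return the IDs of messages that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results

# Hypothetical mailbox: one e-mail, one transcribed voicemail, and one
# voicemail that exists only as audio (no text to index).
messages = {
    "email-1": "Budget review moved to Friday",
    "voicemail-1": "Hi, it's Dana. Call me about the budget review before Friday.",
    "voicemail-2": "",  # .WAV attachment only, nothing for the indexer to read
}

index = build_index(messages)
print(sorted(search(index, "budget Friday")))  # ['email-1', 'voicemail-1']
```

The transcribed voicemail turns up right next to the e-mail, while the audio-only message can never match a query, no matter what was said in it.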

Even though voicemail transcription is new to Exchange 2010, Exchange 2007 also included a speech-recognition engine. This engine was exposed through a feature called Outlook Voice Access, which also exists in Exchange 2010.

Outlook Voice Access allows users to verbally interact with their Exchange mailboxes. Users dial an access number from any telephone, enter their extension numbers and a PIN, and they're connected to Exchange. Once connected, users can listen to or even verbally compose e-mail messages. E-mail messages composed over the phone are not transcribed, though; they're attached to standard messages as .WAV files.

Of course, history is filled with stories of technical innovations that people thought were good ideas but never really caught on. And even though Microsoft is including speech-recognition capabilities in its products, there are no guarantees that the technology will become widely used. Even so, I tend to think that speech-recognition technology isn't just something Microsoft is trying to push on its customers, but something that has practical applications.

What Does the Future Hold?

So, what will happen with speech-recognition technology? It's hard to say. Although the technology is beginning to mature, it remains to be seen whether it will be widely adopted. As someone who uses speech-recognition software daily, though, I find the technology both practical and reliable. As such, I suspect it's only a matter of time before speech-recognition software gains wider acceptance in the enterprise.