In-Depth

Microsoft's Massive Azure Extension into the Enterprise

Now that Windows Server 2016 is available, Microsoft says IT pros and developers can build and extend containerized workloads and applications to its network- and compute-enhanced Azure public cloud -- and vice versa.

Since the launch of Microsoft Azure in early 2010, Microsoft has consistently emphasized its goal of bringing parity between the datacenter and the cloud, a prospect easier said than done, especially because infrastructure services didn't arrive until years later. From the beginning, Microsoft underscored hybrid clouds as the way to get there, starting with Windows Azure Connect, a tool allowing connectivity from Microsoft-based endpoints to its public cloud. The company took a further step with the release of Windows Server 2012, which it dubbed the Cloud OS for its ability to bridge datacenter workloads to Azure. While Microsoft would like to see organizations move the vast majority of their workloads to Azure over time, the company determined the server OS had to go beyond merely letting customers move existing applications and data to Azure. The next server OS needed a runtime environment, multitenant architecture attributes, security, and the ability to run virtual machines (VMs) and applications on-premises and in Azure with the same properties, all of which became the core focus of Windows Server 2016.

After an extensive two-year preview cycle consisting of five beta releases, Microsoft has finally delivered Windows Server 2016, a much more extensible version of the OS for hybrid cloud environments, bringing it closer to the vision outlined in 2010. Not to be mistaken for next year's anticipated launch of Azure Stack, Windows Server 2016 offers the best path for those looking to transition or share existing and new workload and application stacks to Azure.

"We think of Windows Server 2016 in many ways as the edge of our Azure cloud, and one of the things that we recommend you think of is Azure as the edge of all your on-premises servers," said Scott Guthrie, executive VP of Microsoft's Cloud and Enterprise group, announcing the official release of the OS during the opening keynote session at the Ignite conference in Atlanta. "Windows Server 2016 is a major enhancement of Windows Server. It's cloud-ready and incorporates a lot of the deep learnings that we've had running our own cloud with Azure, including the core capabilities that you need to run a software-defined datacenter."

Many of the new features in Windows Server 2016, outlined in various first-look articles in Redmond magazine over the release cycles of the technical previews, include support for software-defined network (SDN) infrastructure, more resilient Storage Spaces, support for Windows Server and Hyper-V containers, multi-tenant security via the Shielded Hyper-V VM feature, and the Nano Server headless deployment configuration option, which provides a much smaller server OS footprint that allows you to source packages from repositories on either a local path or from the cloud.

Microsoft and others believe the Nano Server option -- and similar lightweight configurations such as Server Core -- could over time become the server deployment method of the future. According to Microsoft, the Nano Server option offers higher density and more efficient use of OS resources. This is important when configuring private cloud infrastructure, notably clustered Hyper-V, storage and core networking, or when deploying an application platform for running cloud-scale apps based on containers and micro-services architectures. Despite the potential of the Nano Server configuration option, its usefulness is limited for now: most commercial applications can't currently run on it because it lacks some critical APIs, as explained by Redmond contributor Brien Posey, though he noted there are some tools that IT pros can use as a shim for some applications.

Azure-Based Windows Server Management

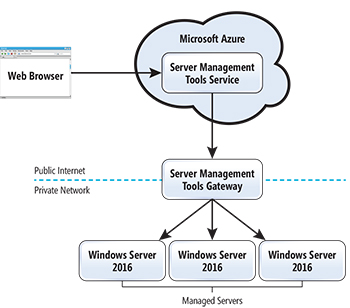

Most noteworthy -- and a key reason Guthrie describes Windows Server as the edge of Azure and vice versa -- is the new Azure-based Remote Server Management Tools (RSMT) option for Windows Server 2016, which is designed to be used in tandem with the Nano Server deployment option. When we took a first look at the RSMT preview in June, it wasn't clear whether RSMT would be available upon the release of Windows Server 2016, but Microsoft has since confirmed that key components of the tools can be found in the Azure Marketplace. RSMT lets administrators manage any Windows Server 2016 server running on-premises, as well as VMs in Azure. As the Server Core and Nano Server options become more prevalent -- which Microsoft and others predict will become the norm over time -- traditional tools such as Server Manager or Microsoft Management Console (MMC) snap-ins won't work with the headless servers. RSMT uses a gateway architecture to allow for remote management of Windows Server 2016 machines and VMs on-premises. When creating a connection to the machines, administrators also configure and create a connection to the gateway machine (see Figure 1).

Figure 1. The Remote Server Management Tools (RSMT) and Microsoft Azure gateway architecture.

Among the management features now available with RSMT are Device Manager, Event Viewer, Firewall Rules, PowerShell, Processes, Registry Editor, Roles and Features, and Services. In the pipeline are Storage and Certificate Manager, according to Kriti Jindal, a program manager on Microsoft's Windows Server and Services team, speaking in a short video about RSMT.

Bringing Windows Containers to Azure

An equally important new capability Microsoft has brought to Windows Server 2016 is support for the Docker Engine, a commercial runtime environment for the open source container platform. Microsoft announced at Ignite that the Docker Engine will be included free of charge in Windows Server 2016. "This makes it incredibly easy for developers and IT administrators to leverage container-based deployments using Windows Server 2016," Guthrie said.

Among the first customers Microsoft has worked with to demonstrate how organizations might use Docker containers in Windows Server 2016 and Azure is Tyco, a provider of fire safety and security systems that are used by 3 million commercial and residential customers worldwide, with 900 locations in 50 countries and 57,000 employees (not accounting for its just-completed $3.9 billion merger with Johnson Controls Inc.).

Daryll Fogal, the CIO of Tyco, joined Guthrie on stage during the Ignite keynote to explain how his company has started using the Docker engine in Windows Server 2016 to make apps more modular and expedite updates and then allow for modernization of legacy apps using micro-services. The proliferation of new sensors, readers, cameras and Internet of Things (IoT)-type monitoring devices is generating far more data than the existing three-tier, .NET-based security operations management platform (now used by 18,000 Tyco customers) can accommodate, according to a description of its work with Tyco posted by Microsoft.

To provide a more scalable and elastic architecture, Tyco initially migrated the components of the existing application into VMs. However, upon learning that Docker containers would be included in Windows Server 2016, the company determined that containers would be lighter-weight and more portable than VMs. That's because each container is a self-contained package with its own runtime, including the app and all of its dependencies, libraries and configuration components, so it can run in any environment without the overhead of a monolithic app.

When the application was originally built, it didn't have IoT or globalization in mind, according to Fogal. While containerizing its legacy apps provided additional scalability, the plan was to take the legacy applications and refactor them into Azure micro-services, he said. "And then for all practical purposes we have infinite scalability," Fogal explained.

Azure will continue to be a bridge for Tyco, he added. "We've got all these legacy people, legacy applications on on-premises servers, and we need to bridge [them] to Infrastructure as a Service and ultimately to cloud," he said. "And what we've found is that the path that Microsoft has laid out for us has made that very easy."

Tyco officials claim it only took several days to change the .NET code into Docker files and the result is better app availability, along with consistency and control for developers, testers and deployment teams. Also, the DevOps group can push updates faster with minimal or no downtime. Most notably, the newly containerized app, which Tyco calls C-CURE 9000, now allows the company to run it anywhere, including Windows Server and Azure.

Docker Engine Inside Windows Server

Microsoft and Docker first got together more than two years ago and revealed plans to create a commercial version of the Docker Engine that would work in the Azure cloud, Windows Server and Hyper-V. It was a defining decision by Microsoft because it meant casting aside its own container development efforts and joining with the open source community and some of its key rivals, including Amazon Web Services Inc. (AWS), Google Inc., IBM Corp., Red Hat Inc. and VMware Inc.

With the launch of Windows Server 2016, the two companies also announced that the commercially supported version of Docker Engine embedded in the server OS is now included at no additional cost and supported by both companies. Microsoft will be taking the frontline tier 1 and tier 2 support, which will be backed by Docker. Though it's included at no additional charge, the Docker Engine technically isn't part of the Windows Server product or the license, says Mike Schutz, general manager of Microsoft's Cloud and Enterprise division.

"We want to make it available at no additional cost to customers so it really eases their use of the container technologies," Schutz says. "Part of it was our desire and belief that customers can really benefit from the container technologies in Windows, and because Docker is a leader in containerization and they have this Docker Engine capability that was available, in order to help accelerate customers getting the value of the containers in Windows Server, we thought this would be a great partnership."

Tyco's early work with Docker was a key impetus for deciding to extend the integration of Docker Engine with Windows Server 2016, Schutz explains. "They're a proof point and in many ways they were an inspiration for us to create this agreement with Docker because we saw how well they were able to become more agile within their development organization by using Windows Server and Docker together," Schutz says. "Now we're trying to replicate that through the breadth of our customer base."

By working with Docker, Microsoft was able to create the core technologies to enable the creation of both Windows Server and Hyper-V containers. Once created, the Docker Engine provides the ability to orchestrate and manage those containers. "It's really broadly used in the Linux container world, and so what we're doing is allowing organizations that are already using containers to be able to take advantage of Windows Containers in those same environments using the same Docker tooling," Schutz says. "So bringing together the Linux ecosystem with the Windows ecosystem is a great benefit. The other benefit is because Docker has so much experience in containers already, bringing that to the .NET developer world and helping them to rapidly bring some of their applications in the containers using the Docker tooling will help accelerate their usage."

Scott Johnston, COO of Docker, explains the interrelationship between Windows Containers and the Docker Engine. "The Windows kernel has the container primitives and the Docker Engine takes advantage of those primitives," Johnston says. "So when Microsoft says Windows Containers or Hyper-V containers, that's synonymous with Docker Engine containers. The way you take advantage of Windows containers is using the Docker Engine interface."

Integrating Docker Management Tools

In addition to Microsoft offering Docker Engine in Windows Server, Hyper-V and Azure, the company said it will promote the use of Docker Datacenter, the container provider's hybrid cloud development and management platform announced earlier this year. Docker Datacenter for managing Windows Server container-based workloads is slated for imminent release, Docker said.

Available in both companies' respective cloud marketplaces, Docker Datacenter is designed to allow enterprises and service providers to deploy Containers as a Service (CaaS) either on-premises or in a virtual private cloud, providing a managed and secure environment in which developers can build and deploy applications in a self-service fashion. It costs $50 per month per node. Docker Datacenter includes Docker's Universal Control Plane, which embeds the Swarm native clustering tool; Docker Trusted Registry (DTR) 1.4.3, which provides Docker image management, security and an environment for collaboration; and the most recent version of the Docker Engine runtime.

Johnston says Docker Datacenter pairs well with the Microsoft System Center and Operations Management Suite (OMS). "So they're managing the hardware and the hypervisors and Docker Datacenter is managing the applications in the containers on top of the infrastructure," he says. "Microsoft actually produced an OMS monitoring agent for Docker so there's already good integration happening."

Docker Datacenter also works with the Azure public cloud and, back in June, Microsoft Azure CTO Mark Russinovich demonstrated how it can be used to build and deploy applications to the forthcoming Azure Stack on-premises version of the Microsoft public cloud.

New Training Requirements

Like any other version of Windows Server, the latest release will take at least a year, if not several years, before it's commonly a core component of the datacenter. Windows Server 2012 R2 and Windows Server 2008 R2 will remain the cornerstone of customer datacenters for many years to come, though many organizations will start to look to transition from the latter. In part, the rollout of a new server OS is delayed because IT professionals must get up to speed with the new platform, and there's much to learn about Windows Server 2016, experts say.

Garth Schulte, a trainer with CBT Nuggets, underscores the ramp-up IT pros will require for Windows Server 2016 because it brings in so many new concepts not familiar to server administrators, including containers, the Nano Server option and a slew of new Windows PowerShell cmdlets to support these new features and RSMT. Certification also will require more preparation given Microsoft's exams have 25 percent more objectives -- 180 in all compared with 150 before. "The exam is bigger and harder, especially the containers because containers are a whole new world," Schulte says.

Because Schulte is also a Google-authorized trainer, he sees how Microsoft's move to containers will have a significant impact on the refactoring of apps and the development and management of new ones. "Lots of people realize what containers can do in their environment and what it'll do to their workflow and for continuous integration," Schulte says, adding it mirrors how those in Google environments have been building and managing apps. "It's transforming how we do applications and to see that come to the Microsoft world and to see Microsoft folks go through that process, it's fun to watch," he adds. "It's almost like déjà vu, for me."

Azure Stack on the Horizon

If Windows Server 2016 is the edge of the Azure cloud, what does that mean for the forthcoming Azure Stack? Simply put, Azure Stack is the Microsoft cloud. As we reported back in September, Microsoft announced in July that the first iteration of Azure Stack will come on converged systems co-engineered by Microsoft and three partners -- Dell Inc., Hewlett Packard Enterprise (HPE) and Lenovo -- on their respective hardware. The three companies debuted prototypes of their planned systems, which are set for release next summer but will likely be used initially by large enterprises and hosting providers. In the meantime, Windows Server 2016 will make sense for many traditional workloads, Schulte believes. "People aren't going to go all in," Schulte says. "They're going to slowly transition into it."

Nevertheless, there's a lot of curiosity about Azure Stack. Attendees at Ignite crowded around the various displays of the Azure Stack prototypes on the exhibit floor, while technical sessions on the topic were standing-room-only. For its part, HPE disclosed it will offer four-node and eight-node Azure Stack systems built on its popular DL380 servers, with up to 14 cores per node. The company will offer usage-based pricing, says Ken Won, director of Integrated Cloud Solutions at HPE.

Of the three suppliers, only Won would offer a hint to what the systems might cost -- likely $250,000 to $300,000, though he described that as a "guesstimate." Those going with usage-based pricing plans can get one bill from HPE for their datacenter and Azure public cloud consumption, Won says.

HPE provides its Operations Bridge multi-cloud and datacenter management suite to manage Azure Stack, and it will work with its own ArcSight SIEM and security analytics platform. Won said ArcSight, though not part of Microsoft's Azure Security Center marketplace, is fully compatible with Azure, which HPE CEO Meg Whitman has described as the company's preferred public cloud.

"We can pull data, log information, out of Azure to the extent that they publish it, and use it as part of the ArcSight solution to look at all of the data you need to look at to identify any security issues," Won says.

Lenovo showcased its Azure Stack prototype as well, previewing a converged eight-node system running 22 cores per Intel Broadwell-based CPU. The Azure Stack systems were popular among those visiting the booth, says Lenovo Advisory Engineer Michael Miller, noting that the vast majority of those inquiring about the systems represented enterprises of all sizes, primarily from Europe, a region with strict data sovereignty regulations.

"We've talked to a lot of developers who use Azure who are interested in doing their work on-premises and then be able to move it into the public cloud," Miller says. "There's been a lot of interest from health care companies [and] actually some interest from government agencies and at least two transportation agencies. A lot of people want to know pricing, and we've told them that hasn't been worked out, that the system is still in development."

Jim Ganthier, vice president and general manager of engineered solutions for the HPC and cloud units at Dell-EMC, says his company will be "on stage when Microsoft releases Azure Stack," but steered the conversation toward the launch of its new SQL Server 2016 and Exchange Server integrated solutions, only saying that Dell doesn't talk about unannounced products -- though it, too, had a prototype on display at Ignite.

Nearly eight months after issuing the first technical preview of Azure Stack, Microsoft recently released an update to the test version of the software that will ultimately let enterprises and hosting providers replicate the Azure public cloud within their own datacenters -- albeit on a smaller scale.

The second technical preview, commonly referred to by the company and testers alike as TP2, introduces a number of new features and services, covering the entire stack: the Azure Portal, security, compute, network and storage. TP2 also offers added monitoring capabilities within the Azure Portal and, for hosting providers, support for billing and usage monitoring.

The first new feature pointed out by Schutz was the ability to use Microsoft's Key Vault for providing secure management of keys and passwords. "It helps from a key-management perspective and security perspective," Schutz says.

Testers can now also utilize application queuing and federated access to the Azure Marketplace with the ability to deploy select solutions, demonstrated in a session by Jason Zander, which is now available for viewing on demand. Microsoft outlined a complete list of the new Azure Stack features in an online document.

Despite outcry over Microsoft's plan to limit the distribution model of Azure Stack to the three partners, Schutz insists the move is the best way to ensure the consistency the company is aiming to deliver. "What we've found is most private cloud deployments fail because of how complex it is to bring together cloud hardware and cloud software," he says. "So we're still very focused on delivering Azure Stack with integrated systems and over time we'll evaluate how broad we can go in terms of other deployment models, but right now we're very focused on the integrated systems approach."

Azure's Network Boost

One of the big unexpected revelations at Ignite was that Microsoft has quietly upgraded every node in its Azure public cloud with software-defined network (SDN) infrastructure, developed using field-programmable gate arrays (FPGAs).

Described as a massive global SDN upgrade, it means that the Azure public cloud fabric is now built on a 25Gbps backbone -- up from 10Gbps -- with a 10x reduction in latency, which Microsoft believes translates to Azure having the highest-speed network among cloud services. Combined with new GPU nodes, recently made available in the Azure Portal, Microsoft also claims its cloud can function as the world's fastest supercomputer, capable of running artificial intelligence, cognitive computing and even neural network-based applications.
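
As a rough illustration of what the jump from 10Gbps to 25Gbps means in practice, the sketch below computes ideal transfer times for a payload at each line rate. The 100GB payload and the function name are illustrative assumptions rather than figures from Microsoft, and the calculation ignores protocol overhead, congestion and the latency improvement mentioned above.

```python
def transfer_seconds(payload_gigabytes: float, link_gbps: float) -> float:
    """Ideal time to move a payload over a link, ignoring all overhead."""
    payload_gigabits = payload_gigabytes * 8  # 8 bits per byte
    return payload_gigabits / link_gbps

PAYLOAD_GB = 100  # hypothetical payload size, chosen only for illustration
OLD_GBPS = 10     # previous backbone speed cited in the article
NEW_GBPS = 25     # upgraded backbone speed cited in the article

old_time = transfer_seconds(PAYLOAD_GB, OLD_GBPS)
new_time = transfer_seconds(PAYLOAD_GB, NEW_GBPS)
speedup = old_time / new_time

print(f"{OLD_GBPS}Gbps: {old_time:.0f} s")
print(f"{NEW_GBPS}Gbps: {new_time:.0f} s")
print(f"Bandwidth-only speedup: {speedup:.1f}x")
```

Under these idealized assumptions the same payload moves in 32 seconds instead of 80, a 2.5x improvement from bandwidth alone.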

The stealth upgrade started two years ago when Microsoft began installing the FPGAs -- effectively SDN-based processors from Altera, now part of Intel. Microsoft CEO Satya Nadella demonstrated some of the AI supercomputing capabilities the newly bolstered Azure is capable of during his keynote session.

"We have the ability, through the magic of the fabric that we've built, to distribute your machine learning tasks and your deep neural nets to all of the silicon that is available so that you can get performance that scales," Nadella said.

Doug Burger, a networking expert from Microsoft Research, joined Nadella on stage to describe why Microsoft made a significant investment in the FPGAs and SDN architecture. "FPGAs are programmable hardware," Burger explained. "What that means is that you get the efficiency of hardware, but you also get flexibility because you can change their functionality on the fly. And this new architecture that we've built effectively embeds an FPGA-based AI supercomputer into our global hyper-scale cloud. We get awesome speed, scale and efficiency. It will change what's possible for AI."

Burger said Microsoft is using a special type of neural network called a "convolutional neural net," which can recognize the content within a collection of images. Adding a 30-watt FPGA to a server turbocharges it, allowing the CPU to recognize images significantly faster. "It gives the server a huge boost for AI tasks," he said.

Showing a more complex task, Burger demonstrated how adding four FPGA boards to a high-end 24-core CPU configuration can translate the 1,400-page book "War and Peace" from Russian to English in 2.5 seconds. "Our accelerated cognitive services run blazingly fast," he said. "Even more importantly, we can now do accelerated AI on a global scale, at hyper-scale."

Applying 50 FPGA boards to 50 nodes, the AI-based cloud supercomputer can translate 5 billion words into another language in less than one-tenth of a second, according to Burger, amounting to 100 trillion operations per second. "That crazy speed shows the raw power of what we've deployed in our intelligent cloud," he said.
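
To put those figures in perspective, the following back-of-the-envelope sketch derives the implied translation rate from the numbers quoted above. It treats "less than one-tenth of a second" as exactly one-tenth, so the words-per-second result is a lower bound and the operations-per-word result an upper bound; the variable names are illustrative only.

```python
from fractions import Fraction

# Figures as quoted from Burger's demonstration
WORDS_TRANSLATED = 5_000_000_000    # 5 billion words
ELAPSED_SECONDS = Fraction(1, 10)   # upper bound: "less than one-tenth of a second"
OPS_PER_SECOND = 100 * 10**12       # 100 trillion operations per second

# Fractions keep the arithmetic exact (0.1 is not exactly representable as a float)
words_per_second = WORDS_TRANSLATED / ELAPSED_SECONDS
ops_per_word = OPS_PER_SECOND / words_per_second

print(f"At least {int(words_per_second):,} words per second")
print(f"At most about {int(ops_per_word):,} operations per word")
```

By this arithmetic, the demonstration implies at least 50 billion words translated per second, or roughly 2,000 operations spent on each word.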

In an interview, Burger described the deployment of this new network infrastructure in Azure as a major milestone and differentiator for the company's public cloud. "This architecture is disruptive," Burger says, noting it's also deployed in the fabric of the Bing search engine. "So when you do a Bing search, you're actually touching this new fabric."

Karl Freund, a senior analyst at Moor Insights & Strategy, became aware of Microsoft's intense interest in FPGAs five years ago when Microsoft Research quietly described "Project Catapult," outlining a five-year proposal for deploying the accelerators throughout Azure.

Still, it was a surprise that Microsoft actually moved forward with the deployment, Freund says. Freund also emphasized how Microsoft's choice of deploying FPGAs contrasts with how Google is building AI into its cloud using non-programmable ASICs. Google's fixed function chip is called the TPU, the tensor processing unit, based on the TensorFlow machine learning libraries and graph for processing complex mathematical calculations. Google developed TensorFlow and contributed it to the open source community. Google revealed back in May that it had started running the TPUs in its cloud more than a year ago.

The key difference between Google's and Microsoft's approaches to powering their respective clouds with AI-based computational and network power is that FPGAs are programmable and Google's TPUs, because they're ASIC-based, are not. "Microsoft will be able to react more readily. They can reprogram their FPGAs once a month because they're field-programmable, meaning they can change the gates without replacing the chip, whereas you can't reprogram a TPU -- you can't change the silicon," he said. Consequently, Google will have to swap out the processors in every node of its cloud, he explained.

The advantage Google has over Microsoft is that its TPUs are substantially faster -- potentially 10 times faster -- than today's FPGAs, Freund said. "They're going to get more throughput," he said. "If you're the scale of Google, which is a scale few of us can even comprehend, you need a lot of throughput. You need literally hundreds or thousands or even millions of simultaneous transactions accessing these trained neural networks. So they have a total throughput performance advantage versus anyone using an FPGA. The problem is if a new algorithm comes along that allows them to double the performance, they have to change the silicon and they're going to be, you could argue, late to adopt those advances."

So who has the advantage: Microsoft with its ability to easily reprogram its FPGAs, or Google with its faster TPUs? "Time will tell who has the right strategy but my intuition says they are both right and there is going to be plenty of room for both approaches, even within a given datacenter," Freund says.

In either case, Microsoft's explanation of how it is extending Azure -- not only the datacenter region footprint, now up to 30 with six more in the queue, but the massive boost in processing and network throughput now available -- sheds light on how new applications such as AI, conversational computing and advanced analytics will be possible on user devices, in the datacenter and beyond.