vSphere vs. Hyper-V: Hypervisors Battle for the Enterprise

VMware has rolled out its first major new hypervisor platform in three years and introduced some features not yet in Hyper-V.

Since the release of Windows Server 2012 R2, which included the third generation of Hyper-V, the Microsoft hypervisor has reached near parity with the vSphere virtualization platform from VMware Inc. Unquestionably, there are some things vSphere does better than Hyper-V, but there are also areas where Hyper-V excels over vSphere.

And now that VMware has released vSphere 6.0, the first major upgrade of its hypervisor platform in three years, it's time to see how it compares to Hyper-V. Because a feature-by-feature comparison would be too long for an overview, I'll focus on the most important features in the new vSphere 6.0. VMware published a technical white paper with a complete list of new vSphere 6.0 features, which you can download from here.

Scalability

VMware ESXi offers improved scalability over its predecessor. The hypervisor can now scale to support up to 64 hosts in a cluster, double the previous limit of 32 hosts per cluster. A vSphere 6.0 cluster can accommodate 8,000 virtual machines (VMs), a twofold increase over the prior release.

The company has also improved the scalability of individual hosts. A single host can now accommodate up to 1,000 VMs, and supports up to 480 logical CPUs and up to 12TB of RAM. By comparison, Windows Server 2012 R2 Hyper-V also supports up to 64 nodes per cluster, and each cluster can accommodate up to 8,000 VMs. Individual Hyper-V hosts can accommodate up to 320 logical processors and up to 4TB of RAM, and a single Hyper-V server can host up to 1,024 running VMs.
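To make the comparison easier to scan, the limits quoted above can be lined up side by side. The following Python sketch is only a convenience for comparing the figures cited in this article; the dictionary keys and the `compare` helper are my own naming and don't correspond to any VMware or Microsoft API.

```python
# Limits as quoted in this article (not retrieved from any vendor tool or API).
LIMITS = {
    "vSphere 6.0": {
        "hosts_per_cluster": 64,
        "vms_per_cluster": 8000,
        "vms_per_host": 1000,
        "processors_per_host": 480,
        "ram_tb_per_host": 12,
    },
    "Hyper-V 2012 R2": {
        "hosts_per_cluster": 64,
        "vms_per_cluster": 8000,
        "vms_per_host": 1024,
        "processors_per_host": 320,
        "ram_tb_per_host": 4,
    },
}


def compare(limits):
    """Return {metric: name of the platform with the higher limit, or "tie"}."""
    (name_a, a), (name_b, b) = limits.items()
    result = {}
    for metric in a:
        if a[metric] == b[metric]:
            result[metric] = "tie"
        else:
            result[metric] = name_a if a[metric] > b[metric] else name_b
    return result


if __name__ == "__main__":
    for metric, leader in compare(LIMITS).items():
        print(f"{metric:22} {leader}")
```

Laid out this way, the cluster-level limits are identical, while the per-host numbers differ in both directions.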

Security

VMware has made significant security enhancements to its new hypervisor. For starters, VMware now enables central management of accounts and permissions for individual host servers. It's also now possible to centrally manage password complexity rules for hosts in a cluster. VMware has also introduced a couple of new settings for the management of failed logon attempts with local accounts.

In contrast, Hyper-V is a Windows Server role, and Windows Server has long supported the central management of security and passwords via Active Directory.

Virtual Machines

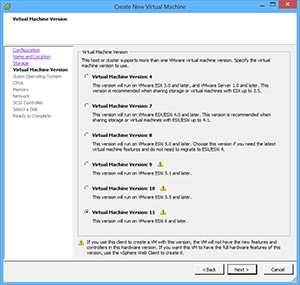

Given that VM support is the core function of any hypervisor, it should come as no surprise that VMware has taken steps to improve VM functionality. In doing so, VMware has introduced VM hardware version 11. For those not familiar with this concept, VMware has a history of introducing a new VM hardware version with each major release. For example, hardware version 9 was introduced with vSphere 5.1 and hardware version 10 was introduced with vSphere 5.5.

Figure 1. Organizations running VMware ESXi 6 and later can opt to use virtual machine version 11.

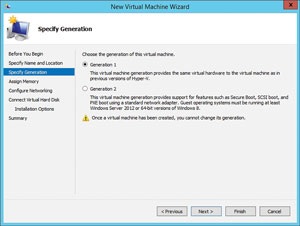

The notion of VM hardware versions is something Microsoft has only recently adopted. In Windows Server 2012 R2, Microsoft introduced generation 2 VMs (see Figure 2). These second-generation VMs use different virtual hardware than first-generation VMs. Generation 2 VMs, for example, can boot from SCSI virtual hard disks, use UEFI firmware and can PXE boot from standard network adapters (among other things).

Figure 2. Microsoft introduced second-generation virtual machines in Windows Server 2012 R2 Hyper-V.

Similar to Microsoft VM generations, VMware VM hardware versions represent changes in the virtual hardware. Version 11 VMs offer some improvements in the way non-uniform memory access (NUMA) memory is used. When memory is hot-added to a VM, that memory is allocated equally across all NUMA regions. Before, the memory was allocated only to region zero. This makes it easier to scale a VM without taking it offline.
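The difference between the two hot-add behaviors described above is easy to see with numbers. Here's a minimal Python sketch of the idea, not VMware's implementation: the `hot_add` function is hypothetical, and the way it hands any uneven remainder to the lowest-numbered nodes is my own assumption for illustration.

```python
def hot_add(node_mb, added_mb, spread=True):
    """Return per-node memory (MB) after hot-adding `added_mb` to a VM.

    spread=True  : divide the new memory evenly across all NUMA nodes
                   (the version 11 behavior described in the article).
    spread=False : place all of the new memory on node 0 (the earlier behavior).
    """
    nodes = list(node_mb)
    if not nodes:
        raise ValueError("a VM needs at least one NUMA node")
    if not spread:
        nodes[0] += added_mb
        return nodes
    share, remainder = divmod(added_mb, len(nodes))
    # Assumption: leftover MB that don't divide evenly go to the lowest nodes.
    return [mb + share + (1 if i < remainder else 0) for i, mb in enumerate(nodes)]


if __name__ == "__main__":
    vm = [4096, 4096]  # two virtual NUMA nodes, 4GB each
    print("spread:", hot_add(vm, 2048))
    print("node 0:", hot_add(vm, 2048, spread=False))
```

With the older behavior, node 0 ends up larger than its peers, so a workload that touches the new memory from the other node pays the remote-access penalty. Spreading the addition keeps the nodes balanced.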

Figure 3. VMware's Memory Hot Plug option makes it easier to scale a virtual machine without taking it offline.

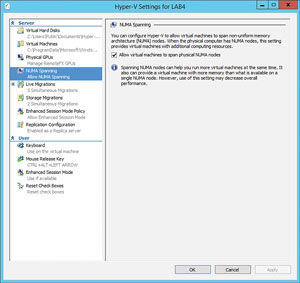

Microsoft has offered support for virtual NUMA since Windows Server 2012 Hyper-V. This virtual NUMA support allows a VM to span multiple NUMA nodes so the VM can scale to support larger workloads (see Figure 4) and can take advantage of NUMA-related performance optimizations.

Figure 4. Hyper-V also supports spanning non-uniform memory access nodes.

Another enhancement to VMware VMs in virtual hardware version 11 is an increase in the number of supported serial ports to 32. Also, vSphere 6.0 gives administrators the ability to remove unneeded serial and parallel ports.

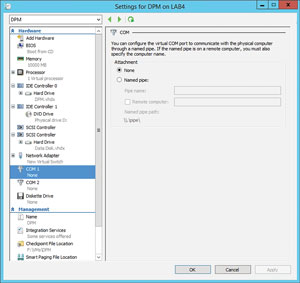

This is one area in which VMware clearly has better support than Microsoft. For all practical purposes, Hyper-V doesn't support the use of physical serial or parallel ports by VMs. However, it's possible to configure a virtual COM port to communicate with a physical computer through a named pipe (see Figure 5).

Figure 5. Virtual COM ports can communicate with physical computers through named pipes.

Microsoft also provides a workaround for generation 2 VMs, which allows a serial port to be used for debugging purposes even though virtual COM ports don't officially exist in generation 2 VMs.

VMware has also added official support for a number of new guest OSes. Among the newly supported OSes are:

- Oracle Unbreakable Enterprise Kernel Release 3 Quarterly Update 3

- Asianux 4 SP4

- Solaris 11.2

- Ubuntu 12.04.5

- Ubuntu 14.04.1

- Oracle Linux 7

- FreeBSD 9.3

- Mac OS X 10.10

VMware has published a complete list of supported OSes, which is available at vmw.re/1IUTXC7.

Like VMware, Microsoft supports a number of different guest OSes for use with Hyper-V. The supported Windows OSes (complete list available here) include:

- Windows Server 2012 R2

- Windows Server 2012

- Windows Server 2008 R2 SP1

- Windows Server 2008 SP2

- Windows Home Server 2011

- Windows Small Business Server 2011

- Windows Server 2003 R2 SP2

- Windows Server 2003 SP2

- Windows 8.1

- Windows 8

- Windows 7 SP1

- Windows 7

- Windows Vista SP2

- Windows XP SP3

- Windows XP X64 Edition SP2

Microsoft also provides support for many of the more popular Linux distributions, including:

- CentOS

- Red Hat Enterprise Linux

- Debian

- Oracle Linux

- SUSE

- Ubuntu

- FreeBSD

Virtual Volumes

The most prominent new feature in vSphere 6.0 is Virtual Volumes, which is essentially a mechanism for allowing array-based operations at the virtual disk level.

In the past, VM storage has primarily been provisioned at the LUN level. With releases prior to vSphere 6.0, a VMware administrator who needed to set up new VMs typically had to call or e-mail the storage administrator and ask for a LUN built to certain specifications. The storage administrator created the LUN, making sure it used the most appropriate storage type, RAID level and so on. Once the LUN was created, the virtualization administrator could create a datastore on it and begin creating VMs. This process might have varied slightly from one organization to the next, but in every case there was a layer of abstraction between physical storage and the individual virtual hard disks.

Virtual Volumes is designed to change the way VMs interact with physical storage. This new feature allows VMDK files (VMware virtual hard disks) to natively interact with the physical storage array and allows the physical storage array's capabilities to be exposed through vCenter. This means a VMware admin can direct the array to create a VMDK that meets a specific set of requirements.

The main advantage of using Virtual Volumes is that it allows VMware administrators to leverage the storage hardware's native capabilities at the VM level. Virtual Volumes makes it possible, for example, to clone, replicate or make a snapshot of a VMDK. Sure, VMware administrators already had the ability to clone, replicate or make snapshots of VMs, but the difference is that the Virtual Volumes feature allows those functions to be performed at the storage level rather than at the hypervisor level.
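Conceptually, asking an array for a VMDK that "meets a specific set of requirements" is a matching problem: the array advertises what it can do, and the request states what it needs. The Python sketch below illustrates only that idea. The `Array` class, the capability names and the `place_vmdk` function are all hypothetical and don't reflect the actual vCenter or Virtual Volumes interfaces.

```python
from dataclasses import dataclass, field


@dataclass
class Array:
    """A storage array and the capabilities it advertises (hypothetical model)."""
    name: str
    capabilities: dict = field(default_factory=dict)


def compatible(array, requirements):
    """True if the array advertises every required capability with the required value."""
    return all(array.capabilities.get(key) == value
               for key, value in requirements.items())


def place_vmdk(arrays, requirements):
    """Return the name of the first array that satisfies the requirements, else None."""
    for array in arrays:
        if compatible(array, requirements):
            return array.name
    return None


if __name__ == "__main__":
    arrays = [
        Array("bronze", {"raid": "5", "replication": False}),
        Array("gold", {"raid": "10", "replication": True, "snapshots": True}),
    ]
    print(place_vmdk(arrays, {"replication": True, "snapshots": True}))
```

The point of the model is that the request is made per virtual disk rather than per LUN, which is the shift the feature is meant to deliver.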

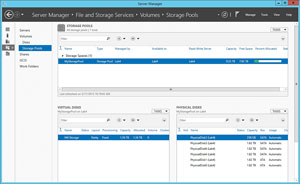

Hyper-V doesn't have an equivalent feature. However, it's important to note that Hyper-V is a Windows Server role, not a standalone product like VMware ESXi. Although Hyper-V itself doesn't have a VMware-style Virtual Volumes feature, the underlying Windows Server OS does provide native support for storage hardware. Windows Storage Spaces, for example, exposes storage to the OS. Virtual disks can be created within a storage pool in a way that mimics various RAID levels. For instance, you can create a virtual disk on top of a storage pool made up of physical disks (see Figure 6).

Figure 6. Windows Server supports the creation of virtual disks on physical storage.

Windows Server 2012 R2 is also designed to take advantage of native storage hardware capabilities by default. For example, storage arrays commonly offer a feature called Offloaded Data Transfer (ODX). Windows Server is able to leverage this feature for file copy operations, handing those operations off to the storage hardware rather than performing them at the server level. The advantage of using this feature is that the operation completes more quickly and doesn't consume CPU and network bandwidth resources on servers.

vSphere Network I/O Control Enhancements

Yet another capability VMware has enhanced in vSphere 6.0 is vSphere Network I/O Control. In practical terms, VMware now allows administrators to reserve network bandwidth for use by a specific vNIC (or a distributed port group). The benefit is that bandwidth reservations allow administrators to guarantee that VMs receive a specified minimum level of bandwidth regardless of the demand for network bandwidth made by other VMs.

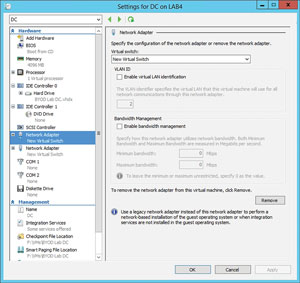

It appears Microsoft engineers also understand the importance of bandwidth management, as Hyper-V already supports the reservation of network bandwidth. Hyper-V not only lets you reserve network bandwidth on a per-vNIC basis (see Figure 7), but you can also use the Bandwidth Management feature to cap network bandwidth consumption. This is especially useful for reining in chatty VMs because it's often easier to limit your chatty VMs than to reserve bandwidth for all of your other VMs.
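To see how a minimum reservation and a maximum cap interact, consider a toy model of a single shared link. The Python sketch below is my own simplification, not how either hypervisor actually schedules traffic: the `allocate` function, the field names and the equal-share rule for spare bandwidth are all assumptions made for illustration.

```python
def allocate(capacity, vnics):
    """Toy split of link bandwidth (Mbps) among vNICs.

    `vnics` maps a name to a dict with:
      demand  : bandwidth the VM is trying to use
      minimum : reserved bandwidth (optional, defaults to 0)
      maximum : bandwidth cap (optional, None means uncapped)
    """
    # A cap limits what a vNIC may ever consume, whatever its demand.
    want = {}
    for name, nic in vnics.items():
        cap = nic.get("maximum")
        want[name] = nic["demand"] if cap is None else min(nic["demand"], cap)

    # Reservations are honored first.
    alloc = {name: min(want[name], vnics[name].get("minimum", 0)) for name in vnics}
    spare = capacity - sum(alloc.values())
    if spare < 0:
        raise ValueError("reservations exceed link capacity")

    # Assumption: spare bandwidth is shared equally among vNICs that want more.
    hungry = [name for name in vnics if want[name] > alloc[name]]
    while spare > 1e-9 and hungry:
        share = spare / len(hungry)
        for name in hungry:
            extra = min(share, want[name] - alloc[name])
            alloc[name] += extra
            spare -= extra
        hungry = [name for name in hungry if want[name] - alloc[name] > 1e-9]
    return alloc


if __name__ == "__main__":
    link = {
        "db": {"demand": 600, "minimum": 400},
        "chatty": {"demand": 900, "maximum": 200},
        "web": {"demand": 300},
    }
    print(allocate(1000, link))
```

In this model a single cap on the chatty VM protects every other VM on the link, whereas the reservation approach would need a minimum configured on each VM you want to protect.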

Figure 7. Hyper-V has its own Bandwidth Management feature.

The Winner Is...

So how does vSphere 6.0 compare to the current version of Hyper-V? In my opinion, the two hypervisors are more or less on par with one another. VMware has added some important new features; however, at least some of those new features mimic capabilities that already exist in Hyper-V or in the Windows OS.

In all fairness, there are plenty of other new VMware features not covered here. Many revolve around multi-datacenter deployments. VMware outlines these in detail in the technical white paper I mentioned earlier.

Given the new feature set that VMware has brought to the table, it'll be interesting to see how Windows Server vNext Hyper-V compares upon its release next year.